The computational life of things

Object-oriented programming in the real world

(You can read a condensed version of this article here.)

I never really liked screens. They just shine bright light directly into your eyes. Yet I stare at one for most of the day. Probably you do too. It’s strange when you think about it. Why don’t we all just go outside?

Sure, you can have your device talk to you — AI makes actual conversations increasingly possible. Projection, kinetic movement, brain implants — lots of possibilities. But what if we were to look at everyday, nondigital objects as having some kind of computational power?

You can endow things with such power using sensors, motors and microcontrollers; I’ve been doing this since the 1990s. And this got me wondering whether things actually do this on their own, without any help. It turns out there’s a long history of this idea.

This article is therefore about “object-oriented programming” in the real world. I discuss how I’ve implemented some of these ideas in museum exhibitions, then I deconstruct some programming concepts as ways of talking about real, nondigital objects. I show how we can take computing concepts out of the box and off the screen, then zoom out to look at some philosophical ideas behind objects as active agents, and the political implications of this.

Information architecture

After I learned some skills in physical computing, I worked in a museum creating interactive exhibits. There, my disdain for screens became a cause: I noticed how any sort of screen inevitably attracts the attention of visitors who, presumably, come to the museum to get away from the laptop and TV.

In experimenting with ever-more embodied interactions in the museum gallery, I began to see the whole space as a kind of computer, with inputs (data/content), processing (exhibition design, interactivity), and outputs (exhibitions, visitor learning). I first wrote about this here.

Later, I implemented some of these ideas in an exhibition-experiment with colleagues from University College London. We created a computer model with simulated visitor-agents to predict where real visitors might go and what they might attend to. Then we used projections to influence visitors’ movements, which we then tracked using computer vision.

So the space was literally “programmed” with a set of instructions implemented in a particular environment — just like in the computer. And it was populated and navigated with agents, whether computational or human.

The idea of programming physical spaces can apply not only to controlled environments like museums, but to the whole world. I explore this idea here.

Design for meaning-making

Looking in the opposite direction, I undertook a PhD that explored how museum visitors choose their own paths through a space, and how this affects what they learn. I channeled it into a framework for design: specifically, designing for how people can learn in and from museum objects, using technology. The framework focused on mobile technologies, because by then everyone was bringing their own tech to the museum. I still managed to get away from the screen, by using audio.

One of my key insights was that in such a design process, the focus should not be on designing a technology as such, but rather on designing an activity that structures people’s use of technology.

In my case, that activity was a trail, an idea that dates back to the pre-digital era and was later adopted as a model for the World Wide Web. I explored how designing a trail for visitors could be done not only by technologists or curators, but by visitors themselves: user-generated content, Web 2.0 translated to Museums 2.0.

In forming this into a framework for design, a key notion was context. Building on the work of other museum theorists, I situated learning in visitors’ personal context (their individual experience, preferences, etc.); their physical context (the museum setting and all its qualities); and their social context (were they with other people, either in person or remotely via mobile device?).

A revelation was that the objects in the museum also had these three types of context. Their social context relates to the time and place of their original creation and use. In the gallery they’re decontextualised; this constitutes their physical context, but they are at least surrounded by their friends: similar objects, generally arranged to tell some kind of story.

And the personal context of objects? That’s what separates each individual object from others. Take two of the same type of cup, for example. Each might have been used by different people and in different places, maybe one has a little chip here or there, etc. It’s become a fairly common phrase, but every object tells a story. And putting any two objects together — for example in a sequential trail — creates a new story.

De-computing the world

A lot of my academic career, therefore, has been devoted to bringing computation to physical objects on the one hand, and bringing computational ideas into the nondigital world on the other.

The first idea is widespread — “Internet of Things.” But the second sounds crazy: why think like a computer in a world that’s messy and nonlinear?

One answer is to bring control, predictability and order to complex systems and spaces. Like AI, museums come with a history and epistemology related to classification and preservation of objects as containers of knowledge.

But I’m less interested in control and prediction. Instead I like to embrace chance, randomness and the messiness and absurdity of the world. After my PhD I began teaching in an art school, and we tried to find an alternative to trendy “design thinking” that aims to solve problems and produce commercial products. We wanted to use making, not thinking. And we wanted to raise questions instead of aiming to solve problems, since, arguably, “design” broadly defined has in fact created many more problems than it has solved.

An unlikely answer was to turn to computers. Taking off from that idea of programming the real world, I called it “de-computation”. That means, yes, subtracting computational technology from the real world. But at the same time, embracing the computational capabilities of nondigital objects and environments. So, computational thinking combined with design making.

There’s some science behind the world being a naturally computational place. Biologists already embrace the idea that information (for example in genes) is fundamental to life. Some even propose that the origins of life are algorithmic in nature. Some physicists envision the entire universe as a computer.

It’s easy to see such thinking as technological determinism — the way that in the Renaissance, the universe was viewed as a mechanical clock, the most advanced technology of that time. But let’s stick with it for the moment and see if computation offers anything useful for thinking about the real world.

Back to basics

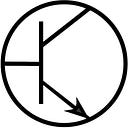

To think about object-oriented programming in this broad sense, we first need some basic terms.

First, what is computation? I like Heinz von Foerster’s definition: “computing (from com-putare) literally means to reflect, to contemplate (putare) things in concert (com-), without any explicit reference to numerical quantities.” He defines computation as “any operation, not necessarily numerical, that transforms, modifies, re-arranges, or orders observed physical entities, ‘objects’ or their representations, ‘symbols’.”

This fits with my approach: to consider computing without computers. One computer scientist said, “Computer Science is no more about computers than astronomy is about telescopes.”

What is an object?

If we refer back to René Descartes (“I think, therefore I am”, separation of mind and body), everything outside your mind is an object; you’re the subject. This is an abstract definition: everything you regard is an object of study. This neatly echoes von Foerster’s distinction between objects as physical entities and their representations, which can live inside our heads.

Let’s take a computational definition: “a tangible entity that exhibits some well-defined behavior”. It has certain properties (size, shape, etc.), so it contains data that enables us to interrogate its current state. And if it exhibits “some well-defined behavior,” this implies that it does something: it can execute some procedures or perform some sort of computation. This definition comes from this excellent introduction to object-oriented programming by Grady Booch and co-authors.

Things remember what people forget

Okay, we can agree that an object has properties like its size and shape. But what about that part about behavior, about executing procedures or doing some computation? If an object is just sitting on a shelf, is it actually doing anything?

Let’s look at the most basic case: an object is there or it’s not. If it’s there, something is different. This relates partly to appearance: that golden ceramic cat on my bookcase undoubtedly enhances the aesthetic of the room (in my opinion).

If something is there, in a very simple sense, it does something: it generates a representation in my head. Maybe it triggers a memory or a connection to something else. Every time I look at that ceramic cat, I think of Jack, that really annoying live cat I had at the time I found the ceramic one on the street.

Things remember what people forget. The Iroquois, like many cultures, use objects as mnemonic devices, for sharing and passing down stories and traditions. In my PhD research, people connected with museum objects that resonated with them in some way, whether aesthetically or triggering a personal memory. This prompted them to tell a story that someone else might resonate with, and so on.

The sociologist William Whyte found something slightly different. In his excellent film The Social Life of Small Urban Spaces, he shows how a provocative sculpture placed in a city plaza prompted spontaneous discussions between strangers. That object did something through its mere presence.

Using objects to aid memory has a very long tradition, dating back at least to Ancient Greece, in the technique of the memory palace or memory theater. (I discuss that here.)

More prosaically, if I put the trash bag next to the door, I’ll remember to take it out.

So we can say that objects communicate with us. How literally should we take this? I like art critic Roberta Smith’s thinking:

One thing about art objects: they never shut up.

If they survive, they continue to broadcast; they transmit information and spawn experiences that we savor, puzzle over, interpret and judge. With time, real people and actual events fade, but works of art of all disciplines often live to see another day, make a different impression and appear in a new light.

[…]

Every time an art object is put on view in a museum, it is to some extent, no matter how slight, seen anew; its placement in space, its neighbors and even environmental details like lighting and wall color will alter the way it is perceived and thought about. It changes us, it changes the museum, and it changes the art around it.

There is a more precise definition of what objects do, from physics: every body, every atom has mass — and that means it exerts some gravitational pull, no matter how small. In the case of Jack, some sort of weird gravity held me within this cat’s orbit for close to 20 years. But that’s something else; let’s not get into emotional connections.

But Jack’s presence — now absence — raises another point (no, nothing to do with Schrödinger’s Cat). What happens when an object appears or disappears? If you’ve ever been around small children, one of the first games they like to play is peek-a-boo: you can see the irrational joy they get when you alternately hide and show yourself or some object.

The developmental psychologist Jean Piaget studied this, and noticed that babies younger than about eight months seem to believe that when you hide something, it’s gone forever: they won’t look for it. At around eight months, they start to look for it. Piaget called this learned understanding object permanence: holding in mind something you can no longer see. (This is discussed in more detail here; sorry, paywall.)

Methods of intent

So now I’ve given you lots of evidence for what objects can do to people, without actually doing anything except existing. To sum up so far, objects contain some sort of data related to their individual attributes (their personal context). They exist within, and can affect, a physical context. And they do something — they have some functionality. Let’s explore this functionality a bit further.

First, consider the computer as belonging to a special class of object, one that functions more explicitly: it can represent other kinds of objects in binary (ones and zeros), manipulate these representations, and perform operations both on objects and using objects.

In JavaScript, for example, digital objects are containers for properties (their attributes) and methods (functions). The set of properties an object has stays consistent over time, which lets us classify objects into categories; the value of each individual property, however, can vary. My golden cat has a property we could call “shininess”, but I would characterise it as not completely shiny; if I had to quantify that property I might give it 60 out of 100. Jack had the property of “annoyingness”, and I would rate that 100.
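A minimal sketch of the golden cat and Jack as objects, written in Python rather than JavaScript as an illustrative choice: both are instances of one class with the same property names but different values. The class name `Cat`, the 0 to 100 scales and the `describe` method are hypothetical, made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Cat:
    """A hypothetical cat object: attributes (data) plus methods (behaviour)."""
    name: str
    colour: str
    shininess: int = 0      # illustrative scale: 0 (matte) to 100 (mirror-like)
    annoyingness: int = 0   # illustrative scale: 0 (serene) to 100 (Jack)

    def describe(self) -> str:
        """A method: reports the object's current state."""
        return (f"{self.name}: {self.colour}, shininess {self.shininess}, "
                f"annoyingness {self.annoyingness}")


golden_cat = Cat("golden cat", "gold", shininess=60)
jack = Cat("Jack", "black and white", annoyingness=100)
print(golden_cat.describe())  # golden cat: gold, shininess 60, annoyingness 0
print(jack.describe())        # Jack: black and white, shininess 0, annoyingness 100
```

Same property names, different values: that shared structure is what lets both be classified as cats.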

An object’s methods can be invoked (called) at different times. What exactly are these methods? Recall von Foerster’s definition of computation: “any operation, not necessarily numerical, that transforms, modifies, re-arranges, or orders observed physical entities, ‘objects’ or their representations, ‘symbols’.” So methods can include transformation, modification, rearrangement, or ordering.
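Each of the four verbs in that definition can be sketched as an ordinary operation on a shelf of objects. This is a loose illustration, assuming a hypothetical `Thing` type with made-up heights; it is not drawn from von Foerster or from any library.

```python
import random
from dataclasses import dataclass


@dataclass
class Thing:
    name: str
    height_cm: float


shelf = [Thing("vase", 30.0), Thing("golden cat", 20.0), Thing("teacup", 8.0)]

# Transform: turn physical entities into their representations ("symbols").
labels = [thing.name.upper() for thing in shelf]

# Modify: change one object's state in place (say, the teacup is set on a saucer).
shelf[2].height_cm += 1.5

# Rearrange: a seeded shuffle, so the "random" arrangement is reproducible.
rearranged = shelf[:]
random.Random(42).shuffle(rearranged)

# Order: sort the objects by a chosen property.
ordered = sorted(shelf, key=lambda thing: thing.height_cm)

print(labels)                           # ['VASE', 'GOLDEN CAT', 'TEACUP']
print([thing.name for thing in ordered])  # ['teacup', 'golden cat', 'vase']
```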

Those can apply to real objects in the real world, or to their digital representations. Working on a simulation of the messy real world inside the computer is a lot easier than working on the world itself, and it lets us test out different approaches before applying these changes in the world.

For example, if I wanted to transform my golden cat into a golden rabbit, I could start by extending its ears, perhaps using clay. (This strange example comes to mind because my brother actually created such a cat/rabbit.) I could simulate that in the computer before the messy, resource-intensive real-world work, to see how it might look.
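A minimal sketch of that simulation step, assuming a hypothetical `Figurine` record and an invented rule of thumb for when long ears make a cat read as a rabbit. The method returns a modified copy, so the "real" figurine is never touched; that is the point of trying it in the computer first.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Figurine:
    """Hypothetical digital stand-in for a ceramic figurine."""
    animal: str
    ear_length_cm: float
    material: str = "ceramic"


def extend_ears(figurine: Figurine, extra_cm: float) -> Figurine:
    """Simulated clay work: returns a new, modified copy; the original is unchanged."""
    longer = figurine.ear_length_cm + extra_cm
    # Assumed, purely illustrative threshold: ears this long read as "rabbit".
    animal = "rabbit" if longer >= 6.0 else figurine.animal
    return replace(figurine, animal=animal, ear_length_cm=longer)


golden_cat = Figurine("cat", ear_length_cm=2.0)
trial = extend_ears(golden_cat, 5.0)
print(golden_cat)  # Figurine(animal='cat', ear_length_cm=2.0, material='ceramic')
print(trial)       # Figurine(animal='rabbit', ear_length_cm=7.0, material='ceramic')
```

Making the record frozen means any "change" has to produce a new object, which mirrors trying an idea on a copy before committing clay to the original.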

This is what object-oriented technology was developed for: “to allow developers to represent the real world without changing paradigms” according to this article. It’s a way of managing complexity and building systems for the real world.

I say object-oriented technology because it’s not only a form of programming: it can also be used to analyze existing objects or design new ones. To do that, you first extract or define the data (attributes) and functions of a (real or imagined) object. What kind of data could be input and output, and how? What does it do, what do you want it to do? Then you draw, diagram, plan (for example using pseudocode) and simulate (for example Wizard of Oz-style, with a hidden person playing the part of the system).

The outside view

Interestingly, Booch et al consider this type of design both a science and an art. Fittingly, both of these fields use a strategy of abstraction to investigate and communicate (I discuss this in more detail here). The authors define abstraction as “a simplified description, or specification, of a system that emphasizes some of the system’s details or properties while suppressing others.” It’s easy to see this applied to art, which can highlight some qualities of a topic while holding back on others.

While each object has its own attributes and functions, “abstraction focuses on the outside view of an object and so serves to separate an object’s essential behavior from its implementation,” the authors write. They also add, “We like to use an additional principle that we call the principle of least astonishment, through which an abstraction captures the entire behavior of some object, no more and no less, and offers no surprises or side effects that go beyond the scope of the abstraction.”
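The “outside view” can be sketched with an abstract base class: callers depend only on what a cat-like object promises to do, while each implementation hides its own internals. The names `CatLike`, `LiveCat`, `CeramicCat` and `stroke` are hypothetical, chosen for this illustration only.

```python
from abc import ABC, abstractmethod


class CatLike(ABC):
    """The 'outside view': what any cat-like object promises to do, and nothing more."""

    @abstractmethod
    def respond_to_stroking(self) -> str:
        """Describe the response. Implementations may keep internal state,
        but should not surprise the caller with effects beyond this."""


class LiveCat(CatLike):
    def __init__(self) -> None:
        self._contentment = 0  # internal detail, hidden behind the abstraction

    def respond_to_stroking(self) -> str:
        self._contentment += 1
        return "purrs" if self._contentment < 3 else "bites"


class CeramicCat(CatLike):
    def respond_to_stroking(self) -> str:
        return "gleams"  # no internal state at all


def stroke(cat: CatLike) -> str:
    # The caller relies only on the abstraction, never on the implementation.
    return cat.respond_to_stroking()


print([stroke(LiveCat()), stroke(CeramicCat())])  # ['purrs', 'gleams']
```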

The golden cat is, of course, an abstract representation of a live cat. I recently wrote about McKenzie Wark’s Hacker Manifesto, which contains a whole section on abstraction. She writes, “Abstraction is always an abstraction of nature, a process that creates nature’s double, a second nature, a space of human existence in which collective life dwells among its own products and comes to take the environment it produces to be natural.”

A digital representation of the golden cat, such as the image I included above, is then further abstracted from its ceramic form. Each of these objects has different attributes and functions: the ceramic one brightens the room and reminds me of Jack; the digital one brightens your day (I hope) and can be copied infinitely without degradation.

Cat class

Abstraction does something else important. Because it has only some essential features of “catness”, it acts as a kind of symbol representing all cats: that is, the class or category of cats in general. That’s why the shape of the golden cat still reminds me of Jack, even though he was black and white.

Scott McCloud brilliantly illustrates this in his must-have book Understanding Comics (hint — it’s not just about comics). He shows, visually, how a detailed drawing of a person’s face represents only one single individual, but the more you remove details — to the point where it’s a smiley with two dots and a line — it comes to represent the class of all people.

Or, as Booch et al write, “abstraction arises from a recognition of similarities between certain objects, situations, or processes in the real world, and the decision to concentrate upon these similarities and to ignore for the time being the differences.”

The idea of abstracting the qualities of things in order to put them into categories is exactly what contemporary AI systems do. Classification involves discrimination (between classes), and hierarchy: Jack belongs to the class of black-and-white cats, which in turn belongs to the class of all cats.
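Class hierarchy and discrimination map directly onto inheritance. A minimal sketch, assuming a deliberately simplified, hypothetical taxonomy (real biological or AI taxonomies are far messier):

```python
class Animal:
    """Top of a hypothetical, deliberately simplified hierarchy."""


class Cat(Animal):
    pass


class BlackAndWhiteCat(Cat):
    pass


class Rabbit(Animal):
    pass


jack = BlackAndWhiteCat()

# Hierarchy: Jack is a black-and-white cat, therefore a cat, therefore an animal.
print([cls.__name__ for cls in type(jack).__mro__])
# ['BlackAndWhiteCat', 'Cat', 'Animal', 'object']

# Discrimination: Jack is classified as a cat, not as a rabbit.
print(isinstance(jack, Cat), isinstance(jack, Rabbit))  # True False
```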

Booch et al illustrate this hierarchy humorously with a cartoon of a group of architects designing a “Trojan Cat Building,” each architect working on a particular component: the “bird aggravation system”, “purring system” and so on.

Classification is also inherently subjective. Booch et al illustrate this nicely with another cartoon, of a cat which is observed by a veterinarian and (what I would classify as) a granny. The vet looks at the cat and sees its anatomy; the granny sees a ball of fur that purrs. As the authors write, “An abstraction denotes the essential characteristics of an object that distinguish it from all other kinds of objects and thus provide crisply defined conceptual boundaries, relative to the perspective of the viewer.”
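The vet-and-granny cartoon can be sketched as two different abstractions over the same underlying record: each view keeps some details and suppresses others. The field names and Jack’s values below are invented for illustration, not real data.

```python
from dataclasses import dataclass


@dataclass
class CatRecord:
    """Everything we might know about one cat (hypothetical fields)."""
    name: str
    weight_kg: float
    heart_rate_bpm: int
    fur: str
    purrs: bool


def vet_view(cat: CatRecord) -> dict:
    # The veterinarian's abstraction keeps anatomy and physiology.
    return {"weight_kg": cat.weight_kg, "heart_rate_bpm": cat.heart_rate_bpm}


def granny_view(cat: CatRecord) -> dict:
    # The granny's abstraction keeps fur and purring.
    return {"fur": cat.fur, "purrs": cat.purrs}


jack = CatRecord("Jack", 5.2, 150, "black and white", True)  # made-up values
print(vet_view(jack))     # {'weight_kg': 5.2, 'heart_rate_bpm': 150}
print(granny_view(jack))  # {'fur': 'black and white', 'purrs': True}
```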

Whoever creates the categories, they allow us to treat objects as members of classes. In the computer, this means that once you define a class of objects, you can magically create many instances of that class; each instance has the same properties but may hold different values for them. Instances of the class “cat” with the property “color” could be golden, black-and-white or any other colour.
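A minimal sketch of one class stamping out many instances, assuming a hypothetical `Cat` class: a class attribute is shared by every instance, while an instance attribute such as colour is expressed differently by each.

```python
class Cat:
    legs = 4  # class attribute: shared by every instance of the class

    def __init__(self, colour: str) -> None:
        self.colour = colour  # instance attribute: each cat expresses it differently


cats = [Cat(colour) for colour in ["golden", "black and white", "ginger", "tabby"]]

print(len(cats), {cat.legs for cat in cats})  # 4 {4}
print([cat.colour for cat in cats])           # ['golden', 'black and white', 'ginger', 'tabby']
```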

Objects in action

I put the computer into a special class of objects that can manipulate other objects or their representations, by performing operations on, or using, objects. We could also place people or cats into this class. In the case of cats, we don’t know about their internal representations of things, but they clearly use and perform operations on objects (like my sofa).

We’ve seen how objects have properties and functions, how they can be abstracted into classes. Now let’s look at how we can actually use them for programming.

Booch et al “view the world as a set of autonomous agents that collaborate to perform some higher-level behavior.” Objects, they write, “collaborate to achieve some higher order outcome.”

How do objects collaborate? Through instructions and communication. A program is defined as a sequence of operations — instructions arranged like a recipe. (Computing is defined nicely here.) You can look at this as a sort of theatrical staging or choreography.
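Collaboration through instructions can be sketched as objects sending messages (method calls) to each other, driven by a script that runs in order like a recipe. `Exhibit`, `Visitor` and the visitor “Ana” are hypothetical names made up for this illustration.

```python
class Exhibit:
    def __init__(self, name: str) -> None:
        self.name = name
        self.times_viewed = 0

    def be_viewed(self) -> str:
        self.times_viewed += 1
        return f"{self.name} has been viewed {self.times_viewed} time(s)"


class Visitor:
    def __init__(self, name: str) -> None:
        self.name = name
        self.log: list[str] = []

    def look_at(self, exhibit: Exhibit) -> None:
        # Collaboration: the visitor sends a message (a method call) to the exhibit.
        self.log.append(exhibit.be_viewed())


cat, cup = Exhibit("golden cat"), Exhibit("chipped cup")
ana = Visitor("Ana")  # hypothetical visitor

# The program: a sequence of instructions, executed in order like a recipe.
script = [(ana, cat), (ana, cup), (ana, cat)]
for visitor, exhibit in script:
    visitor.look_at(exhibit)

print(ana.log)
```

Changing the order of the script changes the outcome, which is the sense in which a program resembles choreography.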

We could say that a program, as a set of instructions, is a special class of the larger class of languages — one that can be “run” to do something. It translates theory into practice and affects things in the (digital or physical) world. Some linguists argue that all language is capable of prompting action, through “speech acts”.

What sorts of instructions or operations can objects carry out? Here are a few examples, from the Processing programming environment:

• Boolean operations: Boolean logic (check Wikipedia on George Boole and his ideas — super interesting) is a binary choice, typically a value which is true or false; numerically, one or zero. In the real world (especially at the quantum level), the question of what is true or false, fact or fiction, becomes fuzzy. Fake news anyone?

• If/then: allows a program to make a decision. I said above that a program is a sequence, implying a linear sequence; this sort of branching algorithm enables it to go in different directions, or to jump to somewhere else then return. If x then y: in the real world, it becomes a general life question.

• createImage(): In Processing, this creates an image object (a container for storing digital images). “This provides a fresh buffer of pixels to play with,” says the reference for this command. Here we can consider a scene to be a collection of points in space and time to be played with. What does this mean off the screen?

• Save: In Processing, this command lets you save an image from the environment (display window) to your device. Here we could make an analogy with a museum visitor taking a picture — thinking computationally would mean describing the actions, format, and display area of the image to be captured. Framing becomes a subjective issue.
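The operations in the list above can be loosely mimicked in Python. This does not reproduce Processing’s `createImage()` or `save()`; `create_buffer` and `capture` are hypothetical stand-ins, with the scene modelled as a grid of grey values and “saving” modelled as copying a framed region.

```python
def create_buffer(width: int, height: int, fill: int = 0) -> list[list[int]]:
    """Hypothetical analogue of a fresh pixel buffer: a grid of grey values."""
    return [[fill] * width for _ in range(height)]


def capture(scene: list[list[int]], x: int, y: int, w: int, h: int) -> list[list[int]]:
    """Hypothetical analogue of 'save': copy only the framed region."""
    return [row[x:x + w] for row in scene[y:y + h]]


scene = create_buffer(8, 6)
scene[2][3] = 255  # a bright object appears in the scene

object_present = scene[2][3] > 0  # Boolean: true or false
if object_present:                # if/then: the program branches on the Boolean
    photo = capture(scene, 2, 1, 4, 3)
else:
    photo = []

print(object_present, photo)
# True [[0, 0, 0, 0], [0, 255, 0, 0], [0, 0, 0, 0]]
```

The frame arguments to `capture` are where the subjective choice of framing shows up: a different x, y, w or h produces a different “photo” of the same scene.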

Objects’ performance and collaboration take place in some physical context: an environment, whether digital, as in a computer simulation, or physical, as in a museum exhibition. Theater is again a good analogy: in a museum, people shuffle past fixed objects, whereas in a theater, the people are fixed and the action moves across the stage. (Memory theater actually inverts this.)

As well as in a spatial context, programming takes place over time — the term “processing” contains the word “process”. In computation, time as applied to objects can be separated into states and events — one more persistent, the other punctual. This also works in the real world — for example when observing the behaviour of people (example here).
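The distinction between persistent states and punctual events can be sketched as a small state machine for an object on a shelf. The states, event names and transitions are hypothetical, invented for this example.

```python
from enum import Enum


class State(Enum):
    ON_SHELF = "on the shelf"
    IN_HAND = "in someone's hand"
    MISSING = "missing"


# Events are punctual; each one moves the object from one persistent state to another.
TRANSITIONS = {
    (State.ON_SHELF, "picked_up"): State.IN_HAND,
    (State.IN_HAND, "put_down"): State.ON_SHELF,
    (State.ON_SHELF, "knocked_off_by_cat"): State.MISSING,
}


def apply_events(state: State, events: list[str]) -> list[State]:
    history = [state]
    for event in events:
        # An event with no defined transition leaves the state unchanged.
        state = TRANSITIONS.get((state, event), state)
        history.append(state)
    return history


history = apply_events(State.ON_SHELF, ["picked_up", "put_down", "knocked_off_by_cat", "picked_up"])
print([s.value for s in history])
# ['on the shelf', "in someone's hand", 'on the shelf', 'missing', 'missing']
```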

The essence of objects

So far I’ve taken some concepts from computer programming and applied them to the real world, to show that objects can be shown to have more agency than we traditionally give them. Now I will zoom out to look at a few ideas from philosophy, in order to interrogate this supposed agency, and see how useful and applicable it might be as a way of thinking and acting.

First, let’s return to the notion of classification. Booch et al abstract an object’s “essential behaviour” in order to classify it. Viewing the essence of things, instead of their mere existence, becomes a metaphysical question. It’s one thing to say the golden cat exists or doesn’t; it’s another to classify it as either golden or a cat.

This points to the power (or failure) of language. Its very nature, and usefulness, is to classify things and communicate through shared meanings. But again, classification is subjective. Is a static ceramic thing really a “cat”? What about one with rabbit ears?

There is a type of language that resists classification by using multiple meanings and twisting words around: poetry. I discuss these ideas in greater depth here.

Secret agents

If we push back against the hegemony of human language and classification, we might start to regard objects as more equal to people. Consider, for example, how some “natural” things such as forests, and some “artificial” ones such as companies, have secured legal rights as individuals (while recognizing that the distinction between natural and artificial is itself a binary classification, and a problematic one when you look at it too closely).

A world where the human is de-centered and placed on equal terms with nonhuman things is called, in philosophy, a flat ontology, or in a more specific version, object-oriented ontology. It assumes that objects have some agency, and this in turn implies some kind of self-efficacy: a capacity to act to secure desirable outcomes, or avoid undesirable ones.

What is “desirable” then becomes subjective, whether it’s desirable from the object’s own perspective or it’s observed externally. I like to say that cars, for example, “like” to go fast, since that’s what they’re built for. This implies that when there’s an auto accident, responsibility might be shared by the manufacturer. (Doubly so with self-driving cars, and AI systems more generally.)

Cats have an advantage over cars, because as living things, they “naturally” have, like humans, urges to survive, eat, reproduce etc. The fear about AI is that it somehow achieves such agency.

It’s important to add that bestowing agency onto nonhuman things is not only done by Western academic philosophers, but is part of many cultural traditions. Some cultures in the Amazon River basin, for example, view animals, rocks and weather systems as completely human (more specifically, those things see themselves as human — Eduardo Viveiros de Castro discusses this). This way of thinking is generally termed animist, as in an object is animated from within — it has a soul, spirit or other life force. (For more, see this article.)

The implication of this line of thought, according to anthropologist Tim Ingold, is that “if agency is imaginatively bestowed on things, then they can start acting like people. They can ‘act back’, inducing persons in their vicinity to do what they otherwise might not.” He prefers to locate the agency of objects not in a spirit or soul — for that is something we impose or imagine — but as something that arises from their material properties.

This way of looking at agency is relational: the agency neither lies inside the object nor is bestowed by humans, but is in the link between them. In my PhD, I located learning (“meaning making” to be precise) exactly in the space between where visitor and object meet. In terms of the golden cat, meaning lives in that intersection between us.

Consider the quasi-object described by philosopher Michel Serres — a lump of dough for example: not quite solid or liquid, constantly changing its shape, each point in its topology changing position, it becomes an object-event (to use a term from another philosopher, Gilles Deleuze). Such objects, according to curator Noam Segal, “function as a mediator between us and the world: They act for us, on behalf of us, incorporating and transmitting our agency, almost as extensions of humans. Serres considered the iPhone such a quasi-object, and lustfully, it is.”

There are no things

What if we take this idea further, to say that relations, or interactions over time, are all there is? Sounds crazy, but again at the quantum level this seems to be the case: physicist Niels Bohr believed that what we think of as an object (an atom in his case) is a property of observed interactions between entities with no fixed position: down there, it’s constant movement.

If we take that at face value, this means that every “thing” in the world — living or not — is made of smaller things in constant motion. Nothing is fixed, everything is temporary and in constant flux: even the most stable things we might think of (rocks for example) emerge and break down over some timescale.

What’s more, biology tells us that each human body hosts roughly as many microbial cells as human ones, by recent estimates. Each of us is not, therefore, a single individual. According to social scientist John Law:

Perhaps we should imagine that we are in a world of fractional objects. A fractional object would be an object that was more than one and less than many. The metaphor draws on an elementary version of fractal mathematics. Thus a fractal line is one that occupies more than one dimension but less than two.
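To make “more than one dimension but less than two” concrete, consider the Koch curve, a standard example not mentioned by Law. Each straight segment is replaced by N = 4 copies, each scaled by a factor r = 1/3, and the similarity dimension D follows from those two numbers:

$$D = \frac{\log N}{\log (1/r)} = \frac{\log 4}{\log 3} \approx 1.26$$

As a check, an ordinary line splits into 3 copies at scale 1/3, giving log 3 / log 3 = 1, and a filled square splits into 9 copies at scale 1/3, giving log 9 / log 3 = 2; the Koch curve falls in between.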

Maybe we should go even further, to forget objects altogether. That was what J.J. Gibson proposed. He was an ecological psychologist, and urged us to look instead at surfaces, substances and the medium. What we call a “detached object,” he writes, “refers to a layout of surfaces completely surrounded by the medium. It is the inverse of a complete enclosure. The surfaces of a detached object all face outward, not inward.”

The implication is that, when we consider an object in motion, this “is always a change in the overall surface layout, a change in the shape of the environment in some sense.” Recall that point above about how objects affect their environment: he takes this quite literally. (Fuller discussion about Gibson here.)

The political life of the object

In art from the 1960s onward, there was a sort of dissolution of the object: curator Jack Burnham looked instead to systems, and Lucy Lippard chronicled the de-materialization of the object in favour of the situation.

I believe that artists are always a step ahead of everyone else (even if they articulate their ideas in poetic, not rational, ways). And this points to a broader shift of focus from objects to subjects. It’s the most obvious dichotomy in the English language, but often overlooked elsewhere: where there are objects, there must be subjects.

I’ve discussed subjectivity; we could also look at its mirror image, objectification. Politically speaking, that’s been applied to a wide range of human subjects. It implies treating humans as passive objects. If we flip that around, and assign some agency to objects, maybe they then become subjects. Cue Alexa, Siri and their growing cohort of AI actors.

Let’s zoom out further and look again at programming. It’s overly simplistic to say that technology is programming people, because we don’t want to objectify people as passive containers of attributes and functions; and conversely, “technology” never stands alone monolithically: there are always human subjects behind it, so its agency is indeed distributed.

If we consider that programming equals processing equals production, then Wark’s statement, in her Hacker Manifesto, makes sense: “Production meshes objects and subjects, breaking their envelopes, blurring their identities, blending each into new formation.”

Then we turn back to the idea of classification. Politically speaking, class means social class. Sergei Tretyakov was a politically engaged Soviet writer in the years before World War II, and in his Biography of the Object, an object is the narrator, describing its life-cycle, how it was produced, and the social relations underlying such production. Remember that line, “every object tells a story”?

Flipping things around, then, could reveal some hidden forces at work, or less conspiratorially speaking, some things that we take for granted. For example, philosopher Jean Baudrillard’s The System of Objects proposes that we have come to define our identities and social hierarchies through the objects we purchase. This is relational, as the title implies: a network of interconnected objects creates a structured environment influencing human behavior and desires. Objectification indeed.

Things to think with

I acknowledge these critical perspectives about objects, systems, agency and subjectivity. I even agree with many of them. But I do still think the notion of the object has value: as a binary opposition, the subject-object dichotomy exposes a certain mindset behind it. And in that sense any object becomes, like an aide-mémoire or like William Whyte’s sculpture in urban space, a focal point for consideration and discussion.

This is why I like applying computational ideas to the world outside the computer: not to literally program people or spaces or objects in an instrumental way, but to expose the thinking that goes into the box.

Symbols are more precise than words for describing objects. But when complete logic meets humans and other nonlinear systems, randomness and absurdity result.

And we might want to preserve some mystery about what’s inside the computer, the AI system — or any object. Have you ever seen a “Mexican jumping bean”? It quixotically moves on its own. When the Surrealist poet André Breton was first shown one, he came up with many — often fanciful — explanations.

When “the truth” was finally explained to him, it was a letdown. Once you discover such a mechanism, you shut down all other possibilities, including poetic ones. In the age of AI and existential crisis, I hope there’s still a place for wonder, curiosity, and the “defamiliarization” that the Surrealists and other artists do so well.

Further reading

I’ve put lots of links in the text, but here are more:

Objectivity: A Designer’s Book of Curious Tools
