Information in attention

A computational view of information as an active entity.

This is the third in a series of articles about information (here are the first and second). I am collecting all my notes from years of teaching into these informal articles, and updating them as new information comes in and as my views change.

“Attention is the oxygen of information. Without attention information is dead.” In this article, I will take this quote from Walter Van de Velde [1] as a starting point to ask what it means to regard information as alive. If information is nourished or sustained by attention and takes on some kind of agency, how does it use this attentional energy for some kind of activity? And what is the role of humans in this? I start by describing attentional mechanisms in human perception and cognition, then move on from what information is to what it does, its role as an actor in human society, and the implications of ascribing such agency to it.

Selective (in)attention

Exactly how do we attend to a piece of information? In two previous articles I have defined information as a fundamental physical and biological entity in terms of energy, difference and pattern. Here I expand on its subjective character, as experienced, interpreted and acted upon by humans and others.

We should first differentiate between human perception and cognition. In the last article I defined information in terms of differences in waves of energy in the environment. We perceive these differences as stimuli to our senses. Even in a dark, quiet place, there are a lot of things we can attend to at any given time — perception refers to external sights, sounds, smells etc; and interoception refers to what’s going on within our bodies. We take in all these phenomena without thinking about them, and we can consciously attend to those we choose. I will focus on external perception, not interoception, to keep us focused on information as an external entity.

Perceptual load theory presumes a limited capacity for what we can attend to at once. If there’s too much to take in, we can passively disregard things we’re not interested in. (I will define ‘interest’ below.) If there’s not a lot competing for our attention, we can actively ignore some things.

Conversely, we can also actively attend to more than one thing at once, either by switching our attention from one thing to another (multitasking), or by subtly monitoring background activity while we’re focused primarily on one thing — this is what Linda Stone calls continuous partial attention. Notifications are on; your phone is next to your laptop and you notice when it sounds or lights up.

Note here that one of these methods involves switching between things over time, and the other involves simultaneous perception (or rather, what Stone calls ‘semi-sync,’ as in not quite synchronous). Note also that both of these presume some active control over our attention, and according to perceptual load theory, that’s easier when perceptual load is low — when there’s not too much competing for our attention. Such active control relies on what scientists call “higher cognitive functions” — those that evolved later in our evolutionary history than mere perception, and accordingly reside more in the newer, outermost parts of our brains.

This is where cognitive load theory, developed by John Sweller and others, comes in, and the main higher brain function it is concerned with is memory — which can be divided into short-term or working memory, and long-term memory. This theory assumes that we process the information we perceive first in working memory, then store some in long-term memory. Note the obvious computational analogy used; this approach also uses the term “cognitive architecture.”

Like perceptual load theory, cognitive load theory is concerned with capacity and duration limits, though here these only apply to new information: According to Sweller et al, “A ready availability of large amounts of organised information from long-term memory results in working memory effectively having no known limits when dealing with such information.” The prime example here is chess, where expertise is seen not as having a complex mental model, but simply as having seen many configurations of the board and holding these in long-term memory.

There is an implicit assumption here that processing in working memory is more active than long-term storage, and therefore takes more conscious effort, or we could say it literally consumes more energy. And when we store something in long-term memory, it frees up working memory to focus on something else. This indeed applies to computers as well as humans. In fact, the capacity of working memory has been quantified: the classic estimate, from George Miller, is that we can hold about seven (plus or minus two) individual items, or “bits,” of new information in our heads at once.

But according to cognitive load theory, this depends on the intrinsic complexity of the information, and the human who’s processing it: if you’re a native English speaker, you can probably take in each of these words more easily than a non-native speaker. It also depends on how the information is presented, and the amount of working memory needed to process it.

This raises the question: What is a bit or element of information? We know the specific definition of this when it comes to computers — from binary on/off states of bits to bytes that contain eight bits, and so on. For humans, we could specify individual words, as above, or we could take a more computational view: Csikszentmihalyi (best known for the concept of “flow”) has estimated our attentional capacity at 126 bits per second; when we attend to one thing over another, this constitutes one bit of information, with an “attentional unit” equal to 1/18th of a second.
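The arithmetic behind these figures can be checked in a few lines. This is only a back-of-envelope sketch using the two numbers quoted above (126 bits per second, and an attentional unit of 1/18th of a second); the variable names are mine, and the per-minute figure is simply the same rate scaled up.

```python
from fractions import Fraction

# Figures quoted in the text above.
BITS_PER_SECOND = 126               # estimated attentional capacity
ATTENTIONAL_UNIT = Fraction(1, 18)  # duration of one attentional unit, in seconds

# Bits that fit into a single attentional unit.
bits_per_unit = BITS_PER_SECOND * ATTENTIONAL_UNIT

# The same rate scaled up to one minute.
bits_per_minute = BITS_PER_SECOND * 60

print(bits_per_unit)    # 7
print(bits_per_minute)  # 7560
```

The result, seven bits per attentional unit, lines up with the “about seven” working-memory figure mentioned earlier.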

Cognitive load theory is devoted to optimising human learning by applying cognitive science to instructional design: “Because it is novel,” write Sweller et al, “[new] information must be presented in a manner that takes into account the limitations of working memory when dealing with novel information.” This associates learning with instruction, problem-solving and memorisation of information, and is concerned with measurement and optimisation. Applied to the design of learning materials, it feeds into some practical design principles, such as how and when to use text versus pictures, where and how much of each should be presented.

Perceptual intelligence, computational creativity

But we’re not computers. Human learning is not the mere accumulation of information in memory, and not everything can be broken down to individual “information elements.” Think of learning to ride a bicycle or drive a car — millions of tiny movements are not consciously processed, recalled and recombined. The body plays a role here, and an important corollary is that some processing seems to take place outside the brain, in our arms and legs, and indeed in every cell of our bodies.

Evidence for this comes from an unlikely source: bugs. Scientists attached small rockets to cockroaches (!) and found that the insects can correct their movements very quickly — faster than it would take for a signal to go from leg to brain and back. That indicates a feedback mechanism located in the legs themselves. There is some evidence for something similar in humans, and for what is commonly called muscle memory.

In fact, these findings are now applied to computers — particularly in robots to quickly correct their movements to maintain stability. “Smart” objects have embedded sensors and processors to recognise and communicate with people, surroundings and other objects. This has been thought of as giving things “perceptual intelligence,” and we can see both benefits and dangers of doing this.

With ever faster and smaller processors, sophisticated computation (analogous to higher cognitive processing in the brain) can now take place at the edges of a network, not only in centralised servers, just as in cockroaches. This means a huge increase in complexity, prompting us to revise some of our longstanding models of centralised control in both biological and computational systems. Again, this comes with both benefits and potentially significant risks, as much of this wiring of the world is proceeding without our realising it, in many cases driven by commercial interests more concerned with short-term financial gain than with long-term effects on people and the planet.

Beyond biology, learning also lives in creativity: not only accumulating static elements of information but recombining them in new ways. This also applies to machine learning. Going back to the chess example, chess-playing computers can not only store many board configurations, enabling them to look many moves ahead and anticipate scenarios; they also seem to come up with novel solutions. (In this article I discuss machine learning as a new form of knowledge.)

Against processing

Let me now give a counterpoint. In previous articles in this series, I’ve discussed the work of James J. Gibson. In his version of perceptual intelligence, or what he calls the theory of information pickup, perception should not be separated from processing, as I did above. There isn’t a point where we stop sensing and start memorising, he says. Instead, we simply pick up, or perceive, what he calls invariants in the environment—things that don’t change—against those that do. We do this unconsciously and seamlessly within a singular perceptual system. By separating out memorised or internalised knowledge, he believes, we falsely assume that our knowledge of the world already exists somehow; instead, we constantly perceive persistence and change, and as we continue to do this, we learn over time.

Perception, in Gibson’s view, is not a series of stimulus events like messages communicated to our individual sense receptors, causing an effect inside us if they reach a certain threshold; perception is instead an act of information pickup, done by a whole perceptual system at once and in a circular process, even in the absence of any information, which is always available to us in ambient light, vibration, contact, and chemical action.

Information, then, in this way of thinking, is never exhausted—the more closely we scrutinise the world, the more information we can pick up. This contrasts with the computational approach. “Knowledge of this sort does not ‘come from’ anywhere,” he says, “it is got by looking, along with listening, feeling, smelling, and tasting.” Attention, then, is not something only in our brain—it resides in the whole input-output loop of the perceptual system. As such, it’s less like a spotlight we shine onto the world and more like a skill, and one that we can improve over time.

Attention poverty

Armed with this knowledge of what people and computers can do with information, we can now return to the question of what information can do with people and things, whether information itself can be viewed as somehow alive. As something intangible, it is a kind of ingredient that is exchanged and processed; it can’t undertake any sort of computation itself, right?

The first thing to say here is that there’s a lot of information around. We’ve seen that we selectively perceive, process and store information, and just as in nature, selection implies some sort of competition between bits of information. Herbert Simon goes further to say that not only do we consume information, but it too consumes:

In an information-rich world, the wealth of information means a dearth of something else: a scarcity of whatever it is that information consumes. What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention and a need to allocate that attention efficiently among the overabundance of information sources that might consume it.

FOCUS, you tell yourself. You’re conditioned by evolution to notice a tiny movement in your peripheral vision — it might be a predator. Your cortisol spikes with every notification, since we’re attracted by novelty; oxytocin increases in seeking the attention of others. Viewing certain kinds of images provokes the release of chemicals and physical changes in our brains and bodies. Information has biological effects on us.

BREATHE, Linda Stone tells us. When checking our email or social media, we tend to hold our breath in anticipation of that hormone hit, that informational bit of good or bad news. Breathe, says Franco Berardi: “When things start to flow so fast that the human brain grows unable to elaborate the meaning of information, we enter the condition of chaos.” Don’t focus on the flow, he says, but on your breath.

Going back to perceptual load theory, our attentional capacity is limited. As a limited resource, it becomes a kind of currency, as Herbert Simon points out. This is reflected in our language: We pay attention as if it’s money.

This is the premise of the online “attention economy” — that attention translates directly into monetary profit. Therefore, a financial view (or even a personal one, in the way we treat social media) is premised on attracting “eyeballs” to your content. Like many metaphors, this one has a basis in reality. Jonathan Crary links such commercial competition to biology:

Even as a contemporary colloquialism, the term “eyeballs” for the site of control repositions human vision as a motor activity that can be subjected to external direction or stimuli. The goal is to refine the capacity to localize the eye’s movement on or within highly targeted sites or points of interest. The eye is dislodged from the realm of optics and made into an intermediary element of a circuit whose end result is always a motor response of the body to electronic solicitation.

The eyeball is, after all, the only part of our brain that’s directly exposed to the world. So we could say that when we view information, more than when we hear, touch or taste it, we’re making a direct connection between mind and world.

Information in action

If information affects us so directly and physically, what effects does this have on us? In the second article in this series, I mentioned the environmental psychologist J.J. Gibson, who detailed the role of information in a natural environment — specifically how it affords action. In particular, his theory of affordances is about perceived actions we can take in response to information we encounter. He separates the natural environment into medium, surfaces and substances. A surface of about the height of our knees affords sitting; an enclosed space affords shelter; a stick smaller than human size affords all sorts of uses — we call such particularly useful objects tools.

Gibson’s theory has been translated into the digital environment, primarily by Don Norman, who calls the buttons, colours, links and other things we encounter on screens “perceived affordances”. Think again how movement on a screen attracts our attention, for example.

Affordances refer to a certain kind of information: the qualities or characteristics, not the semantic content, of things. So a word on a web page that’s underlined or differently coloured from its surrounding text carries a different kind of meaning than what it spells out. This extends to typography: different typefaces have been shown to prompt differences in how the words are perceived.

So there are different types of information we can glean from a scene, whether in the world or on-screen, and both types can prompt actions by us. Information in the form of computer code is a third type: it is language that prompts action when a program is run (or we could say, when it is perceived by a computer)—it not only affords possible actions which may or may not be taken by the perceiver, but directly causes specified actions to happen (technically, it performs “functions” or “operations,” consisting of “instructions” or “statements” which are “executed”). This type of information is deterministic. Note the similarity with the use of “instruction” applied to human learning in cognitive load theory.

To answer the question of whether information is alive, then, we might place it in the same position as a virus: something with some agency, that can cause physical changes in a body (even a social body, in the case of viral information). But while it requires attention-as-oxygen to sustain and reproduce itself in a process analogous to biological evolution, it lacks some vital bodily organs and so is not technically alive. (See this article for more on viruses and information.)

Van de Velde sees information in a layer that links the real and virtual worlds. Indeed, he sees no need to link these respective topological spaces directly, whether by giving human users a presence in the virtual world (as in virtual reality) or by imposing digital data onto the physical world (augmented reality). Both of these scenarios create the information overload that Herbert Simon warns of; Van de Velde instead sees agents, both human and digital, sharing information, so that information simply mediates between these two worlds.

Behaviour landscapes

We can now return to Van de Velde’s quote at the top of this article, and expand it: “Attention is the oxygen of information. Without attention information is dead. With attention it influences action.”

Van de Velde was writing at the start of this century, at the same time the notion of “perceptual intelligence” was formulated. And just as perceptual intelligence refers to formerly inanimate objects, Van de Velde was talking about endowing nonhuman things with a kind of intelligence through computation. Attention, therefore, now applies not only to humans. A couple of decades later, we know that this brings threats to privacy as well as benefits to commerce.

Specifically, Van de Velde was interested in how “smart objects” could influence our behaviour — by simply giving us advice: “Our idea is to view a piece of advice as entailing an expectation on future behavior, and to track user behavior in order to evaluate the effectiveness of the advice.” He describes a parrot-like device that might sit on someone’s shoulder and whisper in their ear, having information about the person’s spatial, temporal and social contexts. There’s someone nearby that the parrot recognises — do you want to meet them or avoid them? There’s an event happening nearby that might interest you, based on what the parrot knows about your interests and past behaviour — will you drop in? A computer could assign statistical probabilities to how you might act on such advice, and by tracking what you do in response, refine its predictive models.
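The feedback loop described here (give advice, watch what the person does, refine the prediction) can be sketched in a few lines. This is a hypothetical illustration, not Van de Velde’s own method: the `AdviceTracker` class and its field names are my invention, and the estimate is a plain Laplace-smoothed frequency, chosen only because it is the simplest thing that updates with each observation.

```python
from dataclasses import dataclass


@dataclass
class AdviceTracker:
    """Hypothetical tracker for one kind of advice (e.g. 'event nearby')."""
    followed: int = 0  # times the person acted on the advice
    ignored: int = 0   # times the person did not

    def probability_followed(self) -> float:
        # Laplace-smoothed frequency: 0.5 when nothing has been observed yet.
        return (self.followed + 1) / (self.followed + self.ignored + 2)

    def record(self, acted_on_advice: bool) -> None:
        # Update the counts from one observed response.
        if acted_on_advice:
            self.followed += 1
        else:
            self.ignored += 1


tracker = AdviceTracker()
prior = tracker.probability_followed()

# Assumed observations: the advice was followed three times out of four.
for response in [True, True, False, True]:
    tracker.record(response)

estimate = tracker.probability_followed()
print(prior, round(estimate, 3))
```

Each tracked response nudges the estimate, which is all that “refining a predictive model” needs to mean at this scale; real recommender systems are of course far more elaborate.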

At this point you will realise that systems like this are now in place, in the form of Amazon recommendations, Google search results and location tracking on smartphones.

What does it mean to be “interested” in something? For Van de Velde, an interest is simply a resource for behaviour, different from a goal or a task. Perceptual load theory and continuous partial attention are specifically targeted at goal-directed behaviour. Goals, according to Van de Velde, “are just means to an end, the end being behavior.” Interests, then, “do not need to be measurable except through the successfulness of the behaviors which they enable.” He continues:

we represent an interest as a data structure with a measurable satisfaction function defined on it. An interest represents the pursuit of a resource for behavior, but it leaves in the middle what that behavior is. Thus, an interest is neither a goal nor a task. It is not limited in time, neither is it something reachable.
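To make this concrete, here is one way such a data structure might look. It is a minimal sketch under my own assumptions: the names `Interest`, `Context` and `km_to_nearest_gig`, and the choice of a score between 0 and 1, are illustrative and do not come from Van de Velde’s paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical snapshot of a person's situation (spatial, temporal, social).
Context = Dict[str, float]


@dataclass
class Interest:
    """An interest: a named resource with a measurable satisfaction function.

    It has no deadline and no 'done' state, so it is neither a goal nor a task.
    """
    name: str
    satisfaction: Callable[[Context], float]

    def measure(self, context: Context) -> float:
        # Clamp the raw score so it always stays within [0, 1].
        return max(0.0, min(1.0, self.satisfaction(context)))


# Illustrative interest: being near live music (closer is more satisfying).
live_music = Interest(
    name="live music",
    satisfaction=lambda ctx: 1.0 - ctx.get("km_to_nearest_gig", 10.0) / 10.0,
)

near = live_music.measure({"km_to_nearest_gig": 2.0})
far = live_music.measure({"km_to_nearest_gig": 25.0})
print(near, far)
```

Note what the structure leaves out: there is no target value and no completion flag. The interest can only be measured as more or less satisfied in a given context, which is what distinguishes it from a goal or a task.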

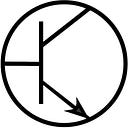

Turning from interests to behaviours, Van de Velde then maps out possible behaviours in a topology of branching decisions (see the diagram at the top of this article). Recall here Csikszentmihalyi’s definition of a decision as one bit of information. Applying such an idea to a whole “behaviour landscape,” Van de Velde thereby sees the whole world as a computer, one that we program by influencing each individual, attentional decision. In so doing, it computes individual futures, and thereby our collective future.
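A toy version of this idea shows how quickly such a landscape grows. The sketch assumes, purely for illustration, that every decision is a binary either/or worth exactly one bit; the function name `behaviour_paths` is mine, and a real behaviour landscape need not branch so neatly.

```python
from itertools import product
from typing import List, Tuple


def behaviour_paths(depth: int) -> List[Tuple[int, ...]]:
    """Enumerate every path through `depth` successive binary decisions.

    Each path is a tuple of 0s and 1s, one bit per decision.
    """
    return list(product((0, 1), repeat=depth))


paths = behaviour_paths(3)
print(len(paths))  # 8 possible outcomes from 3 one-bit decisions
print(paths[5])    # (1, 0, 1): choosing 1, then 0, then 1
```

Three decisions give eight possible futures, and each additional decision doubles the count, so influencing even a handful of attentional decisions selects one path among a very large number.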

Conclusion: A computational dialectic

To see information as alive is to view it as a virus: spreading, infecting, influencing biological, digital or organisational agents who may have shared or conflicting interests. As I detailed in this article, it has a very real, material basis: real viruses exchange information in the form of DNA, colliding atoms exchange electrons or spin states, and information resides in physical forms in brains and hard drives. At human scale, it affords actions and changes behaviour.

Culture thus amounts to information itself (explicitly for example in ‘memes’). The sociologist Alex Preda defines information as follows:

At the level of social processes, information can be seen at least as: (a) patterns of communication, broadly understood; (b) forms of control and feedback; (c) the probability of a message being transmitted through a channel; (d) the content of a cognitive state; (e) the meaning of specific linguistic forms

All of these have been discussed in this article and the previous two. Van de Velde takes a computational perspective; cognitive science takes a practical, goal-oriented view; Simon (like Dennett) approaches it in terms of evolution; Preda comes at it from economics, interested in markets and uncertainties and transactions.

Transactions, according to Paul Miller, are “a computational dialectic.” In a future article in this series, I will take a more critical perspective toward approaches to information which focus on productivity, rationality and commerce, as Crary does:

corporate success will also be measured by the amount of information that can be extracted, accumulated, and used to predict and modify the behavior of any individual with a digital identity

Sometimes we might not have explicit goals; sometimes we’re not even sure what task to focus on. Sometimes we might want to drift, browse, reflect, look around, stay open to new things. As Miller says, “When your attn is occupied, someone else takes your place. You wear a sign saying, ‘Hello, I’m not here right now.’”

Read the final article in this series.

Notes

[1] Van de Velde, W. (2003) The world as computer. Proceedings of the Smart Objects Conference, Grenoble.