How we learn through conversations

And how machines could too

You’ve probably used an AI chatbot by now. Typically, you ask it a question and it provides answers. But has one ever asked you a question, besides “What can I help you with?”

Chatbots and conversational AI agents are widespread, but because they’re almost all intended to replace people in customer service roles, they’re very limited in what they can do. Typically they are simply a slightly more friendly way of answering frequently asked questions, as in IBM’s approach.

I think there is much more potential, because (1) conversations are one of the main ways that people learn, and (2) human conversations are usually much richer than question-response pairs. I’ve been creating a new kind of conversational AI system aimed at helping both people and machines to learn, and in this article I detail how it works, how conversations are about much more than language, and what “learning” means anyway — whether you’re a human or a machine.

But what do you mean?

I’ll start by giving a working definition of learning, since that’s the goal. I had to do this as part of my PhD research, which was situated in an institute of education. At first I wasn’t sure what a PhD in Education would do for me, but I quickly realised that actually, learning is at the centre of everything — every field, every human. Learning is part of our growth and development, as well as the ongoing evolution of every scientific and artistic endeavour. And sure enough, this has served me well as I move between different areas of practice and research.

And now, learning is central to what computers do, too. Though, as Alan Blackwell reminds us, “‘learning’ is only a poetic analogy to human learning, even when done by ‘neural networks’ that while poetically named, in reality have very little resemblance to the anatomy of the human brain.” I agree with that, but my point is that when it comes to humans, I’m not only talking about what goes on in the brain.

Given that, could all that we’ve learned about human learning be applied to create better AI systems? And conversely, could innovations in machine learning be applied back to human learning? These have been my guiding questions in this research.

In terms of human education, the notion that learning is about accumulating facts is outdated. Students are no longer considered empty vessels in which teachers deposit knowledge. Nor are they like plants that teachers tend to in order to facilitate growth. After more than a century of research on learning, most education theorists regard people of all ages and life stages as playing an active role in what and how they learn. This is called constructivism. More than that, everyone uses different styles of learning, and possesses multiple “intelligences” that involve their senses and bodies, not only their minds.

I find the term “learning” to be too broad to be useful for a specific research project. It can mean so many things: growth or development, learning to tie your shoes, learning mathematics, unsupervised machine learning. I think many people also implicitly associate it with schools and formal education — and now with machines.

So in my PhD I replaced every instance of the word “learning” with meaning making. That gave me something specific to look at, and look for. A noun and a verb. It lets me define more specifically what “meaning” is in a particular context, how it might be constructed, and by whom.

At the same time, it’s also broader than “learning”. You find certain activities meaningful. You may find yourself wondering about the meaning of life. You might ask, “What do you mean?”

That last question gets at something interesting. Meaning often comes from dialogue, mutual understanding, shared interpretations. The philosopher Franco Berardi writes, “Meaning is interpersonal interpretation, a shared pathway of consciousness.” Conversations often loop back and forth, as you converge on a meaning. Meaning doesn’t come from somewhere out in the world; we participate in its making. It comes from within us, but also, often from other people too.

For the remainder of this article, I will use both “learning” and “meaning making” — sometimes interchangeably for convenience, sometimes to refer to specific approaches or aspects.

Conversations, in theory

Speaking of looping, some insights about conversations actually came from artificial intelligence research — in its early days. They were then applied to human learning. And then back to machines. Then back to humans. Stay with me, it’ll become clear.

In the 1960s and 70s, Gordon Pask drew from developments in early AI, and came to regard conversation as central to learning, since like me, he was interested in how both humans and machines learn. His conversation theory was “an attempt to investigate the learning of realistically complex subject matter under controlled conditions.” [source]

“Controlled conditions” means restricting his focus to topics that could be conceptualised with formal relations, strict definitions, and in specific conditions. It’s easy to study whether a human or machine can learn mathematics because there are clear right and wrong answers.

Conversations in Pask’s model are also asymmetrical, taking place between a learner and a teacher or experimenter, with a computer used to support the teacher or experimenter by recording the exchanges. Evidence of learning (the meanings in meaning making) was located in the spoken or written responses of the learner, or in the learner’s observed use of a device or some other materials to undertake appropriate actions, such as learning to use a scientific tool to measure something in an experiment. In other words, the learner needs to externalise the meanings they construct, one way or another, in order for a teacher or someone else to evaluate those meanings against other ones (their own, or those held in a textbook for example).

This dual focus on observing and evaluating both linguistic responses and concrete actions is the key point of Pask’s conversation theory: both are seen as ways of externalising a learner’s understanding of the topic. In his words, understanding is “determined by two levels of agreement”. The teacher tells you or shows you how to measure the salinity of a chemical solution, then you try it yourself.

If you take away just one insight from this article, take the notion that meanings are made on these two levels — through descriptions and actions, and the relations between them. Learning can be seen in mutual observation and agreement about thoughts and discoveries. “How do you think salinity works?”

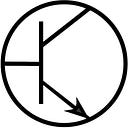

Conversational framework for learning, adapted from Laurillard (2002)

Getting constructive

In Pask’s conversational model, the difference between learner and teacher can become fuzzy. If you’ve ever taught, you know that the teacher can learn as much as students do. Education researchers spend a lot of time observing classroom situations, and how what is said and what is done moves in different directions and shifts over time.

I mentioned constructivism — the idea that we actively construct meanings instead of just passively internalising them. An extreme version of this is that we might think we see connections that might not actually be present. We might wrongly assume there are live creatures that determine the salinity of a chemical solution. An education researcher might assume the teacher holds all that chemistry knowledge in her head. A patriotic American might see connections between their elected officials and a rumoured child trafficking organisation based in a pizza restaurant.

A system could therefore be the construction of an observer through his or her descriptions of a situation. I discussed this in a previous article about cybernetics, the science of systems. The observer — whether the learner, the teacher, the researcher, a politician or a journalist — is never neutral. We all have biases, we all observe from a position that enables us to see some things and not others. We may or may not like or agree with what we see. By extension, we must share some responsibility for the meanings we make, and the ones we put back into the world.

Putting it into practice

During my PhD research, I worked for a while with Professor Diana Laurillard. She had written an influential book about rethinking university teaching, and as a result also served in the UK government making education policy.

While researching her book, Laurillard developed a model that, she told me, strikingly resembled Pask’s. “I believe he had an intuition about how learning works at a deep level,” she said. This is where Pask’s AI-influenced conversation theory gets translated for human learning.

I’ve redrawn Laurillard’s model above. I used circles to represent heads, and squares to represent bodies. These conform roughly with shapes used in flow charts, but not directly: strictly speaking, circles are where a process starts and ends, and squares indicate parts in between. My reading is more fluid, but I wanted to allude to people for simplicity and directness: descriptions are formed by language, actions are created by the body. I filled these shapes because each can be opaque: descriptions and actions need to be externalised or made visible in order for someone else to perceive them.

Laurillard believed that Pask’s model could apply to topics beyond only those with formal relations, strict definitions and specific conditions. “There is a conceptualisation of what it means to be a good poet or artist,” she told me, “then there is the practice of that. You compare your practice with the model.”

Separating meaning making into a level of descriptions and a level of actions is useful even in a fairly passive classroom situation where students are not undertaking any direct actions beyond sitting and listening.

This she calls “the second-order character of academic learning”, where “actions” might only refer to past experience or thought experiments. That level of actions might represent how the teacher conceives of some part of the world, and she wants the learner’s representation to match it.

“Similarly,” Laurillard said, “the dialogue may never take place explicitly between teacher and student. It could be a purely internal dialogue with the student playing both roles.”

But all cases involve iterative dialogue, feedback and reflection. What is called “comprehension learning” involves declaring and describing things, while “operation” or procedural learning is about doing things. Both articulate meanings through dialogue or action. The level of actions is about using theory to do things in the world, and the level of descriptions is about reflecting on what you do and observe in the world.

Any fairly complex activity therefore needs to take both into account. Language, pure theory or abstraction simply doesn’t exist without context, the body, or time, according to this useful article by Jordan and Henderson. It’s a spectrum, in which some things are accomplished more through talk (think meetings, police interrogations, interviews, lectures, stories), and some more through action (surgery, car repair, loading an airplane).

So meaning making takes on two levels — these are the horizontal levels of Laurillard’s model. But look also at the vertical levels — the two actors who take part in these activities.

The one on the left represents the teacher, and Laurillard’s other great insight, drawn from Pask, was to notice that not enough attention in education research had been paid to how teachers reflect, adapt, feed back, discuss and learn.

Looking at that model, one thing that becomes clear is that meaning making takes place between all the corners of the model: between the teacher’s and the learner’s representations of whatever topic, in the reflection that takes place within both teacher and learner, in the relations between their descriptions and actions. And as the double arrows indicate, this meaning making takes place in both directions — in other words, in feedback.

E-learning

Pask’s conversation theory had been pretty much forgotten until Laurillard picked it up around the year 2000. By that time, computers had shrunk considerably from being room-sized in Pask’s day, such that they were suddenly small enough to be sat on a desk or carried around.

And if computers could now be in the classroom, Laurillard saw a different role for them than Pask’s observer: they could help, or even replace, the teacher for some tasks. That means a computer could replace that left side of the diagram, or it could replace the bottom half — a computer model could represent some part of the world, and students could perform some actions with the machine and not only converse.

Laurillard’s conversational framework enables six different types of learning: acquisition (of facts, for example), inquiry (asking questions, undertaking investigations to answer them), practice (the teacher creates an environment with some learning goal), discussion (which can include shared practice), collaboration, and production. Which type of learning dominates a particular situation determines the nature of the feedback you get.

But I don’t want to get into more detail about these; for that you can read Laurillard’s book. I want to move on to a deeper understanding of the framework, and how I’m now applying it to machine learning.

Levelling up

First a bit of background: after my PhD I taught in art schools for a decade or so. Doing versus explaining: this is why I love working with artists and designers. There’s a lot of knowledge that you can’t put into words, whether it’s emotions, aesthetics, spirituality, sensory perception, practical skills, intuition.

To me, the level of descriptions and the level of actions represent theory versus practice, communication versus interaction, information versus experience, essence versus existence, thinking with the mind versus with the eyes and hands, what people say versus what they do.

Going back to learning theory, I mentioned constructivism — the notion that we actively make meanings. Seymour Papert went a step further with what he called constructionism: making stuff in the world makes meanings inside our heads.

A level of descriptions might still be involved. In fact, it makes for powerful learning to engage in both making stuff and reflecting on it. This is why the practice-based PhDs I now supervise are split in half, requiring both a substantial piece of writing and a body of practice. The conversational framework has been useful for helping creative practitioners reflect on and articulate their practice, moving between theory and practice.

But something else occurred to me: If Laurillard was able to replace the teacher with a computer in her model, and the teacher is also a learner, then why couldn’t you also replace the learner with a computer? Going further, could you replace either of them with some other nonhuman entity?

Let’s head off talk about spiritual beings, or hippie stuff about “plant teachers”. If we stick with systems theories (let’s maintain some degree of rationality), these have always included — in fact started from — natural systems. Here’s leading cybernetician Ross Ashby, writing in 1948:

To some, the critical test of whether a machine is or is not a ‘brain’ would be whether it can or cannot ‘think.’ But to the biologist the brain is not a thinking machine, it is an acting machine; it gets information and then it does something about it.

There’s representation and then there’s performance. Ashby’s contemporary Stafford Beer thought that ecosystems are smarter than us — not in representation but performance. Charles Darwin’s theory of evolution indeed characterises adaptation over time as a form of learning.

You might say, then, that the level of descriptions (of representations, of language) is what separates humans from nature. But if you’ve read any of my other articles, you know that I don’t believe humans are separate from nature.

Instead, we have to ask, What is a language? In Pharmako AI, K Allado-McDowell and GPT-3 contend that natural forms are a language — “it’s nature thinking”, again echoing Darwin.

What’s more, when I shoved the conversational framework in front of my students, some of them said that actions, and not only words, can be considered a language.

Computers complicate the picture even further, because programming code is language that you “run” to perform actions. Also, “speech acts” in literary theory see all language as a form of action. Physiologically, of course, this is true, since we form words by moving our tongue and lips, and even, in our brains, through the firing of neurons.

But instead of going down a rabbit hole of pedantics and semantics, let’s stick with the conversational framework as a useful construct. Counter-intuitively, I believe that separating descriptions and actions is useful because they can be indistinguishable. There is a whole range of probabilities between zero and one, for example (this is the basis for analog computing) but those two end points are useful poles to explore the spectrum of possibilities in between.

Learning by linking

So what about the computer as learner? Machine learning has been around in theory since Pask’s day (in fact he drew on the work of Papert). But it really took off when I disappeared into art school in the 2010s, and some of my students started exploring it as artistic practice, prompting me to look more closely at it (teacher as learner, remember?)

The thing about meaning making is that it is usually tied to specific contexts. That sculpture I saw in a museum reminded me of a toy I had as a child. Someone tells you about how evil insurance companies are, but this is situated within a discussion about conspiracy theories. When I think about the power of sound, I think of the film Earth vs. the Flying Saucers.

One way to represent meanings — ideas, concepts, memories — is therefore through connections between things, instead of as a single meaning or memory in a specific location. For example in the conversations we have — in those two-way arrows in the diagram.

This is how memories are stored in the brain — in the strength of connections (synapses) between neurons. The phrase “Neurons that fire together wire together” captures this.
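As a rough illustration of that phrase, here is a minimal sketch in Python of a Hebbian-style update, where a connection is strengthened whenever the two units it joins are active at the same time. The function name `hebbian_update`, the learning rate and the toy activity pattern are my own assumptions for illustration; real synapses are far more complicated than this.

```python
def hebbian_update(weights, activity, rate=0.1):
    """Strengthen the connection between every pair of units that are active together."""
    n = len(activity)
    for i in range(n):
        for j in range(n):
            if i != j:
                # The weight grows only when both units fire (1 * 1 = 1).
                weights[i][j] += rate * activity[i] * activity[j]
    return weights


# Three toy "neurons": units 0 and 1 repeatedly fire together, unit 2 stays silent.
weights = [[0.0] * 3 for _ in range(3)]
for _ in range(5):
    hebbian_update(weights, [1, 1, 0])

print(weights[0][1])  # connection between the co-active units has grown
print(weights[0][2])  # connection to the silent unit is unchanged
```

Nothing is stored "in" any single unit here. What the system remembers is held entirely in the strengths of the links between them.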

Many artificial neural networks operate in a similar way, by storing data in little chunks then putting them together. For example, to identify something in an image, such a system might break the image down into little pieces, then look for contrasts that might correspond with the edges of an object in the image, then put those together and compare it with shapes it can already identify.
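To make the "look for contrasts" step concrete, here is a small Python sketch that compares neighbouring pixels in a tiny greyscale image and reports where a vertical edge seems to run. This is a hand-written filter, which I am using as a stand-in: a trained neural network would learn many such filters from data rather than have them written by hand. The function names, the threshold and the toy image are assumptions for illustration.

```python
def horizontal_contrast(image):
    """For each pixel, the absolute brightness difference to its right-hand neighbour."""
    return [
        [abs(row[x + 1] - row[x]) for x in range(len(row) - 1)]
        for row in image
    ]


def edge_columns(image, threshold=0.5):
    """List the column positions where every row shows a strong contrast."""
    contrast = horizontal_contrast(image)
    width = len(contrast[0])
    return [
        x for x in range(width)
        if all(row[x] >= threshold for row in contrast)
    ]


# A tiny greyscale image: dark on the left (0.0), bright on the right (1.0).
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

print(edge_columns(image))  # positions where a vertical edge was found
```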

Patterns, then, are central to how both brains and computers work: by identifying patterns that are perceived on one hand, and by storing them in patterns of neurons on the other. The level of descriptions in the conversational framework, applied to machine learning, is about how some concept is represented. To communicate about it with a human requires using human language.

Asking & arguing

There is, of course, a long history of conversational AI. Eliza is a chatbot created in the 1960s that behaves like a psychotherapist by simply repeating what you type, but in the form of a question. There’s no learning involved, however, so it’s not technically AI. Yet it can do surprisingly well at the Turing Test, that famous thought experiment about whether a computer can trick a user into believing it’s human. (Apparently Eliza beats GPT-3.5 at the Turing Test.) “[T]he learning process,” Turing wrote, “may be regarded as a search for a form of behaviour which will satisfy the teacher.”
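The Eliza trick of handing your own words back as a question can be sketched in a few lines of Python. This is not Eliza's actual script, which was considerably more elaborate; the `REFLECTIONS` table, the `reflect` function and the fixed "Why do you say" opening are hypothetical simplifications of my own.

```python
import re

# Hypothetical pronoun swaps; the original Eliza script had many more patterns.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}


def reflect(statement):
    """Turn a typed statement into a question by swapping first-person words."""
    words = re.findall(r"[a-z']+", statement.lower())
    swapped = [REFLECTIONS.get(word, word) for word in words]
    return "Why do you say " + " ".join(swapped) + "?"


print(reflect("I am worried about my exams."))
```

Notice that nothing is remembered between one statement and the next, which is why there is no learning going on.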

At the very least, this should make us question a lot of contemporary claims about AI. How much of it is just trickery that looks convincingly human? As any politician knows, if you want to convince someone of something, how you say it is at least as important as what you say. This is why debate, rhetoric and arguments are so central to politics.

It turns out that argumentation is a good form of learning. There is a whole branch of pedagogical theory devoted to getting students to evaluate contrasting ideas, forming their own, defending them with evidence, refuting counter-claims, answering open-ended questions, and re-stating arguments. It works not just for training politicians, but scientists too. I constantly bother my PhD students to construct and defend a good argument, backed up with evidence.

Computers can do this too. Because formal arguments (as in law, philosophy and science) are constructed in very specific ways, computers can model them to create convincing ones. This is happening in AI systems today.

On a fundamental level, an argument in computer programming is data, which is passed to a function or method. This is sort of related to human arguments, in that the latter take in information and reasoning in order to produce a desired outcome. Formal arguments rely on logic and, again, context. In programming, the particular function provides the context — the data might not make sense to other functions.
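Here is a small Python sketch of that point about context. Both functions and the salinity readings are made up for illustration: the same data is a sensible argument for one function and meaningless to the other.

```python
def average_salinity(readings):
    """Expects a list of numeric salinity readings as its argument."""
    return sum(readings) / len(readings)


def greet(name):
    """Expects a piece of text as its argument."""
    return "Hello, " + name


readings = [34.5, 35.0, 35.5]
print(average_salinity(readings))  # the data makes sense in this context

try:
    greet(readings)  # the same data passed to a different function
except TypeError as error:
    print("greet() cannot make sense of it:", error)
```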

So beyond answering your questions by drawing on stored FAQ databases, computers can converse with you. They can ask you questions, help you learn things by providing information, maybe even change your views by forming persuasive arguments.

Communication breakdown

There’s one more way we learn from them: when things go wrong. When we interact with computers, we tend to assume that they “think” and communicate in similar ways, according to Jordan and Henderson. More and more, this is through conversational interfaces, whether typed or spoken.

But when there’s a mismatch between the meanings we make and the ones they do, confusion can result. As in life, these moments of breakdown are often where we learn — even if we merely learn how the computer interprets things differently than we do. “A successful repair constitutes learning on the part of the user,” report Jordan and Henderson, “if not in the part of the machine. This is one of the many ways human-machine interaction differs visibly and observably from communication between people.”

Therefore, it’s important to make models clear and explicit, and this includes both the mental models used by people, and the internal models used by computers. That’s what I am aiming to do in this article, and in the practical work that goes with it.

For example, in a recent book, Charles Duhigg details different kinds of conversations that people engage in. I’ve discussed questions and arguments, and he adds analytical conversations (What’s this really about?), emotional ones (How do we feel?) and identity-based ones (Who are we?). These don’t stand alone — we might switch between these different types during a single conversation. But he proposes that, in order to best connect, we should be engaged in the same kind of exchange at the same time.

Useful for humans, and also for computational interlocutors. Eliza shows that computers can engage our emotions, even when they have absolutely none themselves. They can know intimate details of our identities through all that data they collect from our online activity. Isn’t that worth a two-way conversation?

Feeding the system

Computationally, Laurillard’s level of actions refers to the outputs of a system at runtime. We can observe and feed back on these to improve the system’s performance. For example, artists working with AI might enter a text prompt and/or input one or more images or video, then wait for the system to produce something in response.

Artist Zach Lieberman works this way: “Constantly tweaking and probing, he engages in conversation with the machine, seeing what happens when a small amount of chaos is injected into the system.”

Approaches to machine learning such as active learning and interactive machine learning both involve frequent feedback mechanisms, though they differ in whom feedback is solicited from, and how. A machine’s actions might also be represented in visualisations of what’s going on inside.
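One common form of active learning is uncertainty sampling, in which the machine asks a person about the example it is least sure of. Below is a minimal Python sketch of a single round. The item names, the probabilities and the `ask_human` stand-in are all assumptions for illustration, and no real model is being trained here.

```python
def most_uncertain(predictions):
    """Pick the item whose predicted probability is closest to 0.5."""
    return min(predictions, key=lambda item: abs(predictions[item] - 0.5))


def active_learning_round(predictions, ask_human, labels):
    """Ask for one label, where the machine is least sure, and record the answer."""
    item = most_uncertain(predictions)
    labels[item] = ask_human(item)
    del predictions[item]  # no need to ask about it again
    return item


# Hypothetical probabilities that each sketch "is a cat", as estimated by some model.
predictions = {"sketch_a": 0.95, "sketch_b": 0.52, "sketch_c": 0.10}
labels = {}

# A stand-in for the person giving feedback; here they always answer "cat".
asked = active_learning_round(predictions, lambda item: "cat", labels)
print(asked, labels)
```

In this sketch it is the machine that decides what to ask about. In interactive machine learning, by contrast, the person typically chooses what to give feedback on.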

Of course it works in the other direction too: machines observe human actions in order to classify and recognise them, generally feeding back in the form of text.

I often work off the screen, though, with alternative input and output devices, in what’s called physical computing. This is a way to get away from language altogether, but it’s still a conversation. My mentors in this, Dan O’Sullivan and Tom Igoe, write that, ideally, this should be a polite conversation. This means that when one side speaks, the other listens, and that participants think before speaking and take turns. But because computers can “think” so much quicker than humans, you often need to program the machine to slow down to a human time scale before responding.
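Slowing the machine down can be as simple as adding a pause before it takes its turn. The Python sketch below is my own illustration, not code from O’Sullivan and Igoe, and a physical computing project would more likely run on a microcontroller. The `polite_reply` and `echo` names and the pause length are assumptions.

```python
import time


def polite_reply(message, respond, pause_seconds=1.0, sleep=time.sleep):
    """Listen to the whole message, pause at a human pace, then take a turn."""
    reply = respond(message)  # "think" before speaking (this part is fast)
    sleep(pause_seconds)      # deliberately slow down before responding
    return reply


def echo(message):
    """A trivial stand-in for whatever the machine actually does."""
    return "You said: " + message


print(polite_reply("hello", echo, pause_seconds=0.5))
```

Passing `sleep` in as a parameter is just a convenience, so the pause can be swapped out or shortened when trying the code.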

Expertise v. equality

If a machine can be a learner, does the human in a conversational system then become the teacher? If so, just who is the teacher here — the programmer, the person or people who provide training data, or an “expert” in some knowledge domain?

Domain expertise implies an unequal relation between teacher and learner, and the word “expertise” shares its root with “experience”. One theory of human learning is that someone doesn’t become an expert by constructing some complex mental model, but simply through experiencing many examples. For example, grandmasters in chess have seen so many board configurations that they intuitively know the right move for a given situation [source].

This view informed an earlier version of AI called “expert systems”. The idea was to input everything we know about some specific domain, so that the computer would have all the answers.
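In its simplest form, such a system is a list of hand-entered rules that are checked in order. The Python sketch below is a toy of my own, with illustrative salinity thresholds (in parts per thousand) that should not be taken as authoritative.

```python
# Hypothetical hand-entered rules for a toy "salinity expert".
RULES = [
    (lambda ppt: ppt < 0.5, "fresh water"),
    (lambda ppt: ppt < 30, "brackish water"),
    (lambda ppt: ppt < 50, "saline water"),
]


def classify(ppt):
    """Return the conclusion of the first matching rule, or admit ignorance."""
    for condition, conclusion in RULES:
        if condition(ppt):
            return conclusion
    return "no rule covers this case"


print(classify(35))
print(classify(80))
```

The second call falls outside everything that was entered, so the system has nothing to say until someone adds another rule.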

One problem with that approach is that no domain of knowledge is static — someone would have to continually add to and revise what’s inside. This is partly why machine learning has replaced this approach.

The other issue is about the nature of expertise. I described something like a teacher-student relationship as unequal. But if knowledge is constantly being revised (think of how history is continually being rewritten based on our changing understandings and values), more knowledge might not necessarily be better.

If instead we look at the relations between the participants in a conversation as more or less equal — no matter who they are or what they’re talking about — then we’re simply dealing with different perspectives. Remembering that all learners actively (and interactively) construct their own meanings, conversations remain a way of negotiating some shared understanding.

That’s why I think this represents a new approach to machine learning. Current models typically conceive of learning as the training of an individual machine by one or more humans, whether through programming, through training data, or with an individual user.

Gordon Pask and Diana Laurillard located learning not in one side of that diagram, nor in either descriptions or actions, but in the system as a whole, with conversation as the mechanism through which it takes place.