Learning from Machines: Conversations with Artists about Machine Learning

This article uses a conversational model grounded in cybernetics to frame a critical discussion of machine learning, dissecting the terms ‘machine’ and ‘learning’ through conversations with artists who use the technology. Their creative and critical perspectives on practice, combined with relevant insights from theory, raise questions about the limits of language, the importance of actions and aesthetics, and the humanist assumptions of machine learning. Together they signal a broader epistemological shift toward experience, pattern recognition, relations, and acceptance of the unpredictable and ineffable. The boundary between human and machine, and the agency of each, are shown to be fluid. A social rather than cognitive conceptualisation of learning exposes ethical and ontological rifts, the understanding and bridging of which may transcend human or mathematical languages. Here ‘recognition’ implies understanding as well as acknowledgement of existence, and ‘experience’ implies something to learn from, a form of nonlinguistic understanding, and something that can be created for others.

Introduction

Machine learning (ML) is said to be changing the nature of what it means to be human [2], but the human is simultaneously being decentred [3]. According to Matteo Pasquinelli, any machine is a machine of cognition, and such machines enable new forms of logic [4]. Conversely, Dan McQuillan regards AI as lacking cognition in a human sense: “It is simply savant at scale, a narrow and limited form of ‘intelligence’ that only provides intelligence in the military sense, that is, targeting.” [5] Should we question machine learning as a technology of control, or embrace its new forms of meaning making?

This article approaches the question by dissecting ML’s two terms, ‘machine’ and ‘learning.’ To focus the investigation, an epistemological model of communication is used to frame a critical discussion of ML. Approaches to ML are generally based on cognitive models, yet research on human learning has moved on, with many approaches now grounded in social models of learning such as activity theory [8] and constructionism [9]. This essay uses a framework based on the conversation theory of Gordon Pask [10] as the basis for conversations about ML with artists who are using it. Specifically, I held conversations with David Benqué, whose research-driven practice focuses on diagrams, prediction and speculation; Jesse Cahn-Thompson, who creates artworks using social media data to materialise the intangible; Erik Lintunen, an award-winning artist producing commissioned and collaborative artwork with elements of theatre and a strong ethical sensibility; and Anna Ridler, who exhibits work internationally and uses self-generated datasets. I also held a conversation with Professor Diana Laurillard, who adapted Pask’s theory as a conversational framework for human learning. The results are combined with relevant theory to contribute to a critical approach to ML.

The Trouble with Learning

Machine learning typically conceives of learning as the training of an individual learner. It can be considered ‘social’ in that the learner typically receives a set of training data from some external source, the data being labelled by a human (in supervised learning) or not (in unsupervised learning). The learner may make inductive inferences from these data or receive reinforcement from an external source. Such reinforcement or feedback may come from a domain expert, the programmer or system designer, or crowdsourcing, or be generated as part of the training process itself. But ML is not generally seen as an inherently social process. Active learning [13] and interactive ML [14] involve frequent feedback mechanisms, though they differ depending on how and from whom feedback is solicited [15]. Both may involve co-learning: such a ‘human-centred’ approach conceives of ML as augmentation, not automation [13], with learning seen to apply to the human as well as the machine in the system.

Posthumanist theorists Katherine Hayles [24] and Donna Haraway [25] have shown that the binary distinction between human and machine is already meaningless. Systems thinking grew in influence with the development of computers after World War II, focusing on goals at different scales and across biological, social and technical systems. Cybernetics views systems as achieving goals through iterative feedback. Within this context, Pask drew on developments in early AI, and came to regard conversation as central to learning, by humans and machines alike. Evidence of learning, in his theory, lies in the learner’s language-based (spoken or written) responses, and in observations of the learner’s use of a device or other actions. Both descriptions and actions were seen as ways of externalising understanding of a topic.

In Pask’s theory, there is no clear distinction between learner and teacher, and in fact the whole system (learner, teacher, apparatus) can also be observed by a third party such as a separate experimenter or another learner. A system could indeed be seen as the construction of an observer through his or her descriptions [26]. The difficulty of separating an observer from an observed system led to second-order cybernetics, which situates the observer as part of the system being observed. Implied in this is that “anything said is said by an observer”, highlighting the inherent subjectivity of participants and observers, and raising an ethical dimension when observers observe themselves and their own purpose and position [28]. Due to the role of emotion in human judgment, we must ask whether we like what we observe and create, taking some responsibility for it. According to Pask, what is produced is not just the product but the system of relations which includes humans [26].

The link between AI and art dates back at least to Pask, who created conversational, interactive installations in the 1950s and 1960s alongside his scientific work. ML systems such as artificial neural networks (discussed below) are much more complex than the cybernetic systems originally conceived. But posing learning as a conversation, with constant feedback, at least locates learning as a social process between two or more actors, each of whom may engage in learning.

Laurillard [30] drew on Pask’s theory to develop a framework for human learning. Goal-directed teaching strategies were refined into a set of requirements for a learning situation, which include iterative dialogue, feedback and reflection, and the separation of learning into levels of descriptions and actions. ‘Descriptions’ here refers to goal-oriented instructions and feedback expressed through language, and ‘actions’ to observable outputs or behaviours, which are then adapted iteratively based on feedback. The framework is depicted in Figure 1.

These two levels can be conceptualised alternatively as theory and practice, or information and experience, though the two levels were not so clearly separated in my discussions with artists. The framework’s level of descriptions nonetheless exposes the role of language (both human and machine) in framing the goals and assumptions in an ML system, and the level of actions frames the resulting human and machine behaviours, which may in turn be understood by each participant in terms of language.

Laurillard’s framework has been applied to learning design [31], the design of systems and materials for human learning. When the framework was adapted for e-learning, a computer was seen to take the place of the teacher in some learning designs. ML opens the possibility for a computational system to take the place of the student, not the teacher. Pask indeed imagined that either or both of the two participants in a conversational system could be human or nonhuman; as a starting point for my discussions with artists, I simply proposed replacing the student in the conversational framework with a machine.

What is ‘Learning’ in Machine Learning?

The conceptualisation of ‘learning machines’ by Pask was based on work done in the 1950s on early cybernetic devices such as the ‘perceptron’ [34]. Cybernetics locates learning in systems, whether human or nonhuman and operating at different scales. Snaza et al. [12] point out that the ‘cybernetic triangle’ of human/animal/machine is made up of fabricated borders.

The ‘learner’ in ML can simply refer to an algorithm. Adrian Mackenzie cites the following definition, from the computer scientist Tom Mitchell: “A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E” [36].
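To make the definition’s three terms concrete, here is a minimal Python sketch under purely illustrative assumptions: the task T is labelling numbers in [0, 1) as ‘high’ or ‘low’, the hidden rule is x >= 0.5, the performance measure P is accuracy on a fixed test set, and the experience E is a growing list of labelled examples. The learner, the data and all function names are hypothetical and are not drawn from Mackenzie.

```python
# Hypothetical task T: label a number in [0, 1) as 1 ("high") or 0 ("low").
# The true rule, unknown to the learner, is assumed to be x >= 0.5.
def true_label(x):
    return 1 if x >= 0.5 else 0


def learn_threshold(examples):
    """Learn a decision threshold from labelled examples (experience E).

    The threshold is the midpoint between the largest 'low' example and
    the smallest 'high' example seen so far.
    """
    lows = [x for x, y in examples if y == 0]
    highs = [x for x, y in examples if y == 1]
    return (max(lows) + min(highs)) / 2


def accuracy(threshold, test_points):
    """Performance measure P: fraction of test points labelled correctly."""
    correct = sum(
        1 for x in test_points if (1 if x >= threshold else 0) == true_label(x)
    )
    return correct / len(test_points)


test_points = [i / 100 for i in range(100)]

# Experience E grows one labelled example at a time.
stream = [(0.1, 0), (0.7, 1), (0.45, 0), (0.55, 1)]
accuracies = []
for n in range(2, len(stream) + 1):
    threshold = learn_threshold(stream[:n])
    accuracies.append(accuracy(threshold, test_points))

print(accuracies)
```

Running it prints the accuracy after two, three and four examples; in this toy setup the figures rise as experience accumulates, which is what the definition requires for the program to count as learning.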

Experience as a source of learning is enacted specifically in the back-propagation algorithm, which reverses the usual direction of computation: once a forward pass through the network has produced an output, the error is fed backwards through the layers so that the system can fine-tune its internal weights. This echoes the philosopher Søren Kierkegaard, speaking about humans: “We live forward, but we understand backwards.” [37]
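Back-propagation can be sketched in a few dozen lines of plain Python. The following is an illustrative toy, not a production implementation: a network with two inputs, a small hidden layer (three units, an arbitrary choice) and one output is trained on the XOR function, with squared error as the loss. Each step runs forward to an output, then sends the error backwards through the layers to adjust the weights. The dataset, layer size, learning rate and seed are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (an assumption for illustration): XOR of two binary inputs.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def train(epochs=5000, lr=0.5, hidden=3, seed=0):
    rng = random.Random(seed)
    # w1[j][i]: weight from input i to hidden unit j; b1[j]: hidden bias.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    # w2[j]: weight from hidden unit j to the output; b2: output bias.
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in DATA:
            # Forward pass: inputs -> hidden activations -> output.
            h = [sigmoid(sum(w1[j][i] * x[i] for i in range(2)) + b1[j])
                 for j in range(hidden)]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += 0.5 * (y - target) ** 2
            # Backward pass: propagate the output error back to the hidden layer
            # (computed before any weight is changed).
            delta_out = (y - target) * y * (1 - y)
            delta_hidden = [delta_out * w2[j] * h[j] * (1 - h[j])
                            for j in range(hidden)]
            # Gradient-descent updates using the errors sent backwards.
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                for i in range(2):
                    w1[j][i] -= lr * delta_hidden[j] * x[i]
                b1[j] -= lr * delta_hidden[j]
            b2 -= lr * delta_out
        losses.append(total)
    return losses


losses = train()
print(losses[0], losses[-1])
```

`train()` returns the total error for each pass over the data; with the fixed seed, the error at the end of training should be lower than at the start, the backward flow of error being what drives the improvement.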

An alternative definition of learning in ML cited by Mackenzie is: “learning is a problem of function estimation on the basis of empirical data” [38], where a function is a mathematical expression for the approximation or mapping of one set of values onto another, or onto variables [39]. Learning here is understood as finding a function, with experience defined purely on the basis of time [38].
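Function estimation can be illustrated with its simplest case: fitting a straight line to observations by ordinary least squares. This sketch is a deliberately small toy with invented data points; real ML systems estimate far more complex functions, but the logic of inferring a mapping from empirical data is the same.

```python
def fit_line(xs, ys):
    """Estimate f(x) = a*x + b from empirical data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]  # hypothetical noisy observations

a, b = fit_line(xs, ys)


def f(x):
    """The learned function, usable on inputs not seen during fitting."""
    return a * x + b


print(a, b, f(5.0))
```

The ‘learning’ here consists entirely of computing two numbers, a slope and an intercept, that define a mapping from inputs to outputs.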

The conversational framework I have described equates experience only with the level of actions, not that of language, on the assumption that some actions cannot be described with words and thus provide an alternative source of information or understanding. Conversely, ‘reality,’ according to McQuillan, is not only data recorded by devices; rather what we sense is already ascribed meaning — not just seeing but “a pattern of cognition” [5]. As the organising idea behind ML, data science therefore “does not simply reorganise facts but transforms them” [5].

Furthermore, ML is characterised not only in terms of the past, via the description and classification of existing data, but also in terms of prediction, and this links to positivist thinking that equates scientific value with predictive laws [5]. While classification is “perhaps the key operational achievement of machine learning and certainly the catalyst of many applications” [38], the simplest definition of learning remains learning from experience, as above. While most computers contain ‘memory’ in the form of stored data, ML is a set of one or more algorithms that make statistical inferences from these data. A learning algorithm can not only classify data but also create other algorithms to be run [40]. According to Mackenzie, an ML system such as an artificial neural network thus redraws human-machine boundaries through the feeding-forward of potentials and the backward propagation of differences [41].

This process links with the level of actions in the conversational framework. Things in the world are vectorised to make a dataset, which is then used to train a ML system such as a neural net, which in turn applies probabilities to classify subsequent data, “wherever something seen implies something to do” [42].

If machines merely reproduce patterns implicit in the structure of a dataset, can this really be called learning in a human sense? Does such a definition of learning anthropomorphise mere statistical modelling? The learning done in machine learning, according to Mackenzie, has few cognitive or symbolic underpinnings [44]. He suggests “it might be better understood as an experimental achievement” [45]. Pasquinelli concurs that AI more broadly is “a sophisticated form of pattern recognition, if intelligence is understood as the discovery and invention of new rules. To be precise in terms of logic, what neural networks calculate is a form of statistical induction” [4].

Interestingly, however, contemporary neuroscience similarly conceptualises human cognition as pattern completion, with semantic units of information treated as dimensions in a multidimensional network [46]. This suggests that the modelling of cognition is not simply one-way, from neuroscience to ML; indeed, ML is conversely used to interpret neurological data, given the volume and complexity of the latter.

What happens when we instead apply a social model to ML? If, as Mackenzie states, learning is located in a relation between machine and human, what happens when artists meet such pattern recognition in creative and critical practice?

In our conversation, Anna Ridler tentatively defined learning as “absorbing new things,” but pointed out that we cannot predict what humans will remember. She highlighted the importance of memory: language both strengthens and weakens memory, as speaking solidifies but also changes a particular memory. Memory and decay were the focus of Ridler’s 2017 film Fall of the House of Usher, for which she produced several hand-drawn datasets based on frames from the 1928 version of the film, and processed them through an ML system called a Generative Adversarial Network (GAN). This type of system involves two neural networks communicating with each other, not as conversation or collaboration but in competition, with one attempting to ‘fool’ the other. In Ridler’s work this manifested as a progressive decay in the imagery, as information was lost through each iteration of ‘learning’ by the system. (See Figure 2.)
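For readers who want the formal shape of this competition, the standard GAN objective from Goodfellow and colleagues’ original 2014 formulation is a two-player minimax game. It is given here as general background, not as a description of the particular system Ridler used. The discriminator D outputs the probability that its input is real, the generator G maps random noise z to synthetic samples, and p_data and p_z are the data and noise distributions:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

D is rewarded for telling real examples from generated ones, while G is rewarded when D mistakes its output for real data; this is the precise sense in which one network tries to ‘fool’ the other.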

Where is the Machine?

If a different form of learning arises when the statistical inferences of, say, a neural net, meet human language and subjectivities, what and where is the machine in ML, given the proliferation of methods and devices?

Ridler observed that even depicting the machine as a singular learner in the conversational framework is problematic. Algorithms, Graphics Processing Units (GPUs), software and hardware all represent different parts of the process, each with its own internal communication. She compared her use of GPUs to different kinds of paint. She uses two, and maintains control over them, insisting that ML is not a black box. It’s a process, she says, not a tool, and not a linear process at that.

David Benqué pointed out in our conversation that even an algorithm isn’t a single thing, depending on one’s perspective. He is bothered by those who regard machines as co-creators, because this already contains an assumption of independent consciousness. “If anything they’re hyper-human,” he said, with “human values and assumptions baked into mathematical space.” Asked whether he viewed the machine as a student, as in the conversational framework, he replied, “It’s more like having a thousand students, and you statistically select the best one.”

Benqué’s Monistic Almanac [55] was designed to make the human assumptions explicit, taking the historical form of the farmer’s almanac with its mix of superstition and science, and using ML to combine seemingly unlikely datasets such as commodity prices and planetary positions. Learning for him, as with Mackenzie, is a relation — the act of “baking” these assumptions into an algorithmic model reifies this relation. With the absurd combinations of datasets he used, “the scores for cross-validation are terrible,” he said. “But the predictions still work!” (See Figure 3.)
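A cross-validation score, the figure Benqué describes as terrible, estimates how well a model generalises by repeatedly training on part of the data and testing on the held-out remainder. The sketch below assumes k-fold cross-validation with contiguous folds, a least-squares line as the model, and mean squared error as the score (lower is better). These are illustrative choices, not a description of the Monistic Almanac’s actual pipeline, and both datasets are invented.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def k_fold_mse(xs, ys, k=5):
    """Average held-out mean squared error over k contiguous folds."""
    n = len(xs)
    fold_scores = []
    for f in range(k):
        held_out = set(range(f * n // k, (f + 1) * n // k))
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        a, b = fit_line(train_x, train_y)
        errors = [(a * xs[i] + b - ys[i]) ** 2 for i in sorted(held_out)]
        fold_scores.append(sum(errors) / len(errors))
    return sum(fold_scores) / k


xs = list(range(10))
related = [3 * x - 2 for x in xs]           # hypothetical: y really depends on x
unrelated = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]  # hypothetical: no relation to x

print(k_fold_mse(xs, related))    # close to zero
print(k_fold_mse(xs, unrelated))  # much larger
```

Even with a poor score like the second, a line fitted to all the data still returns a number for any new input, which is one way to read Benqué’s remark that the predictions “still work.”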

Lintunen, in our conversation, located the machine in ML as the model: a system composed of both hardware and software, but importantly devised and constructed for a specific learning purpose. As Mackenzie states, the learner in ML is a model that predicts outcomes [57]. One of Lintunen’s projects converts a melody, played or sung into a browser-based interface, into MIDI data, which a neural net then uses to improvise in the musical form of call-and-response. Learning, for him, is in patterns, similarities, differences, and recognition. (See Figure 4.)

For Lintunen, in the machine as model, an object is represented by information that simply gets passed on to the next algorithm, node, or network layer. “The system isn’t learning anything really,” he said. But transforming information into action can lead to creative outcomes; he pointed to the unpredictable (by humans) moves taken by the ML system AlphaGo in defeating its human competitor in the game of Go.

As a final perspective on the machine in ML, Jesse Cahn-Thompson similarly told me, “It’s not one thing.” This was the third time he worked with Watson, IBM’s ML system that uses human language to respond to queries (most notably winning a $1 million first prize on the US TV quiz show Jeopardy against human opponents).

Simply stating that Cahn-Thompson “worked with Watson” evokes several human associations and assumptions. Yet he didn’t ascribe any human qualities to it: “It’s more like a spreadsheet,” he said, “a container of information that it’s constantly acting on.” Benqué discusses something similar: the “accountant’s view of the world,” exemplified for him by the lists and tables in historical almanacs, which Adrienne LaFrance describes as precursors to the information age [60]. This is the universe as learning machine, its contemporary incarnation echoing previous clockwork and steam-powered analogies, each driven by the dominant technology of its age [61].

Cahn-Thompson’s view of the machine contrasts with popular accounts that ascribe sentience to machines. Lacking even a child’s natural instincts, Watson has a “profound ignorance” that stems from its lack of senses, genes, and intent of its own; he described it as a “container of experience,” echoing the purely mathematical definition cited by Mackenzie.

Cahn-Thompson also, however, described it as a ‘witness,’ with a deliberately religious connotation: his intention was to embody this intangible entity, and he did so with the body of Christ, by feeding the Gospels to Watson as a series of tweets, then subjecting Jesus to consumer sentiment analysis using a tool provided by Watson. (The same tool was used infamously by Cambridge Analytica.) He then used the results to produce an altar with relevant religious artefacts and rituals; for example, he translated the data into a hymn which was then sung by a human choir. (See Figure 5.)

While Ridler’s work was primarily with images, Benqué’s with tables and diagrams, and Lintunen’s with music, Cahn-Thompson’s use of natural language was closest to the traditional definition of a conversation. Interacting with Watson in this way, he said, was “like an online relationship with somebody you never met, that you’re just chatting with. You’re giving information to this individual, and you’re learning more about each other.”

Learning as Conversation

In Laurillard’s conversational framework, as for Pask before her, shared understanding is the goal, and conversation is the means to achieve it. Specifically, the teacher has (or builds) a model which is compared with a learner’s. “They’ve got to reach agreement,” she told me, “or generate a better thing together than they would separately.”

Ridler described her working process as more complex and nonlinear, as she works on a local machine with two processors simultaneously. “I’m conscious that I’m instructing,” she said, “but there’s not a conversation in the traditional sense.” The terminal might throw up an error, for example, which she would then need to fix. This is command on the part of the machine, not conversation — indeed, a conversation stopper.

Benqué, when asked whether he saw his interactions as a conversation, said, “Yes, but it’s more of a negotiation with the material, and with a goal in mind. Maybe it is a conversation,” he mused, “because you get things that work and don’t work, without fully understanding.” Building an ML model inevitably proceeds from the human creator’s model of some aspect of reality, but as all the artists noted, learning applies to the human as well: for Benqué, it means “learning to think like a machine learner.”

Lintunen agreed that his process was like conversation. He has an idea of what the output might be, he said, but learns from the system about the data and the algorithms. The system in turn learns too: in his case, the structure of MIDI files, with their pitches, durations, and so on.

What emerged collectively from my discussions was that conversation could be a useful way of thinking about a human-machine learning assemblage. But at least in ML as artistic practice, it is not useful to think of a human teacher and a machine learner politely taking turns to reach a shared understanding or achieve a common goal. Shared understanding might be better viewed as a form of control when a model is fitted to a dataset: no matter how disparate the datasets, fit can be forced.
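The claim that fit can be forced has a precise mathematical counterpart: any n data points with distinct x-values can be passed through exactly by a polynomial of degree at most n − 1. The Lagrange-interpolation sketch below illustrates this with invented, deliberately unrelated pairings (hypothetical values standing in for something like commodity prices and planetary positions). Exact rational arithmetic via Python’s `fractions` module avoids rounding error.

```python
from fractions import Fraction


def lagrange_fit(points):
    """Return p(x), the polynomial of degree <= n-1 through all n points.

    Requires distinct integer or rational x-values.
    """
    xs = [x for x, _ in points]
    if len(set(xs)) != len(xs):
        raise ValueError("x-values must be distinct")

    def p(x):
        total = Fraction(0)
        for i, (xi, yi) in enumerate(points):
            # Basis polynomial: equals 1 at xi and 0 at every other data x.
            term = Fraction(yi)
            for j, (xj, _) in enumerate(points):
                if j != i:
                    term *= Fraction(x - xj, xi - xj)
            total += term
        return total

    return p


# Hypothetical, arbitrarily paired data: there is no real relationship here.
points = [(1, 7), (2, -3), (4, 12), (7, 0), (9, 5)]
p = lagrange_fit(points)
print([p(x) for x, _ in points])  # reproduces every y-value exactly
print(p(10))                      # and still "predicts" a value for new input
```

A perfect fit on the data used for fitting says nothing about whether the model has captured any real pattern, which is why such fits are usually judged on held-out data.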

The Level of Descriptions

The top level of Laurillard’s conversational framework is the Level of Descriptions, in which teacher and learner articulate their conceptions, with the aim of reaching agreement through iterative evaluation and feedback.

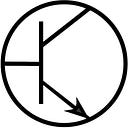

At its most obvious and basic level, language in ML is programming language, consisting of mathematical functions for manipulating numbers and data. In ML this manifests as specific algorithms or libraries of code. Data, as input or output, takes many forms, at a base level translated into binary ones and zeroes, or in material terms, the switching on and off of transistors.

But “code alone cannot fully diagram how machine learners make programs or how they combine knowledge with data,” according to Mackenzie [66]. The binarisation of the world, ‘baked into’ an ML system (in Benqué’s words), is a division of things in a dataset based on difference. Machine learners are often simply called ‘classifiers,’ their categories “often simply an existing set of classifications assumed or derived from institutionalized or accepted knowledges” [67].

Looking even deeper, in ML systems a chunk of data such as human-readable text or an image file is stored as vectors in a multidimensional space, and in this high-dimensional “latent” or “ambient” space, it is “mixed together and surrounded by noisy, meaningless nonsense” [68]. In analysing data for human use, the system extracts features from the dataset, “reducing the number of random variables, known as dimensionality reduction” [69]. Artificial neural networks additionally back-propagate errors through “hidden” layers that are not directly observed by humans, and whose operation is sometimes not understood even by their programmers, leading to popular fears of machine sentience [70]. The resulting ‘insight through opacity’ drives the observable problems of algorithmic discrimination and the evasion of due process [5].

Benqué harnesses the 1884 story Flatland: A Romance of Many Dimensions by Edwin Abbott as an analogy for this opacity [71]. In the story, a square is visited by a sphere who takes him up a dimension to Spaceland, and later down to Pointland, to gain new perspectives; the square has earlier dreamed of Lineland. Here, dimensionality reduction is condescension, and despite the story’s inherent gender and class stereotypes, it reveals in ML a neoplatonist assumption of a “hidden layer of reality which is ontologically superior, expressed mathematically and apprehended by going against direct experience” [5].
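Dimensionality reduction can be illustrated with principal component analysis (PCA), one widely used technique, though not necessarily the one the cited source has in mind. The toy sketch below reduces invented two-dimensional points to one dimension by projecting them onto the direction of greatest variance, found by power iteration on the 2×2 covariance matrix. Whatever variation lies off that direction is simply discarded, which is one concrete sense in which reduction loses information.

```python
import math


def first_principal_component(points, iterations=100):
    """Return the data mean and the unit direction of greatest variance.

    Uses power iteration on the 2x2 covariance matrix; assumes the data
    are not all identical (otherwise the covariance is zero).
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    centred = [(x - mx, y - my) for x, y in points]
    cxx = sum(x * x for x, _ in centred) / n
    cxy = sum(x * y for x, y in centred) / n
    cyy = sum(y * y for _, y in centred) / n
    vx, vy = 1.0, 0.0  # arbitrary starting direction
    for _ in range(iterations):
        wx, wy = cxx * vx + cxy * vy, cxy * vx + cyy * vy
        norm = math.hypot(wx, wy)
        vx, vy = wx / norm, wy / norm
    return (mx, my), (vx, vy)


def reduce_to_1d(points):
    """Project each 2-D point onto the first principal component."""
    (mx, my), (vx, vy) = first_principal_component(points)
    return [(x - mx) * vx + (y - my) * vy for x, y in points]


# Hypothetical points lying close to the line y = 2x.
points = [(0, 0.1), (1, 1.9), (2, 4.05), (3, 6.0), (4, 7.95)]
print(reduce_to_1d(points))  # five numbers instead of five pairs
```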

Ridler, acknowledging the use of different types of language with machines, similarly characterises all machine language as incantation — language that does something, not only describes something. Acknowledging too the humanist assumptions around ML, she notes a “dissonance when you apply human logic onto machine logic.”

The division and classification of data undertaken by ML, not only as discourse but as operation, blurs the distinction between the Level of Descriptions and the Level of Actions in Laurillard’s conversational framework, opening up the potential to speak of a language of actions.

The Level of Actions

We have seen how language can prompt action in ML — most obviously in programming code as a set of instructions, but conversely when the computer encounters an error and prompts (or instructs) the human operator to address it before continuing.

Moving in the upward direction in the conversational framework, we can speak of a language of actions, in the sense that actions can be learned, taught, inscribed with meaning and classified. Actions are necessarily turned into (machine) language for the computer in ML, as with Ridler’s hand-drawn datasets, the act of singing in Lintunen’s work, and even planetary motions in Benqué’s.

But as philosopher Karen Barad argues, “language has been granted too much power,” noting that “every ‘thing’ — even materiality — is turned into a matter of language or some other form of cultural representation” [73]. Language is famously efficient for communication, as Laurillard reminded me. “But it’s not enough,” she said. “You can’t learn just by being told.”

In fact, in certain domains, she added, language is practice — for example argumentation. “Actions are how you interpret and make decisions,” she told me, “and then you create output in the world, then you get feedback, which would enable you to learn through practice.” She gave up on AI, however, because it seemed to stop at the Level of Descriptions: “I can see how sensory feedback can be modelled, but what do you do about emotion and preference and experience and memory? The same fact is interpreted differently depending on where it appears in a particular context.”

One of the first ML systems, the perceptron [34], used a series of photocells to observe lights turning on or off, representing binary states. Pasquinelli traces the genealogy of the algorithm further back, finding its roots in material practices and mundane divisions of space, time, labor, and social relations. “Ritual procedures, social routines, and the organization of space and time are the source of algorithms, and in this sense they existed even before the rise of complex cultural systems such as mythology, religion, and especially language” [74].
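The perceptron’s learning rule is simple enough to sketch directly. In the toy Python version below, each input is a list of 0/1 values standing in for lights that are off or on, and the hypothetical target rule is “output 1 when at least two of three lights are on.” This is the textbook error-correction rule, not a model of the original photocell hardware: weights are nudged towards the correct answer only when the output is wrong.

```python
from itertools import product


def train_perceptron(samples, epochs=100, lr=1.0):
    """Perceptron error-correction rule on binary input vectors."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            output = predict(weights, bias, x)
            error = target - output  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


def predict(weights, bias, x):
    """Fire (1) if the weighted sum of inputs plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Hypothetical rule: output 1 when at least two of three lights are on.
samples = [(list(x), 1 if sum(x) >= 2 else 0) for x in product([0, 1], repeat=3)]
weights, bias = train_perceptron(samples)
print(weights, bias)
```

Because this rule is linearly separable, the perceptron convergence theorem guarantees that the loop stops making mistakes after finitely many updates; a rule such as XOR, which is not linearly separable, could never be learned by a single perceptron.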

Can the presence or absence of something be considered the most rudimentary form of ‘language,’ predating humans? This lies at the opposite end from the incomprehensible hypercomplexity inherent in multidimensional arrays of data, and both co-exist in ML systems. Cybernetics and other types of systems thinking, from Wiener’s binary ‘decisions’ to claims of the algorithmic nature of reality [75], place such a language beyond humans, and humanism. Can anything elude capture and control by some form of language?

It is useful to look at communication between artists and machines that do not take explicitly linguistic form. What happens when outputs are represented nonlinguistically to humans, and subjected to human pattern recognition? As noted, ML results are almost always evaluated by humans.

Ridler told me she writes scripts to generate visual feedback, and said “there’s more of a conversation here, in an artistic sense.” She is clear that it is not a collaboration, as she always maintains aesthetic control in selecting from thousands of drawings produced by the machine. She deliberately avoids labelling the outputs, instead printing and sorting by hand. As such, much of her work resides in documentation of the process, not just in final products.

Benqué worked similarly, though more screen-based: diagrams for him are the interface. He means this literally, since the core of ML mathematics is about vectors and diagrams. “I would argue,” he said, “that the code is a diagram as well. And so is language.” I would add that Laurillard’s conversational framework is also a diagram, one which embodies certain relations founded in formal education. Mackenzie, following Charles S. Peirce, similarly sees ML “as a diagrammatic practice in which different semiotic forms — lines, numbers, symbols, operators, patches of color, words, images, marks such as dots, crosses, ticks, and arrowheads — are constantly connected, substituted, embedded, or created from existing diagrams” [78].

Cahn-Thompson, though he worked with linguistic input, received a diagram as output: a sunburst chart depicting consumer personality traits. Complete with an avatar pictured in the centre, the chart struck him with its resemblance to the Catholic monstrance, which he duly produced in physical form, based on the Gospels of Jesus. He deliberately aimed for the ineffable and emotional qualities inherent in religious iconography.

This section concludes by noting that both human and machine participants in artistic ML can communicate in nonlinguistic ways not completely comprehensible to the other: binary machine code or multidimensional diagrams on the one hand, emotionally laden imagery or iconography on the other. This raises the vital role of observation.

Observation

Observation is central to cybernetics, with an observer situated either outside or as part of a system. By extension, in Pask’s conversation theory conversants observe each other’s actions, and there may be an additional observer. Central to the humanist notion of objectivity is empirical observation; McQuillan calls this “onlooker consciousness,” wherein a supposedly outside observer not only observes but manipulates an experimental condition [5]. Quantum theory, as described by Barad [79], and second-order cybernetics both undercut this supposed separation, situating the observer as part of the system being observed.

By extension, every observer is partial — both in the sense of having a partial view, and in having a viewpoint or opinion. This is the ethical position discussed previously. Similarly for machines: ML “makes statements through operations that treat functions as partial observers,” according to Mackenzie. Every ‘witness,’ to use Cahn-Thompson’s chosen term, can only give partial testimony.

What is the ethical position of a machine as observer? Is such a question even valid or answerable? The extent to which we can say a machine observer ‘likes’ what it observes exposes the flaw in separating it from the human — as in second-order cybernetics, it cannot be separated from the system it observes.

We can locate its ontological and epistemological position in language. As discussed, machine language is different from human language, as a language of functions and operations. Operation and observation are conflated, however, as operational functions transform data, and observational functions render the effects of those transformations visible in statistical or diagrammatic forms [81]. Such forms may then be attributed ineffable or otherwise human qualities by their human observers. Here we may link back to Neoplatonism and its notion of ideal forms: in machinic terms, perfect geometric forms described mathematically; in human terms, forms apprehended aesthetically, and as such, subjectively. We could say that these forms are as hidden or inaccessible to machinic observers as high-dimensional vector spaces are to human ones.

Machine Learning as Art, not Science

Ridler is concerned that most of the research being done on ML assumes access to many more processors than the two she relies on. “It’s starting to lock out experimentation from people who are not affiliated with [big companies],” she said. Mackenzie notes that many of the brightest computer scientists have been incentivised to work on ML algorithms for the optimisation of online advertising. Cahn-Thompson pointed out that writing code is often abstracted from its end use. Here, then, is another hidden layer: that of commercial exploitation. According to McQuillan, “The opacity of machine learning is not only that of the black box. It is also a consequence of algorithms hidden behind the high walls of commercial secrecy” [5].

As an artist, Cahn-Thompson used the Twitter API because it was free, and used only the free services provided by Watson. The machine in ML, he said, “is controlled by those with access to it.” He thought of his ‘student’ in the conversational framework not in terms of agency, but its ability to be manipulated. ML as a ‘container of experience,’ as Cahn-Thompson described it, defines us — as consumers, programmers, humans. In exhibiting his work, he found viewers surprisingly unconcerned about this aspect. In this sense he learned more about humans than about the machine, he said.

Conclusion

Is the ‘machine’ all of us? “The way out of a machinic metaphysics that eludes accountability,” according to McQuillan, “is to find a form of operating that takes embodied responsibility. Moreover, this embodiment should start at the ‘edges’.” Drawing on Barad, he suggests that a counterculture of data science “can be a critique that also becomes its own practice” [5]. Based on my conversations, recasting ML as a critical pedagogy means exposing hidden layers, but also accepting the ways of understanding particular to each participant, while maintaining a degree of control and an ethical position.

If a machinic understanding is insufficient for comprehending emotion or aesthetics, this is where artists have a role. This does not mean relying on machines solely for brute-force calculation; rather, we should embrace the fact that they enact a different form of creativity. Machines are already making art, Cahn-Thompson pointed out, however art is defined. Lintunen pointed to the creativity of AlphaGo, and Ridler mentioned physicists who work with DeepMind in order to be pushed out of their comfort zone, collaborations that have already led to new discoveries [86].

Taking a posthumanist stance means discarding a binary separation between us and them: accepting that machines are what make us human, and that humans are machines as much as we are animals. The relationship is one of continuity. Object-oriented ontology goes even further, focusing on relations between all things independently of pre-formed identities. Again, this requires “an emergent ethics and/or accountability for what is produced” [89]. Cybernetics shows one way forward, but it remains historically contingent.

Returning to the conversational framework, this means collapsing the distinctions between teacher and learner, and between description and action. An extended version of the framework [91] adds a third actor: another learner, who engages in peer learning with the first. In the context of artistic practice, we can regard this additional actor as the audience. Here we return to the role of experience, both as a time-based container that unifies descriptions and actions and as something we can design for others, with the ethical responsibilities that such an act entails. ML shows how we can learn from our errors and feed forward. But we should accept that our conversations may sometimes be free of language.

References

[1] Erik Lintunen, Latent Spaces: Construction of Meaning and Knowledge through New Media, Art, and Artificial Neural Networks (MA dissertation, Royal College of Art, 2019), p. 10.

[2] Louise Amoore, “On Intuition: Machine Learning and Posthuman Ethics,” Keynote speech at Digital <Dis>Orders — 7th Annual Graduate Conference Cluster of Excellence “The Formation of Normative Orders,” 17 Nov 2016.

[3] Cary Wolfe, What is Posthumanism? (Univ. of Minnesota Press, 2009)

[4] Matteo Pasquinelli, “Machines that Morph Logic: Neural Networks and the Distorted Automation of Intelligence as Statistical Inference,” Glass Bead 1 (2017). Available at: http://www.glass-bead.org/article/machines-that-morph-logic/?lang=enview

[5] Dan McQuillan, “Data Science as Machinic Neoplatonism,” Philosophy and Technology 31 (2018), pp. 253–272. doi:10.1007/s13347-017-0273-3.

[6] Adrian Mackenzie, Machine Learners: Archaeology of a Data Practice (MIT Press, 2017)

[7] Pedro Domingos, The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World (Basic Books, 2015)

[8] Victor Kaptelinin and Bonnie Nardi, Acting with Technology: Activity Theory and Interaction Design (MIT Press, 2006)

[9] Seymour Papert, Mindstorms: Children, Computers and Powerful Ideas (Basic Books, 1980)

[10] Gordon Pask, “Conversational Techniques in the Study and Practice of Education,” British Journal of Educational Psychology 46 (1976), pp. 12–25.

[11] Hugh Dubberly and Paul Pangaro, “Cybernetics and Design: Conversations for Action,” in Fischer, T. and Herr, C. (Eds.) Design Cybernetics (Springer, Design Research Foundations series, 2019)

[12] Nathan Snaza, Peter Appelbaum, Siân Bayne, Marla Morris, Nikki Rotas, Jennifer Sandlin, Jason Wallin, Dennis Carlson, John Weaver, “Toward a Posthumanist Education,” Journal of Curriculum Theorizing 30(2) (2014), 39–55.

[13] Josh Lovejoy and Jess Holbrook, “Human-Centered Machine Learning,” Medium, 10 July 2017.

[14] Mark B. Cartwright, “The Moving Target in Creative Interactive Machine Learning,” Proc. CHI EA, 2016.

[15] Marco Gillies, Rebecca Fiebrink, Atau Tanaka, et al., “Human-Centred Machine Learning,” Proc. CHI EA, 2016.

[16] Paulo Freire, Pedagogy of the Oppressed (New York: Continuum Books, 2005)

[17] Diana Laurillard, Teaching as a Design Science: Building Pedagogical Patterns for Learning and Technology, p. 93 (Routledge, 2012)

[18] Lev Vygotsky, Mind in Society (Harvard Univ. Press, 1978/1930)

[19] John Dewey, Art as Experience (Perigee Press, 2009/1934)

[20] Snaza et al, “Toward a Posthumanist Education,” p. 50.

[21] Evelyn Fox Keller, Making Sense of Life: Explaining Biological Development with Models, Metaphors, and Machines (Harvard University Press, 2009)

[22] Eleanor Dare, “Out of the humanist matrix: Learning taxonomies beyond Bloom,” Spark: UAL Creative Teaching and Learning Journal 3(1) (2018) https://sparkjournal.arts.ac.uk/index.php/spark/article/view/79

[23] Dare, “Out of the humanist matrix.” While I agree with Dare’s general argument, my understanding of two of her sources differs. Vygotsky’s approach to learning is typically characterised as social constructivism, wherein meaning is made in the world, not in the head. Papert’s approach is constructionism, not constructivism as she says: constructivism is associated with Piaget, and constructionism is an adaptation of it, wherein the construction of things in the world is said to foster mental construction.

[24] N. Katherine Hayles, How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics (Univ. of Chicago Press, 1999)

[25] Donna Haraway, “A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late Twentieth Century,” in Simians, Cyborgs and Women: The Reinvention of Nature (New York: Routledge, 1991), pp. 149–181.

[26] Hugh Dubberly and Paul Pangaro, “How cybernetics connects computing, counterculture, and design,” in Blauvelt, A. (Ed.) Hippie Modernism: The Struggle for Utopia (Walker Art Center, 2015)

[27] “Cybernetics Revisited,” Leonardo Electronic Almanac 22(2).

[28] Humberto Maturana, “Metadesign” (paper presented at Instituto de Terapia Cognitiva (INTECO), Santiago, Chile, 1 August 1997), http://www.inteco.cl/articulos/006/texto_ing.htm

[29] Stephen Wilson, “Computer Art: Artificial Intelligence and the Arts,” Leonardo 16(1) (1983), pp. 15–20.

[30] Diana Laurillard, Rethinking University Teaching: A Conversational Framework for the Effective Use of Learning Technologies (Routledge, 2002)

[31] Kevin Walker, Diana Laurillard, Tom Boyle, Claire Bradley, Tim Neumann and Darren Pearce, “Introducing theory to practice in pedagogical planning,” CAL’07 conference, 2007.

[32] Snaza et al, “Toward a Posthumanist Education,” p. 47

[33] Mackenzie, Machine Learners, p. 182

[34] Frank Rosenblatt, “The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain,” Psychological Review 65(6) (1958), pp. 386–408. doi:10.1037/h0042519.

[35] Leah Kelly, “Self of Sense,” in Jones, Uchill and Mather (Eds.) Experience: Culture, Cognition and the Common Sense (MIT Press, 2016)

[36] Mackenzie, Machine Learners, p. 1

[37] Søren Kierkegaard, Journalen JJ:167 (1843)

[38] Mackenzie, Machine Learners, p. 82

[39] Mackenzie, Machine Learners, p. 80

[40] Domingos, The Master Algorithm, p. 6

[41] Mackenzie, Machine Learners, p. 182

[42] Mackenzie, Machine Learners, pp. 4–5

[43] Mackenzie, Machine Learners, p. 100

[44] Mackenzie, Machine Learners, p. 27

[45] Mackenzie, Machine Learners, p. 84

[46] Sasa L. Kivisaari, Marijn van Vliet, Annika Hultén, Tiina Lindh-Knuutila, Ali Faisal and Riitta Salmelin, “Reconstructing Meaning from Bits of Information,” Nature Communications 10 (2019), https://www.nature.com/articles/s41467-019-08848-0.pdf

[47] Pierre Huyghe, “Pierre Huyghe in Conversation with Hans Ulrich Obrist” (video), Serpentine Gallery, London, 3 Oct 2018, https://www.serpentinegalleries.org/exhibitions-events/pierre-huyghe-uumwelt

[48] Guohua Shen, Kshitij Dwivedi, Kei Majima, Tomoyasu Horikawa and Yukiyasu Kamitani, “End-to-End Deep Image Reconstruction From Human Brain Activity,” Frontiers in Computational Neuroscience 13 (2019). doi:10.3389/fncom.2019.00021

[49] Pierre Huyghe, “Introduction to the Exhibition,” Uumwelt Exhibition Guide, Serpentine Gallery, London (2018) https://www.serpentinegalleries.org/files/downloads/1615.serp_pierre_huyghe_guide_final.pdf

[50] Lintunen, Latent Spaces, p. 36

[51] Lintunen, Latent Spaces, p. 44

[52] Domingos, The Master Algorithm, p. 81

[53] Mackenzie, Machine Learners, p. 49

[54] Mackenzie, Machine Learners, p. 212

[55] David Benqué, “Cosmic Spreadsheets,” in Voss, G. (Ed.) Supra Systems (Univ. of the Arts London, 2018), pp. 59–76.

[56] Snaza et al, “Toward a Posthumanist Education,” p. 47

[57] Mackenzie, Machine Learners, p. 46

[58] Lola Conte, Avery Chen, Eva Ibáñez, Georgia Hablützel and Amy Glover (Eds.), “Recognition,” AArchitecture 37 (2019). (Architectural Association, London)

[59] John Fass and Alistair McClymont, “Of Machines Learning to See Lemon,” in Voss, G. (Ed.) Supra Systems (Univ. of the Arts London, 2018), pp. 54–58.

[60] Benqué, “Cosmic Spreadsheets,” p. 69

[61] Seth Lloyd, “The computational universe,” in Davies, P. and Gregersen, N. (Eds.) Information and the Nature of Reality (Cambridge Univ. Press, 2010); Walter Van de Velde, “The World as Computer,” Proceedings of the Smart Objects Conference, Grenoble, 2003.

[62] Chris Crawford, The Art of Interactive Design: A Euphonious and Illuminating Guide to Building Successful Software (No Starch Press, 2002)

[63] Tom Igoe and Dan O’Sullivan, Physical Computing: Sensing and Controlling the Physical World with Computers (Thomson Course Technology, 2004), Ch. 8.

[64] Mackenzie, Machine Learners, p. 46

[65] Pasquinelli, “Machines that Morph Logic”

[66] Mackenzie, Machine Learners, p. 210

[67] Mackenzie, Machine Learners, p. 10

[68] Lintunen, Latent Spaces, p. 37

[69] Lintunen, Latent Spaces, p. 38

[70] Lintunen notes that “the internal state of hidden layers can be probed to an extent through interactive visualisations, as demonstrated through TensorFlow Playground,” in Latent Spaces, p. 23.

[71] David Benqué, “Hyperland, a Scam in n-dimensions,” Conférence MiXit, Lyon FR 2019, https://mixitconf.org/2019/hyperland-a-scam-in-n-dimensions

[72] Mackenzie, Machine Learners, p. 6

[73] Karen Barad, Meeting the Universe Halfway (Duke University Press, 2007)

[74] Matteo Pasquinelli, “Three Thousand Years of Algorithmic Rituals: The Emergence of AI from the Computation of Space,” e-flux, 101 (2019). Available at: https://www.e-flux.com/journal/101/273221/three-thousand-years-of-algorithmic-rituals-the-emergence-of-ai-from-the-computation-of-space/

[75] Stephen Wolfram, A New Kind of Science (Wolfram Media, 2002), www.wolframscience.com/nksonline/toc.html; Sarah Imari Walker and Paul Davies, “The algorithmic origins of life,” J. R. Soc. Interface 10(79) (2013), 20120869. https://arxiv.org/abs/1207.4803

[76] Federico Campagna, Technic and Magic (Bloomsbury, 2018)

[77] Lintunen, Latent Spaces, p. 51

[78] Mackenzie, Machine Learners, p. 49

[79] Barad, Meeting the Universe Halfway.

[80] Mackenzie, Machine Learners, p. 81

[81] Mackenzie, Machine Learners, p. 86

[82] Lintunen, Latent Spaces, p. 37

[83] Mackenzie, Machine Learners, p. 180

[84] Fass and McClymont, “Of Machines Learning to See Lemon,” p. 57.

[85] Benqué, “Cosmic Spreadsheets,” pp. 69–70.

[86] James Bridle, New Dark Age: Technology and the End of the Future (Verso, 2018)

[87] Lucy Suchman, Human-Machine Reconfigurations (Cambridge Univ. Press, 2006), p. 285.

[88] Rosi Braidotti, The Posthuman (Polity Press, 2013)

[89] Snaza et al, “Toward a Posthumanist Education,” p. 47

[90] Snaza et al, “Toward a Posthumanist Education,” p. 51

[91] Laurillard, Teaching as a Design Science, p. 93.

[92] Snaza et al, “Toward a Posthumanist Education,” p. 44

Acknowledgements

Thanks to the artists, Diana Laurillard, Eleanor Dare, the 2019 SIGCHI workshop on Human-Centred Machine Learning, and various peer reviewers.