Who’s steering the AI ship?

(No one: it’s a self-organising system.) Why cybernetics is still relevant today.

“How does a gathering become a happening, that is, greater than a sum of its parts?”

Writing almost a decade ago, anthropologist Anna Tsing is talking about mushrooms. She’s also talking about social groups, and about capitalism.

Now let’s go back 60 years. A television reporter is interviewing another anthropologist, Margaret Mead, about increasing automation. He wonders whether automation might be considered “socialistic” (for this is Cold War America) if it frees people up to do things other than work for private companies.

“Well it’s only socialistic if you regard every piece of progress ever made in the United States as socialistic,” she replies, and she goes on to make a strong case for automation. “We’ll have to change some of our conceptions. And we’ll have to realise that when machines do the manufacturing, we’ll be richer, not poorer.”

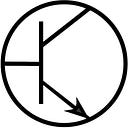

Mead was armed not only with decades of experience studying cultures outside America, but also with cybernetics: the study of how systems organise, regulate, reproduce themselves, evolve and learn. That includes social systems, technological systems, and natural systems.

You can see in her words above how it’s still relevant today. But nobody really talks about cybernetics anymore — it seems like a dated concept. If it’s still relevant, where did it go?

An answer comes from another anthropologist, Mead’s daughter Mary Catherine Bateson:

The tragedy of the cybernetic revolution, which had two phases, the computer science side and the systems theory side, has been the neglect of the systems theory side of it. We chose marketable gadgets in preference to a deeper understanding of the world we live in.

Why cybernetics?

Why revive the systems theory side of cybernetics? Here’s Bateson again:

a lot of work that has been done to enable international cooperation in dealing with various problems since World War II is being pulled apart. We’re seeing the progress we thought had been made in this country in race relations being reversed. We’re seeing the partial breakup — we don’t know how far that will go — of a united Europe. We’re moving ourselves back several centuries in terms of thinking about what it is to be human, what it is to share the same planet, how we’re going to interact and communicate with each other. We’re going to be starting from scratch pretty soon.

That was 2018. As she tells it, systems theories enable dynamic, not static, analysis — they point us not only to what’s wrong, but also to how we got here and, if we don’t change direction, where we might end up. It’s not about what things are, but about what they do. About becoming, not being. Organism, not mechanism. Process, not product.

The technical side of systems is easy to see. Platforms such as Uber formalise some social relationships into technical systems. Ollie Palmer calls this the transgression of web tech into the real world.

Cybernetics provides a way of thinking about both of these worlds. According to the artist Hans Haacke, writing in 1971:

The difference between ‘nature’ and ‘technology’ is only that the latter is man-made. The functioning of either one can be described by the same conceptual models, and they both obviously follow the same rules of operation. It also seems that the way social organisations behave is not much different. The world does not break up into neat university departments. It is one super system with myriad subsystems, each one more or less affected by all the others.

This realisation is what drew together artists like Haacke with scientists, engineers and others, starting in the 1940s — the moment when the Second World War funded the rapid development of computers, communications technologies and ideas such as Claude Shannon’s information theory. It’s important to keep those military origins in mind — they still simmer at the centre of such theories and the technologies they’re linked with. The name “cybernetics” comes from the Ancient Greek kubernetes — the one who steers the ship. So there are elements of top-down command and control involved. But let’s see what’s useful and relevant.

Things that think

Let’s get into some specific aspects of cybernetics. An unlikely one is that every system has a goal. I struggled with this at first: obvious for something like a technical system or a company, but what about a nonhuman organism, or a social system that organically emerges over time?

I found that if you dig deep enough, you can uncover something like a goal, with intentionality and even planning, in these systems. For example, philosopher Daniel Dennett describes nature as a designer, analogous to a human one but characterised by what he calls “competence without comprehension”.

How do systems achieve their goals? Through feedback and self-regulation. The classic cybernetic example is a thermostat: It has a specific temperature as a goal, and adjusts its behaviour until it reaches that steady state.
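To make that feedback loop concrete, here is a minimal Python sketch of an on/off thermostat. It is illustrative only: the room model (heat lost in proportion to the difference between indoor and outdoor temperature), the function name `simulate_thermostat` and every parameter value are hypothetical assumptions, not taken from any real device.

```python
def simulate_thermostat(goal=20.0, start=12.0, outside=5.0,
                        heater_power=1.0, leak=0.05, band=0.5, steps=200):
    """Simulate a room steered by a simple on/off thermostat.

    Hypothetical toy model: all parameters are illustrative assumptions.
    Returns a list of (temperature, heater_on) pairs, one per time step.
    """
    temp = start
    heater_on = False
    history = []
    for _ in range(steps):
        # Feedback: compare the current state with the goal and adjust behaviour.
        # The dead band (+/- band) stops the heater switching at every tiny change.
        if temp < goal - band:
            heater_on = True
        elif temp > goal + band:
            heater_on = False
        # Environment: the heater adds heat; the room leaks heat to the outside.
        gain = heater_power if heater_on else 0.0
        temp += gain - leak * (temp - outside)
        history.append((temp, heater_on))
    return history


if __name__ == "__main__":
    for step, (t, on) in enumerate(simulate_thermostat()[:40]):
        print(f"step {step:3d}  temp {t:5.2f}  heater {'on' if on else 'off'}")
```

Run it and the temperature climbs from its starting point, then hovers in a narrow band around the goal as the heater switches on and off. The "steady state" in this toy model is a regulated oscillation rather than a single fixed number: the system keeps correcting itself in response to feedback.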

Literary theorist N. Katherine Hayles characterises this as reflexivity. Such self-regulation, she observes, means there is information flowing through a system. So you can look at how much information flows through a system, and how quickly it can adjust its behaviour or its state to react to feedback. And here is a link between information, the materiality of the physical world, and energy.

This is what’s happening inside a computer, and inside natural and social systems — think of anything you can characterise as a system (your body, your workplace, school or university, a self-driving car) and you can analyse it by tracing these flows of information, feedback and adjustment. Conversely, if you design a system, these are very useful things to consider.

Hang on — think about this a moment. And as you’re thinking, your brain — as a natural system — is performing exactly the same actions, from a cybernetic perspective. Looking in the other direction, this means that other systems are basically thinking, as we understand it.

This radical thought came from Gregory Bateson (Mary Catherine’s father and Margaret Mead’s husband). It means that mental processes are the main organising activity of life. What’s happening inside our heads is just like what’s happening out in the world. The only difference is that natural systems do this “thinking” through their actions — through performance — not through abstract representations (as far as we know). If you accept that such intentional activity constitutes some sort of “intelligence”, it’s easy to see how creatures without a brain or a nervous system exhibit a degree of agency.

How about artificial systems? If this theory applies to computer systems, it might change how we think about “artificial intelligence” — specifically, it doesn’t have to mimic human intelligence.

Looking further back, writer James Gleick traces the history of information and finds that the telephone network operates in the same way — as a kind of social nervous system. He treats it as an active agent, responsible for the rapid industrial progress that followed its invention well over a century ago. People, social groups, cities, regions and nations came to rely on the instantaneous communication the telephone made possible, because it saved time and energy (recall the role of these things in relation to information within a system).

The telephone, he shows, changed architecture — it made the skyscraper possible. If you could simply pick up a phone to communicate with someone instead of writing a letter or running next door, you could build offices stacked on top of each other, packing more people, stuff and information into one parcel of land.

In a recent article, I argued that nonliving objects have a kind of agency that “programs” people — they “want” things. We could characterise the telephone network that way.

We are whirlpools

Let’s shift back to natural systems for a moment. Self-organising systems exhibit a degree of autonomy, they have goals, they want things. The goal of a living thing, argue Sara Imari Walker and Paul Davies, is to survive, to perpetuate itself — to fight against chaos or entropy (just as a message is related to noise in information theory). This, they argue, is how life arose in the first place: to simplify, stuff in a primordial soup started bumping into each other (a computational action that produces information, according to physicist Seth Lloyd). And at some point, two things started working together, exchanging information in a more decisive way.

Things accumulated, and at some point came a key transition: bottom-up self-organisation suddenly flipped, to gain a degree of top-down control: the thing became more than the sum of its parts. “More is different,” as physicist P.W. Anderson said. Information is the basis for life, the universe, and everything.

Within such a universe, a thing, an organism, even a human, is a temporary form for existing in the world — fighting entropy, chaos, and death as long as it can. “We are but whirlpools in a river of ever-flowing water,” said pioneering cybernetician Norbert Wiener. And maybe we live on beyond our lifespan through information, for example in the DNA or ideas we leave behind.

In regulating themselves and making their way in the world, systems make intentional decisions. “Decisions are important,” writes Hayles, “not because they produce material goods but because they produce information. Control information, and power follows.”

Aha — you’ve heard it before: information is power. And where there’s power, there’s politics. Could you ever have imagined that politics could be applied to nonhuman systems?

Abstract≠real

It’s not so strange once it dawns on you that humans are, after all, animals, and thus to some extent driven by evolutionary impulses (like the drive to survive). Competition: it transcends the human species. One thing I learned in anthropology is to look for the roots of any human behaviour in our predecessors. And indeed, we see both cooperation and competition in groups of nonhuman primates.

We characterise other lifeforms, like some insects, as social animals too. But in a systems perspective, all individual organisms are themselves systems, composed of subsystems and part of supersystems. And we find examples of competition and cooperation well beyond so-called social species. Maybe, as with “intelligence”, we need to expand our definition of “politics”.

It’s easier to see politics at work in human-created systems. If we then apply the above argument to, say, a company, we know there is cooperation within this system and competition with other company-systems.

We also know that all systems have a goal, so what’s the goal of any company? If we regard it as a self-organising system, we can acknowledge that some humans intentionally started and now lead the company, but if we take that algorithmic definition of life posed by Walker and Davies, at some point there’s a flip — from bottom-up to top-down organisation. It becomes a self-regulating system with its own goal. What’s the goal? Survival — to be that temporary whirlpool in the river of capitalism, fighting off chaos and entropy while it produces — guess what — information. Information that goes on to shape the future. Survival for a startup means going public or getting bought by Google.

When we link politics and economics and systems theory like this, we’re in the realm of ideology. What’s ideology? It’s a system of ideas — literally.

Hayles discusses the notion of downloading the contents of a brain into a computer. This is a wacky idea because it takes Shannon’s information theory at face value, treating information as an abstract entity that we can perform mathematical operations on. But since humans are also animals, information in brains isn’t quantifiable like that; it’s tied to biological processes, which we still don’t understand very well.

“Thus,” she concludes, “a simplification necessitated by engineering considerations becomes an ideology in which a reified concept of information [in which the abstract is considered real] is treated as if it were fully commensurate with the complexities of human thought.”

Level up

I hope you can see by now that cybernetics provides a useful way of looking at things. But here’s a strange question: When we look at things, is that all we’re doing? David Benque, one of my former PhD students, writes: “The ‘easy certainty that knowing comes from looking’ is rooted in the same problematic positivism which underlies predictive systems in the first place; the idea that an objective and stable truth can be observed or revealed.”

Predictive systems? No stable truth? No objectivity? Could we be part of a system ourselves, just by observing a system from the outside? That’s exactly what Margaret Mead said. Her radical innovation was to apply cybernetics to cybernetics itself. Observation is communication — like any system, it generates information, it’s goal-directed, it can be self-regulating, it has feedback and subsystems and is part of larger systems.

This is called second-order cybernetics. With Mead’s insight, the observer is implicated in what they are studying. They cannot be separated from the system under observation. Gregory Bateson added that all experience is subjective.

What is the goal of the observer-system? Observers describe. This description is typically provided to another observer — a scientist, for example, publishes her findings in an academic journal. “The description requires language,” write contemporary cyberneticians Hugh Dubberly and Paul Pangaro. “And the process of observing, creating language, and sharing descriptions creates a society.”

Interestingly, this insight about the subjectivity of the observer arose independently in physics — specifically quantum physics, when scientists realised that simply observing a particle could change the particle’s behaviour.

Physicist Fritjof Capra notes that this shifted physics from an objective to an “epistemic” science. Epistemology is about how you come to know what you know, how knowledge is created. And he describes how this knowledge-production process “has to be included explicitly in the description of natural phenomena.” He quotes fellow physicist Werner Heisenberg: “What we observe is not nature itself, but nature exposed to our method of questioning.” (Karen Barad is also good on this.)

So first-order cybernetics is the science of observed systems. Second-order cybernetics is the science of observing systems.

And because our acts of observation are often aided by technology, Joanna Zylinska argues that human “intelligence” has always been artificial. Is the human eye a tool, or is the camera an organ? After all, she points out (referencing philosopher Vilém Flusser), the eye has its own program, and the camera is not neutral.

But how do you feel?

Biologist Humberto Maturana added a new wrinkle. “Anything said is said by an observer.” The observer is not neutral — s/he observes from a position, and changing that position changes their view of the thing being observed. “Position” here can be literal and geographical. But it is also ethical and emotional: Do we like what we’re observing? And because the observer can affect the phenomenon under observation (I know this from observing nonhuman primates during my anthropology training), the observer is not only implicated, they have some responsibility.

“We human beings,” writes Maturana, “can do whatever we imagine…. But we do not have to do all that we imagine, we can choose, and it is there where our behavior as socially conscious human beings matters.” We don’t only observe; we’re (all) responsible for the world we live in, and the things and the information that we put into the world. Not just steering towards a goal but discovering goals — learning what matters.

Going back to the question I posed at the start of this article — Where did cybernetics go? — one answer is that it became embedded in science, and in design. Where scientists observe, designers create. Charles and Ray Eames, for example, were keen systems thinkers. Christopher Alexander and his students revolutionised architecture (and software design) with their pattern language — he regarded systems as a kit of parts: systems generating systems. Today, artist/architect Patricia Mascarell merges social systems with architecture and textiles.

Recent cyberneticians like Dubberly and Pangaro, Ranulph Glanville and his student Delfina Fantini van Ditmar, all regard design and cybernetics as pretty much the same thing: everything designed is part of a system, and is itself a system. As Dubberly and Pangaro write, “If design, then systems; if systems, then cybernetics; if cybernetics, then second-order cybernetics; if second-order cybernetics, then conversation.” (Conversation is a whole other big topic I am aiming to tackle in a future article.)

According to Zylinska:

Cybernetics offers us a systemic view where all dimensions are entangled and communicate with each other. But feminism and post-colonial critique show how we need to stop and examine particular moments within this system.

[…]

not all systems are born equal, have equal tasks and equal forms of embeddedness. For example, second-level cybernetics missed out on interrogating more deeply the cultural dimension of systems. It did recognize the existence of cultures, but it failed to grasp those cultures’ agency — as well as their transmissibility across generations.

These feminist and post-colonial critiques helped shape what is sometimes known as third-wave cybernetics, which includes theorists like Hayles. It brings cybernetics up to the 1990s by connecting it with cyberspace on one hand, and the body on the other. Cue Donna Haraway, for example.

The art of cybernetics

I want to conclude this article by mentioning one other area cybernetics disappeared into: art. What prompted me to revisit the topic was an event at the Institute of Contemporary Arts in London commemorating its 1968 exhibition Cybernetic Serendipity, and exploring its relevance in the age of AI.

So, to go back to the other question I posed at the start — Why understand systems? One answer is, because we can make interesting art. The exhibition mostly displayed computer art of that era (I discuss one such piece here), along with technical information, and a thick special issue of the magazine Studio International contained much more.

In art, the influence of cybernetics goes far beyond machine aesthetics and using the computer to make art. (For machine aesthetics, look up the 1934 Machine Art exhibition at MoMA.) Critic Jack Burnham noted as early as the 1960s that the proliferation of real-time systems was increasingly abstracting the concrete materiality of things into information:

[A]rtists are “deviation amplifying” systems, or individuals who, because of psychological makeup, are compelled to reveal psychic truths at the expense of the existing societal homeostasis. With increasing aggressiveness, one of the artist’s functions […] is to specify how technology uses us.

So all that stuff about systems, observation, ethics, subjectivity and responsibility — it was there in the 1960s with artists like Hans Haacke and Gustav Metzger. The artist and the work as a system, questioning the gallery system, social systems, the art world’s connections with capital — you can see this in social practice artists like Agnes Denes and Mierle Laderman Ukeles back in the day, Maria Eichhorn and Pilvi Takala today.

To take just one example, artist Anna Ridler made Wikileaks: A Love Story when she was my student at the Royal College of Art. In the trove of documents published by WikiLeaks, she discovered a romance documented in an email trail between two US government employees, and made it into an artwork via augmented reality — remember that bit about the camera being implicated in our act of observation? Artists are masters of drawing our attention to systems, not shying away from the ethical issues, and gathering up elements that become something more.

Let’s extend Anna Tsing’s quote from the top of this article, where she goes on to answer her own question:

“How does a gathering become a happening, that is, greater than a sum of its parts? One answer is contamination. We are contaminated by our encounters; they change who we are as we make way for others.”

Want more? I wrote an article analysing the Covid pandemic using the systems theory of Donella Meadows.

A note about these articles, and some advice:

These articles are meant to be informal, introductory and fairly brief. If you do any kind of research, you know that on any given topic, there’s an endless amount of reading you could do — it can go on forever and you can lose months disappearing down one wormhole after another.

I used to tell students to try to balance input and output — try to spend about half of your time on each. If no one is setting a deadline or deliverable for you, set these yourself. And if you are getting close to a deadline and are still collecting stuff, maybe it’s time to start generating some output. Get to a “minimum viable product”, then, if you have time before the deadline, you can add to and improve it. This goes for artworks and presentations as well as writing.

So for these articles, I told myself I would only use my existing notes and previous lectures as source material, and not do any new reading. Later, I might add to them as new info comes in. Or add new articles.

Systems theories like cybernetics have been a core topic for me over the past decade. I’ve done lots of lectures, seminars and writings, so I’ve got lots of notes. Even as an overview that just touches on a few key topics, I hope you found this as rich as I did in going through it all again.