When robots rediscover biology

An interview with Mark Tilden

I interviewed Mark Tilden in 2000, when he was creating self-sustaining robotic creatures as part of his research in the Physics Division of the U.S. Los Alamos National Lab (yes, where the first atom bomb was built). His devices — from tiny, insect-like bugs to towering, walking bipeds — do not contain computers, only minimal electronics. They’re based on principles of biology, and designed to survive for long periods of time without human help, in extreme environments such as minefields and outer space. This interview took place at an exhibition of his robots at the Bradbury Science Museum in Los Alamos, New Mexico.

Tilden:

I study complexity maps of automatic intelligence. That is, trying to find a different way of making things that move around the world, that think in a very different way than algorithmic responses.

So no programming, no computers. Just use ’em, and abuse ’em.

We started out years ago on the assumption that, rather than try to evolve a robot from some sort of intelligence, which always seems to have failed, we would evolve robots by themselves, starting out with the very simplest of devices and then working our way up, to see if we could eventually try and build humanoid-like devices that would interact with us on our own level, all the time keeping them minimal. That is, whenever something got too complicated, we basically would pull back and go somewhere else.

The devices wound up being very simple and very modular. They were devices like this — clusters of little neuron arrays that would power themselves up, and what we do is analyze the different behaviors we get out of them. It was complicated.

What was very interesting, though, is that when we took these patterns and mapped them onto legs, like onto this little walking creature here, they would start to move. You can see where it has gone by looking at its path, in the tracks left over in the sand.

This is pretty typical of the sort of thing that we do — build a variety of devices, and then leave them powered on for periods of—not days, until the batteries die—but for years, or until they undergo catastrophic damage. This little device has been trapped inside this container for about six to eight months.

We started out with little, amœboid-like devices. We worked our way up, through a variety of simple little rolling devices, to walkers, and head mechanisms which were able to see themselves.

This is one of the world’s smallest autonomous, walking robots. It was, unfortunately, dropped by one of my secretaries, so it’ll never work. But it’s made out of Snoopy watches by Lorus.

This is one of our latest prototypes in satellite mechanisms, a device that, when it powers on, builds up a charge through these solar cells, and then starts tracking bright things in its immediate environment.

One of the cool things, if you go down here below, you’ll see all the variations on the theme that we worked on. The gold-plated ones are essentially experimental prototypes that allowed us to explore various forms of symmetric control structures. The older ones — the ones that are basically hand-built — are the ones that are first-generation devices, and they’re still in operation, although we’ve had to glue them down to the table here, so they wouldn’t wander around in the exhibit.

This larger one right here was one of the prototypes for our de-mining project that was sending robots out into mine fields, to see if they could find mines, which would save human beings’ lives.

That’s your brain on legs.

Bio-inspired

The whole thing we’ve found out with this technology was not just how many varieties you can get, but how quick it was. Typically, building a robot has always taken about 18 months of manpower and programming, and we’re able to reduce that complexity characteristic down to less than 20 hours of work, and 40 hours of something you’d never done before.

So as a consequence, we have over 500 robots. So many, in fact, that we’ve had to stuff them into museums across the country, because they were dripping off of the shelves in our laboratory, and kids kept breaking in to see if they could get a look at the bugs. That was against laboratory policy, so unfortunately we had to start doing some other things to compensate for it.

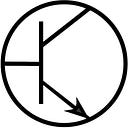

The history of the devices is based upon a rather interesting science. All digital electronics, and all modern electronics, are based upon what we call “Manhattan logic.” For example, if you take a look at the history of computing, you have slide rules, abacuses, digital devices. These devices are all based upon Manhattan-like structures. That is, they’re all 90 degrees to one another. If you ever look at the city of Manhattan from the air, you realize that all electronics is based upon 90 degree angles.

But if you take a look at biology, it’s always based upon hexagonal angles — that is, the Law of Three. And what we found, which was very interesting, was that when we started building and analyzing our devices, that’s exactly the sort of pattern that wound up emerging — the best possible qualifications of ability for devices.

By working in groups of three rather than two, you wind up getting a completely different sort of decision variable. That is, something that can make a decision by itself.

Why does it do that? When you have, say, two digital mechanisms that are sort of talking back and forth to one another, they can either agree or not agree. But if you have three, then two can basically gang up on the other. So there must be a decision made by at least two, so a majority always rules.
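The majority argument can be checked with a few lines of Python. This is only an illustrative sketch of the voting logic described above, not a model of the nervous-net circuitry; the function name `decide` and the binary 0/1 states are assumptions made for the example.

```python
from collections import Counter
from itertools import product

def decide(votes):
    """Return the value held by a strict majority of voters, or None on a tie."""
    value, count = Counter(votes).most_common(1)[0]
    return value if count * 2 > len(votes) else None

# Enumerate every combination of binary states for two and for three units.
pair_ties = [v for v in product([0, 1], repeat=2) if decide(v) is None]
triple_ties = [v for v in product([0, 1], repeat=3) if decide(v) is None]

print(pair_ties)    # [(0, 1), (1, 0)]  two units can deadlock
print(triple_ties)  # []                three units always yield a majority
```

With two units, half of the possible states end in disagreement with no way to break the tie. With three, every state has at least two units on the same side.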

And if those things are subject to all sorts of different characteristics, you can see the transition between hand-built devices like this — this is my home robot window cleaner which worked for seven years, before it just wore itself out.

Then we work our way to the new generation of hexagonal devices. This one here shows the nature of the new generation of controllers — small, robust, extremely tightly connected. They’re also extremely cheap and minimal, the way they work. Individual ridges are the transition points where information actually flows across.

The bottom line is, the device winds up thinking like a biologic. And because of that, we can actually assign to it abilities that you would not normally associate with a digital device, outside of algorithmic extremes.

The point is, you can make devices that you can throw onto a giant sand dune, and know that it will walk toward the camera…. It freaks out a lot of people when I deploy my devices, and I just sit there with my arms crossed while we watch the robot do its thing, without any form of radio control.

This shows the new generation of microboards that we worked with several years ago, reducing these controllers down to something that’s barely larger than a stamp. And this is set up into all different arrays, or broken up into some of the larger walking and tumbling creatures we did under a DARPA grant, trying to come up with devices that were extremely capable of negotiating complex worlds all by themselves.

This is the world’s only operable HAL-9000. The device is very interesting, because one of the things that we found that was an emergent property of nervous networks is that you can use the motor as the measure. That is, the motor (the thing that actually causes the activation, or the thing that is actually creating the appropriate illumination, in this case) is also the thing that is responding to the illumination, to the signal that we’re giving it.

This device is supposed to be talking. We built it as a special effect for the HAL-9000 birthday party in 1997.

The neat thing that we’ve found is that there are very few things that we can’t build with this technology, on any functional scale, from extremely small to very large.

This was the classic example. This was the only meter-long robot ever to go out onto a test range, for military purposes. The idea was to use these magnetic detectors, and these things, to be able to negotiate its way. The point was for it to actually step on a mine, get a leg blown off, and then pull itself around like robot roadkill as it moved around.

That was the original intent. We did some tests, and it worked very well. But unfortunately, the soldiers didn’t like it, because nobody likes to think that a machine could possibly take their job. So currently it’s nailed to the wall, as the Last Temptation of Robby.

We hope to be able to restore this idea sometime in the future, when we can actually get machines out there saving lives, rather than trying to pretend that they’re trying to take one.

It’s a very important thing, because right now there’s a fantastic robot fear. I mean, here is a robot that was designed to save lives; right behind it is one designed to take cities [atom bomb display]. So, you can see the dichotomy between the two different types of robotics that we like to get into.

Living with robots that require new batteries or new programming is always a real problem. But living with robots that do what they do because they must do it — that’s much easier to work with, and all I have to do is remember to put my floor-cleaning robots in the dishwasher once a year to get the gunge off.

Q: Would you say that these robots have some type of creativity?

A: As yet, we have not seen that. What we have seen are devices which we call the queens, which are one of our nervous net controllers, embedded into another form of nervous net controller. And what that allows us to do is make devices that are, in fact, capable of learning, that are subject to moods.

And it’s really quite fascinating. You have a device that, when you power it on, and you treat it carefully, it will actually realize, “Oh, human equals good.” And it’ll follow you around. Power it off, power it on, treat it like a grad student — beat it around, push it away, and it will quickly learn, “human equals bad.” And it will run away into the dark. And as you chase it, it will run away. It’s actually learned from its own experience.

It might take it some time. Is that creative? No, it’s nothing more than a sample survival response at a higher-order level.

We would like to build devices that do show a degree of creativity. But the one thing that we’re pleased with right now is that you can build a device that negotiates a complex world, has only 12 transistors — that’s not even enough to build a good radio. The queen I was just telling you about was 24 transistors, which is barely enough to build a radio.

We’d like to build higher-order ones, things that actually have functional heads and arms that can negotiate extremely complex worlds, and then see just how close they come to mimicking the sort of facilities that we find in simian and, of course, anthropomorphically correct creatures.

Then, on top of that, we might put in a computer that says, “What’s going on here?” Whether or not they’ll be creative, we’re not quite sure. I never make any claims unless I have a robot that proves my point, and I find that very necessary. I am not a philosopher, I’m an experimentalist. I build; if it works, then I’ll show you. If you don’t believe me, I’m on syndicated reruns from Hell.

Q: You’ve built some that can replicate themselves, or at least rebuild themselves.

A: Yes, that’s right. That was done by one of my students. I think that’s a very dangerous thing: any sort of device that has to rebuild itself is based upon a crystalline entity. Unfortunately, that makes them supercritical. If one bit or one cog or one gear falls out of place, the system falls apart. And that’s something which I’m very much against. A computer is a single bit-flip or a wire-cut away from immobility.

We’re looking at biological systems that can take massive damage, and that’s a very important thing. You want a device that’s going to last for ten or twenty years, you want to know that if it loses, say, half its brain, it’ll still be able to, you know, fetch you a beer. That sort of reliability is what you’re going to demand in the future, especially when we start using these things not just for de-mining problems, like finding unexploded bombs, but finding things such as nasty bits of radioactive waste from the next Chernobyl.

Q: There’s a scary aspect of this: computers that can replicate themselves. The Terminator scenario, where the computers are competing with humans.

A: Many, many people talk about the self-replication aspect, but I’ll tell you right now, the easiest way for a robot to build another robot is to get a human being to do that for it. The real thing is that we’re built from carbon. Everything on this planet is built from carbon, because it has the lower energy valence that allows it to do so.

Robots can’t build themselves, because the heat and the energies required are just so incredible that you would essentially melt the factory, or melt the egg, or melt the poor little robot womb, before you could get an appropriate birth.

The bottom line is that, for the foreseeable future, we will make robots, and then they’ll go out, and as they die systematically, ultimately there will be fewer robots in the world. Believe me, if there was a way to get robots to automatically reproduce themselves, somebody would have gotten the Nobel Prize by now.

There’s a big fear right now. My friend Bill Joy wrote this article which was basically, oh, “Attack of the Killer Robots.” At a recent major exhibit I did in London, I held up that article and said, “Bill, get a life.” The fact is, even if the machines could reproduce themselves, even if they were aware of humans, what on Earth makes you think that they have the motivation to try and destroy mankind?

We found from experience that, if you’re a robot, and you get up in the morning, and you have no gender — so you don’t require sex — and you have lots of light so you don’t need food, and you have lots of floor, why the Hell do you have to move at all? We found that our biggest problem was motivating devices to move, to give them an appropriate survival gradient so they would. Believe me, no matter how smart we make our devices, we will never have to worry that our toaster is making plots against us. Unless, of course, it’s being run by Microsoft.

Q: Let’s talk nuts and bolts a little. What are the rules that drive these things? It’s not Isaac Asimov’s robot rules, right?

A: No. Asimov’s rules were essentially: protect humans, obey humans, then look after yourself. During the 1980s I built a variety of robots like that, and I wound up with robots that were so scared about doing anything that could possibly displease human beings, that they just sat in the middle of the floor and vibrated. My cat had a field day with that.

Our rules are basic rules of survival: feed thy ass, move thy ass, and look for better real estate. (With another rule — get some ass — you’ve got the basic rules for a good weekend in Las Vegas.) The whole point is that you give a device the essential necessities to survive under duress, and then you domesticate it later into actually doing a task for you. The same way that you stick a carrot in front of an ox to get it to pull a plow, you can stick light in front of a solar-powered robot to get it to clean your floors.

That’s a simple analogy, but it’s very accurate in this case. It cannot be aware of anything greater than itself. Therefore, we only make certain that it is only aware of itself, its particular needs. And then we modify those for a particular task.

Q: There’s no central processor that’s driving these. How exactly does the processing work? It’s distributed across all the parts, right?

A: Exactly right. It’s one of the biggest things that we’ve had to do, to try and figure out the different sorts of patterns and nervous systems that allow for distributed processing.

Now, in the old days, the real problem with computers was the thing we call the complexity barrier. That is, for a linear increase in ability, an exponential increase in cost and complexity was required. Anybody who’s ever tried to buy a CD player finds that the price goes from $100 to $1000 when you add just one more button. That’s the problem with digital electronics — and of course, conventional digital applications.

How is it, then, that we can essentially grow, from really small babies, up to creatures with the sort of facilities we have now, and not have to worry about the fact that it costs us exponentially more, every time we learn to pick our nose without punching our lights out? A lot of science fiction people seem to think, “Eek! It’s the brain.” No — a human being is everything that you are.

I’ll give you a classic example. My friend Mark Dalton lost his finger when he was very young, in a chopping accident. It’s really funny watching him try to scratch his nose, because he always misses the first few times. And then finally he goes to the middle finger. The thing is, after 30 years, he still thinks there’s a finger there. Everything that we are is everything that we are. And that’s a very important thing. We’re not just some sort of computer, driving some sort of body, like a person driving a car. We are, in fact, a distributed, homogeneous, connected system.

The best way to feel this is: Have you ever been in “the zone” in a game of soccer or a game of tennis, or something? All the body is working together, and you are just up there. It’s not because the brain has done all the appropriate calculations, it’s because the body has fallen into it.

When do you fall into that zone? It’s when you are so comfortable with everything that you are that you can optimize your particular applicability. That’s something where every system in your body, from your toes all the way up to the end of your pineal gland, knows exactly what’s going on. And it’s a fantastic and exciting thing.

And that’s actually something else. Remember when I was talking about motivation? What does it take to motivate a complex, distributed controller? It’s when things work together smoothly, when there are no conflicts between systems.

This is why learning something is such a bother for us. If I had to go out there and try and learn tennis, an instructor would be saying, “Oh, don’t hit it so hard, step back,” you know, “Relax!” Don’t you hate it when your teacher says, “Above all, relax!”

What she’s trying to do is tell you that that is, in fact, your goal. Your goal is to be so smooth with a particular attribute of playing a game that the barriers between the individual systems that allow you to play that game — that is, between your hands, your arms, your body, your legs, your spine, even the balance mechanism in your head — wind up being so beautifully synchronous with one another that all of a sudden you wind up getting an amazing high-efficiency device.

And that is something that is a very interesting spec, because if you look at everything that interests us, it’s always because they fall into our particular motivation gradient. So, why distributed control? You now have devices which can have a motivation to get up in the morning and start cleaning the floors, as compared to just being an algorithm, which is being forced into them by some sort of computer.

Q: You mentioned CD players. Tell me about some of the parts that go into these things. These are not expensive devices, right?

A: Some of the latest ones are. What’s funny is that we originally got started by building things on dead Sony Walkmans, calculators, VCRs. This one is built entirely out of dead Walkmans, recharge mechanisms from old Sun computers, and these wires are laser printer cartridge door wires. The solar cell is from dead calculators. We found that the flotsam and jetsam of modern technology give you a lot of the technology you need to get started building these things.

We run something called the International BEAM Robot Games, and we have hundreds of web sites where we promote these very things. It’s basically, “Here’s a simple circuit. It’s the engine. Dead Walkman, dead calculator — build something that moves.” That’s the first, protozoic machine. Work up from there, and there are some pictures, examples, plans, kits, and the science that goes along with the nature of distributed, synchronous, efficient control.

We started out with these things, and they were very cheap. In fact, many people would actually pay me to take the junk off their hands. Dead Walkmans and CD players turn out to be the best possible source of appropriate parts for these things. That and a screwdriver gives you all the bits you need.

When we started going up to much more sophisticated work, doing some development and research work for the U.S. government, we found out what the actual cost of these things really was. The contradiction is, it’s easier and cheaper to buy a $100 Walkman and tear the motor out, than to buy the motor itself, which is close to $150 and a six-week wait from Japan.

Q: How do the legs work? How do these things move?

A: What we found, originally, was that the signals that are generated by these things wind up being aperiodic. In other words, they move backwards and forwards. Well, if you have these things driving wheels, it creates machines that move backwards, forwards, backwards, forwards. But the problem with wheels is that there’s a very limited ability and dimension. That is, they can’t travel in all possible directions at the same time.

And anybody who’s trapped in a wheelchair will recognize this. When you have legs, you have the power of translational motion: you can travel trapezoidally, sideways, backward, you can spin around in place. This is the sort of thing that you don’t think that you’ve got, until you do something like, say, walk down a hallway, and there’s someone walking toward you, and all of a sudden, you both realize that you’re doing this funny little dance. Now, of course, in a wheelchair, that would mean you would have a head-on collision. Being able to negotiate and translate has allowed us this degree of flexibility that we require.

When these things needed better flexibility, giving them legs meant that, all of a sudden, they could be moving this way, but they could be travelling that way. That turns out to be very important — to be able to see what it is that you want, and then be able to move around things that are immediately localized to it.

Classic example we use is: You see a friend in a bar. You know where she is, you see her all the time, but you have to negotiate through a bar, and all the people who are there — a lot of those are moving objects. That’s an incredibly sophisticated thing.

Think about the Law of the Mall. Why is it that cars on a highway have to stay a car-length away from one another, otherwise you get a crash, but in a mall, people can miss each other by a matter of millimeters, and we don’t even think anything about it? It’s because of the complexity and the ability that we have in negotiating ourselves. If cars could do this (translational control), then freeway accidents would be a thing of the past.

Q: Some of your devices work together, right?

A: Yes, we have cooperative robots, and we’ve been working on a variety of self-assembling and self-modifying machines for years.

The control mechanisms that allow these things internally can also be separated by a significant distance. So long as they have some sort of knowledge of one another, they fall into synchronous patterns, and it’s really quite interesting.

When you look at, say, people walking down a street, how can you tell if they’re all walking at the same speed, who is associated with whom? It’s the people whose legs are in step with one another. Those are the buddies who are coming out of the same store. Couples always walk in step.

Look at people who are not in step with one another, and you’ll realize that, even though they’re travelling the same speed, they’re not synchronous, so therefore they’re not related. It’s a very strange relationship, but it does actually happen.
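The way two loosely connected walkers drift into step can be illustrated with a standard textbook abstraction: two phase oscillators with slightly different natural rhythms, each nudged toward the other (a Kuramoto-style model). This is a swapped-in stand-in chosen for clarity, not a description of the nervous-net controllers; the frequencies, starting phases, and coupling strengths below are arbitrary assumptions.

```python
import math

def phase_gap(coupling, steps=20000, dt=0.001):
    """Integrate two coupled phase oscillators; return the final phase difference (rad)."""
    w1 = 2.0 * math.pi * 1.0   # assumed natural frequency of walker 1 (rad/s)
    w2 = 2.0 * math.pi * 1.1   # walker 2 steps about 10% faster
    a, b = 0.0, 2.0            # arbitrary starting phases (rad)
    for _ in range(steps):
        # Each oscillator is pulled toward the other's phase, scaled by `coupling`.
        da = w1 + coupling * math.sin(b - a)
        db = w2 + coupling * math.sin(a - b)
        a += da * dt
        b += db * dt
    return b - a

print(round(phase_gap(0.0), 2))  # no knowledge of each other: the gap keeps growing
print(round(phase_gap(2.0), 2))  # coupled: the gap settles to a small constant offset
```

With no coupling, the faster walker simply pulls ahead, so the two are out of step even though their speeds are nearly equal. With coupling, the phase difference locks to a fixed value and the pair stays in step.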

Q: All of these things in here are actually alive, right?

A: It all depends. We’ve had to glue some of them down, because even though they’re broken and dead, they might still twitch themselves off into the sand. So the display ones are held down by glue to keep them from going. This one right here, though, is alive. It powers on every now and then. But because the incidence of light is orthogonal to the solar cells, it’s building up a charge, and moves only very infrequently.

But the cool thing is that you can place them inside an environment like this, and over a period of six or seven months, they’ll be just fine.

Q: What is your home life like? You’ve got some of these things at home, right?

A: Yeah, I’ve got a few. The original idea was to build robots that would, essentially, keep my bachelor pad clean. My original robots wound up eating toilet paper, which wasn’t very good for us, and got pretty annoying, because we found out that, no matter what sort of program you gave it, you couldn’t convince a machine that the cat food was not just something left on the floor, but was meant for the cat.

So in 1989, I built my very first home-cleaning robot, which was about the size of a pack of cigarettes. My cat immediately destroyed it. But that led me to start building some more. Now I have about 40 or 50 devices, everything from wall cleaners, electric wallpaper, just things that I can live with.

And this is really interesting. A lot of people have said, “It’s the future, where’s my robot?” Well, the fact is, it’s not something that you would expect. The future robot that you will live with is not going to be a wisecracking robot like the Jetsons’ robot maid. Instead, it’s going to be things that are small, and invisible, and they’ll live in your house, and you won’t notice them until they don’t work.

I’ve been living with devices for ten years, and I don’t even pay any attention to my machines unless they’re not working. I just pick them up, put them in the dishwasher once a year, and then I take them out, and I just throw them around.

That’s where I’d like everybody else to be. My vision of the future of robotics is not some sort of R2D2s or Commander Datas walking around, basically fetching you a beer. But something where, when you move into a new house, people have left behind the robots in the same way that they’ve left behind the lightbulbs. Not because they didn’t notice them, but because they were such an implicit part of the house — you wouldn’t want to take them away.

And so the house of the future will be a place where your windows are clean, your floors are clean, your cockroaches are nervous, you’ve got standard illumination, you don’t have any complex wires, your remote control always has the power it requires. Your house just basically cleans itself. And the longer you’re away, the cleaner it gets. That’s a feature, not a bug.

Q: The robots are getting smaller, right?

A: Not really smaller. What happens is, there’s a minimum size beyond which you can actually do these sorts of things. What we’re trying to do is make them more efficient, and the smaller you are, generally the more efficient you are, up to a minimum scale.

We’d love to start working on colony devices, with devices, say, a millimeter or so across. So you’d actually have intelligent dust. The medical applications for such a thing are fantastic. If you could swallow a pill that could do surgery — swallow a pill filled with robots, it does surgery on your body, and then you piss it away. You don’t have to worry about it taking over your body, because all of the robots that go in are the robots that come out. And that sort of thing is extremely popular right now. But unfortunately, I’m the only person in the world who has the controllers small enough to put in something that small.

There are some amazing advantages to nervous net technologies. You can’t reduce a computer down to the size of a human cell and expect it to run any sort of operating system — just imagine the size of the keyboard it would require. But the fact is, you can build these devices which will, hopefully, get into the body, and do something intelligent.

Unfortunately, people are just held back by the inertia of the 20th century perception of robots. We still believe in Isaac Asimov, we still see George Lucas come up with a new movie every three years.

I’ll tell you from experience: R2D2 — cute little trash can in the movie for two hours. But living with a little, bleeping box for several weeks? Man, you’d claw out the speaker with your fingernails — it’s so annoying.

This is the reality: When you have to live with something, it’s significantly different than when you have to build something that looks good for TV. And I’ve built for TV. The thing about building for TV is that they don’t give a damn if it works for real, they only care that it looks good enough until Arnold Schwarzenegger blows it away. What type of future is that for modern robotics?

Most people get into the field of robotics because they saw an amazing puppet in a movie, and they want to build Johnny 5, they want to build C3PO, they want to build a Frankenstein monster. But when you look at it, you’ve got to remember one thing: Isaac Asimov was a fiction writer. All science fiction robots that you’ve ever seen on TV are puppets, Jim Henson-style special effects. And if you ever see a robot with a cable going off of it to a curtain, you are looking at a Wizard of Oz demonstration: “Pay no attention to that supercomputer behind the curtain, I am the great and powerful roboticist of Oz!”

It’s not until you can actually hold something and see that it is moving by itself, for itself, that you can actually start to make an assumption that it has the appropriate competence to do some work for you in the world.

Q: So, are these things expanding our definition of intelligence?

A: They’re not expanding our definition of intelligence. It’s actually a major controversy in the fields of artificial intelligence and general cognitive studies. Artificial intelligence is based upon the concept that thought is rational, that human beings are innately rational. Well, if you’ve ever dated some of the people I’ve dated, you’ll suddenly realize what a fallacy that is!

The thing is, there are so many different variations on the theme. We’re under the assumption — and this is backed up by some empirical evidence — that we are irrational creatures, who basically have irrational centers to us. And that is what allows us our degree of creativity, and also allows us one of our fundamental definitions of consciousness.

What is one of the fundamental definitions of the rights of a conscious creature? That is the ability to be able to do what it needs to do or what it wants to do. That’s essentially what satisfies the basic tenet of life. It’s not enough to just be alive, it’s only enough to be alive the way that you want to be alive — Jean-Paul Sartre, 1911.

Then extend that to a conscious thing: Build a machine that wants to do work for you, that is optimized so that, within its little world, it wants to live in a world which, basically, makes you happy. And you can sell it off the shelf. Now that might be a future of robotics, rather than something that is waiting for you to program it — “Aw geez, honey, do I have to program the computer again?” What sort of future is that?

Kids already complain, “Do I have to empty the dishwasher now?” Labor-saving devices don’t. The robots that are going to live in our real world are going to be different from what you normally expect. And it all starts with a paradigm shift in the way that we think about thinking. And that is, we are not rational creatures.

Q: How does the concept of memory work? How do these things have memory?

A: They don’t have memory. What they have are transition states between complex de-orbits. When you have a nonlinear oscillator, it sets up a very complicated orbit.

Say, rolling a marble around on a sheet. And as it rolls, it never rolls to the same place twice. Now you lift up one end of that sheet, and it’ll still roll around, but it will roll in a very different place. But there’s a transition time between the orbit it has on one level sheet and another that it has on a slightly tilted sheet. That transition time, by coincidence, looks like memory, because if that sheet goes down before it has reached the new orbit, it will quickly fall back into the previous orbit.
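The marble picture can be illustrated with a toy model. The sketch below is not Tilden's circuitry, and everything in it is an assumption made for illustration: the equation `dr/dt = r * (mu - r**2)` (the radial part of a standard Hopf-type oscillator), the parameter names `mu_flat` and `mu_tilted`, and the hypothetical helper `simulate`. It also uses a simple regular orbit rather than a chaotic one. Here `mu` stands in for the tilt of the sheet, and the orbit settles at radius `sqrt(mu)`. If the tilt is removed before the new orbit is reached, the system drops back to the old orbit, which is the behavior that looks like memory.

```python
import math


def simulate(tilt_duration, mu_flat=1.0, mu_tilted=4.0,
             dt=0.001, t_total=20.0, t_tilt_start=5.0):
    """Integrate dr/dt = r * (mu - r**2) with forward Euler.

    r is the orbit radius; its stable value is sqrt(mu).
    mu is switched from mu_flat to mu_tilted for tilt_duration
    seconds (the "lifted sheet"), then switched back.
    Returns (peak radius reached, final radius).
    """
    r = math.sqrt(mu_flat)          # start on the flat-sheet orbit
    peak = r
    steps = int(t_total / dt)
    for i in range(steps):
        t = i * dt
        tilted = t_tilt_start <= t < t_tilt_start + tilt_duration
        mu = mu_tilted if tilted else mu_flat
        r += dt * r * (mu - r * r)  # one Euler step
        peak = max(peak, r)
    return peak, r


if __name__ == "__main__":
    for duration in (0.05, 3.0):
        peak, final = simulate(duration)
        print(f"tilt for {duration:>4} s: peak radius {peak:.3f}, "
              f"final radius {final:.3f}")
```

With a brief tilt the radius only starts to drift toward the new orbit and then returns; with a long tilt it settles on the new orbit first and has to make the full transition back. Nothing is stored anywhere in this model; the only "memory" is the time the state takes to move between orbits.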

This is our big problem. In 1996, when I first characterized this phenomenon, I thought it must be some sort of Hebbian memory; it must be some sort of learning ability. But here it is five years later, and a lot of math under the bridge, and we cannot mathematically tie in the stabilization of nonlinear chaotic orbits to anything approximating Hebbian self-organization characteristics.

The bottom line is, it’s not memory in the conventional sense, and we cannot prove it to be so, unless we find some major mathematician who can take Nth order derivative nonlinear differential variables and convert them down to classic Hebbian neural network Hopfield translational states. That’s like trying to mix apples and oranges and get chocolate sauce — it just does not work.

This is what is so interesting. It’s so coincident, and it’s so convenient a behavior. I mean, it’s obvious. You set something to walk, it learns to walk on carpet, then it walks on linoleum, and when it goes back to walking on carpet, it basically “remembers” how to walk on carpet. It doesn’t have to learn again. That’s just so coincident. I can’t help but think that there must be something there.

But at the same time, we’re not certain if it is a new phenomenon, or it’s an old phenomenon, or it’s the same phenomenon that biology uses. The fact is, until we know, we can’t be certain.

But there is a correlation. Human brains, for example. Human beings don’t remember anything. What I mean by that is that we don’t remember things, we construct things. When I say, “a horse running in a blue field covered in pink polka dots,” you actually create that image in your head.

But there’s a dichotomy there. Is it the field that has pink polka dots, or is it the horse? Most people assign it to the horse. What you are actually doing is creating those things right inside your mind. You made a vector decision during the statement of that sentence that allows you to recreate that inside your head by construction.

Now, chances are, you’ve never seen something stupid like that, even on TV. But the fact is, you’ve probably got a very accurate rendition of that stupid, polka-dotted horse right now. The thing is, did you remember it? No, you created it.

And that’s a very interesting analogy, because if your memory is, in fact, an incredible cluster of complex orbits set up in your head, then what happens is that the difference between those orbits and the things that are created — as compared to those that are remembered — is very, very shallow. What’s the difference between something that is created and remembered in complex orbits? Just another orbit.

And the fact is, this might be exactly what gives us our ability to create — why human beings can create so easily, whereas machines find it so very hard.

Are these robots completely analog, or is there a digital aspect to them?

We try and emphasize analog mechanisms. We take digital electronics, and force them into an analog mode. That gives us backward compatibility, should we wish to put a digital controller on them.

And in fact, for a lot of our sponsors, that’s exactly what they want. They have some sort of a device, or a computer which gives a certain response they want. But they don’t know how to make it so that it can actually work in the real world. So they come to us, we say, “Okay, we’ll whip up the interface. Here’s the interface from your computer, the interface talks to the nervous net, the nervous net talks to the body, the body negotiates the world.” So there is, in fact, a digital aspect.

We’ve never turned our back on this. In fact, what we do is called nervous network engineering.

But when we do our research, my main interest is trying to find out what is the most possible arrays of competent behavior you can get from the smallest possible arrays of functional controllers. If I can show you something that, in 24 transistors, basically behaves like a little Young Frankenstein, that’s something small enough for you to understand. It’s not something like, “Oh my goodness, I have to memorize everything from the latest Windows 2000,” it’s something that you can see.

And strangely enough, when you see these things, and the blinking lights move, it’s almost impossible to analyze. And when we take the data out, it’s almost impossible to analyze. But when you see the legs move, even children can understand it. And this has led to what we call our new generation of robotics standards for competence.

The Turing Test is a test devised by Alan Turing, which says that if a computer can convince you that it is alive through questions and answers, then it has satisfied the Turing Test. We came up with something called the “Purring Test,” which is that if a robot is capable of delivering enough real-world competence to fake out a cat, then you know that you’re on your way to building a truly autonomous life form.

I’m a little surprised that you don’t work down the road at the Santa Fe Institute [center of the artificial life movement].

That was, in fact, my dream. In 1993 I did something very famous, and somebody said, “You ought to come down to the states” [from Canada]. So I loaded up the truck and I moved here. And I was really hoping that I would start working for the people at Santa Fe Institute. But what happened was, they hauled me in and they showed me some of their artificial life simulations. They showed me Swarm, and I looked at all these things, and they said, “Okay, build that. Build robots that use Swarm.”

And I looked at Swarm and I suddenly realized everything they used on the computer model was weightless rope, frictionless pulleys, infinite power sources. All the mathematical idealisms that work very well inside a computer couldn’t work in the real world to save their lives. There are no straight lines in the real world, but there are, forever, inside computers.

So I turned to these people like Scotty in Star Trek, and said, “I’m sorry, Captain, I cannot change the laws of physics!” They thought I was just being obstinate.

Take a look at all of the work done at the Santa Fe Institute, and you’ll find out that it can only exist inside computers. And when they say that it might have relationships to what happens in the real world, start analyzing it from a physical point of view, and you suddenly realize that they just want bigger computers because they think that’ll make it work better.

But reality works on very different rules. Inside a computer is an extremely regular environment, that the Santa Fe Institute tries to push into complex spaces. The real world is a really complex environment that [SFI] tries to push toward regularity. The problem is, you can’t get Reality for Windows. Even if you could, you wouldn’t want to, and even if you did, the computer would be so large that you’d have to terraform the moon just to be able to handle the OS.

We cannot overcome that sort of irrationality. Just because you see something bopping about on a computer screen doesn’t mean you can immediately render it and make it work in the toy store. This has been tried, and it always fails.

SFI is doing a lot of really great work. They have basically changed the face of complexity science. But the fact is that as long as they are trapped behind their glass screens — computer screens — I’m afraid that they won’t have any need of my services.

It sort of makes sense that you work for the government, because there are a lot of military and government applications for what you do.

No, the fact is that there aren’t very many military or government applications. In fact, I’d really like to get out. But the problem is that this is a very new concept in robotics, and unfortunately it’s just not popular. I’ve stood up in front of entire audiences with machines that don’t have a single computer inside them, and they kicked butt. Sorry, they kicked ro-butt!

I used to go around to an awful lot of international competitions — Micromouse and all-terrain and walking vehicles and stuff like that — and I just put my machines down and they just stomp on M.I.T.’s head.

And after a while I’d discuss it, and I suddenly realized that I wasn’t gaining too much popularity. You show up with a device which essentially does things by itself, and you just sit there with your hands in your pockets. It just basically gets a lot of people pissed off. Because when somebody has spent the past ten years building a six-legged bug and it barely gets off the ground, and you built something out of a couple of dead Walkmans and it basically just kicked them off of the sumo stage, it tends to make people angry, because they love their computers. I love my computer, but I know that I’ll never give it legs; it can’t use ‘em!

You mentioned that one of these things interacted with your cat. Any other interactions with real living beings besides humans?

This machine right here — you can probably still see it. There are scratches just behind its neck. The first time we ever demonstrated it, at a friend’s house, this thing was static. But when it powers on, it comes on in this incredible, walking spider sort of way.

My friend has three giant husky dogs, and the first time they ever saw this thing move, they went nuts. I was able to grab one, my friend was able to grab another, but we weren’t able to grab the third, who was basically chewing on its neck.

And it’s amazing, because when it was off, they didn’t pay any attention to it. But when it started to move, all of a sudden it was a threat. What did this thing have that essentially caused them to threaten it? These are dogs that have had radio-controlled cars run over their legs, you know, like multiple times, by young boys. Why did this thing basically show a threat to them?

We don’t know, but that just shows the sort of degree of what we call the Purring Test. When something moves in an animal-like way, moves in the sort of spectrum that basically makes you think that it is not a predictable device like a wind-up toy, right? Then all of a sudden it makes other animals recognize that sort of thing immediately. And it’s very strange.

We can see it ourselves, every time we see a bad special effect in a movie. Look at the dinosaurs in Jurassic Park. When it first came out, it was amazing. But now look at it and you suddenly realize that the animals really were stiff and periodic, and obviously they matched the human beings who were doing all the motions for them.

The other extreme is R2D2, which is a creature with no ability. But because it was so alien, we were able to fill in our own expectations of how it would behave. And you get an awful lot of these, when you see people who sort of talk through their puppies. You know, like “Oh, Mr. Foo-Foo doesn’t like that.” Not that Mr. Foo-Foo has any opinion. He’s just overfed, stuffed and spoiled. Like a lot of children I can mention.

The thing is that that degree of complexity is something that our devices do automatically. We can’t quantify it at this time. But we do know where it comes from. We also know that there are some very interesting reactions that we’ve seen from animals and young children, which makes us think that there may be a bit more of a future for it — it’s a lot more acceptable.

You’re teaching this stuff to students, right?

As much as we can. We run the International BEAM Robot Games. It’s now on six continents, and we have about 20 or 30 workshops a year. And always one really big, official robot games. The winners of all the smaller games go there and, basically, sort of beat each other up on national TV, and that usually works very well.

The rules for the game are very simple: One: your robot must do whatever it does by itself; and two: cheating is encouraged. Putting people in that sort of role, we’ve seen things like creative uses of shaving cream — as both propulsion and weapon, which is just brilliant. We’ve seen devices using smoke bombs. We’ve seen a kid who came out of nowhere, and built a beautiful, high-quality walking machine out of a broken umbrella. I suddenly realized — what a beautiful place to get the uniform, complex, lightweight legs that you need for a walking machine — broken umbrellas. How many of them are out there? That’s the sort of brilliance. You look at that thing and say, “Give that kid a special award.” And this is what we do, because this show is designed to give awards to people who personally build the devices.

There are many competitions out there where you get 20 or 30 kids who work on a solar racer, or work on some sort of basketball-playing device. But when you actually go through all the kids, and all the cheerleaders, and all the promo people, you find that there’s usually one person in the back — that’s the person who did the building. And that’s the person who knows how to work with his hands but has no idea how to work with a camera. And that’s the person I want out front. Because you get them to talk about their stuff, and before long, all of a sudden they’re not standing in front of their machine, they’re standing in front of the camera, telling you exactly what their opinions are. And this is something I think is very necessary.

The 20th century was when the engineer was always in the background, while the guys in suits and nice hair stood out front. Now, I’m saying, the engineers have got to stand up and talk themselves. If you’ve got an idea, you’ve got to say, you’ve got to show, and you’ve got to do. No more philosophers, no more speculation. “One day the robots will eat our underwear!” No. But I know that because I’ve got ten years of experience with it.

The only thing I have to worry about is when my mother visits. Because then I have to pick up all of my robots and put them carefully away. Because she’s seen that episode of Doctor Who, and she knows that the robots will rise up against her in the middle of the night. No. And no matter how much I talk to her, I can’t convince her otherwise.

But I do know that in the real world, ultimately, kids are going to see these things. And the first time they see them, they’re going to play with them, try to destroy them. But after a while, their rooms will be clean, and they won’t even notice. And they’ll start to complain about brand new things that’ll happen in the future.

We’re trying to get those kids kick-started.

And that’s the whole thing about the BEAM Robot Games. Not just the ability to be able to build something by yourself, and build up your confidence and skills. But also to be able to build your confidence with interacting with people to present your skills.

You’re also selling the robots on the web.

We have a major center called Solarbotics, where we sell kits. But we also have tons of plans, web sites, pictures, movies, and of course we’re in syndication Hell on Discovery Channel, Learning Channel, BBC, ITV, all the appropriate things.

We’re very popular on TV for one very major reason: our devices work. I can put a dozen machines on a table and I will be your robot wrangler for the day. And the camera operators love that, as compared to something like, say — well I won’t actually mention the name of the organization, but I’ll just say that they got 300 roboticists and one working robot, which was broken the day that they showed up with the camera. That’s just inexcusable. It’s a classic example of the complexity barrier. It is so easy to throw a huge amount of effort at something which does very little. The trick, which is basically nature’s trick, is how to get a lot out of very little.

Where is Mark Tilden now? A few years after our interview, he left the lab, moved to Hong Kong to work for WowWee Toys and produced a series of commercial toy robots, including Robosapien. But he seems to have disappeared from the internet for the past decade, and Google is no help. If you can help reconnect me with him, I’d be grateful.

Thanks to AI! The images in this article were pulled off of a DV video cassette and were extremely low resolution. Generative AI helped to enlarge and enhance them.