Sensors, soft resistance, and AI as social imaginary

An interview with artist Mona Hedayati

This is an edited transcript of our interview. You can read a more condensed version here.

Q: Do you prefer work that immediately communicates what it’s about, or remains mysterious?

A: Because I’m an experiential and intuitive person, for me the work works when it works. If I have to go to a talk to understand what the work is about, or read ten pages of didactic text, even though I’m a researcher, to me there’s a clear rupture. To me there’s a clear distinction between work you show at academic conferences and in the real world.

When you put something out there for the public, work that’s too academic just won’t work. Something needs to talk to them, they have to be able to connect to something. So I really try hard in my work to make it visceral, but at the same time to have a strong conceptual backbone.

For work that’s purely experiential and performance-based and visceral, like some of Marina Abramovic’s work, there’s often nothing other than, “We are human, we are embodied and we connect.” The other side, the intellectual work, is supposed to lack all that. But it doesn’t need to be like this.

So my work falls into that crack in between. Technical people see my work and say, “Oh, your work is not about AI actually.” And that’s true — my work is not about technology at all! If I have to write something about it, I can talk about all this background — why things are the way they are — it’s a subversion of tech, it’s what I call soft resistance. But that’s not front and center when you see the work.

Tell me about your latest performance.

You see me sitting on the floor. It’s dark — you barely see me or anyone else around, and everyone else is also seated on the ground. I have a laptop in front of me. I look around and make eye contact with people. Then I pick up a wristwatch-type thing and put it on. And then the sound slowly starts. It starts with the sound of someone breathing, with different rhythms — longer, shorter, gasping. I used sound design software that builds up volumes of sound based on particle systems, so it multiplies whatever sound you have by 100, 1000 or even 100,000. And when large volumes like this are built, it becomes difficult to tell what you’re hearing. But I start with simple, original breathing rhythms, to help the legibility of the work, then it builds up, eventually coming back to the original breath.

After about a minute, you realize that these breaths are forming a kind of rhythm. From there, it keeps evolving.

It looks like I’m just doing something on my computer, and I look maybe slightly stressed. Halfway through — it lasts about 20 minutes — a projection starts on the wall. It shows a sequence of three videos, each one is split-screen. In each, you see two moving images — one moving slowly, the other one faster, but the same thing. The images are also heavily filtered — like military footage. You see movements but can’t make a coherent narrative — people running in a street, there’s something like a gun at some point, a suggestion of police violence. Basically, between representation and abstraction, you can’t easily put the pieces together.

After looking at my laptop for a while, I get up and go and sit with the audience watching this. Once the video ends, I go back to the center.

The sound is live. The machine learning part is not — it consists of outputs I got when I trained this model on maybe five hours of breathing patterns — when I was watching videos of the recent protests in Iran, where I come from. I’m sitting on a Persian rug.

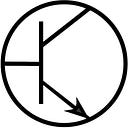

The live sound is my own recorded breathing patterns, manipulated by sensor readings as I’m watching. There are four sensors on the wrist-worn device. First is galvanic skin response, which is skin conductance — the hallmark of your stress response. It’s used in lie detectors and biofeedback. Then there’s a BVP [blood volume pulse] sensor, which takes your pulse rate, plus derivatives like your blood oxygen level, heart rate, inter-beat interval — which is also great for measuring stress because once you get stressed the length of the beats is what changes. And then there’s a thermometer that takes your skin temperature, and an accelerometer for movement.
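The inter-beat interval mentioned above can be made concrete with a short sketch. It assumes the wrist-worn device reports the timestamps (in seconds) of detected pulse peaks. The function names, the sample values and the mapping from heart rate to a playback rate are all hypothetical illustrations, not the pipeline used in the performance.

```python
from statistics import mean

def inter_beat_intervals(peak_times):
    """Return the gaps (in seconds) between consecutive pulse peaks."""
    return [later - earlier for earlier, later in zip(peak_times, peak_times[1:])]

def heart_rate_bpm(ibis):
    """Average heart rate implied by a list of inter-beat intervals."""
    return 60.0 / mean(ibis)

def playback_rate(bpm, rest_bpm=60.0, lo=0.5, hi=2.0):
    """Hypothetical mapping: speed the sound up as heart rate rises, clamped to [lo, hi]."""
    return max(lo, min(hi, bpm / rest_bpm))

# Assumed example data: peaks arrive closer together as stress rises.
peaks = [0.0, 1.0, 1.9, 2.7, 3.4, 4.0]
ibis = inter_beat_intervals(peaks)
bpm = heart_rate_bpm(ibis)
rate = playback_rate(bpm)
print(ibis, bpm, rate)
```

Shrinking intervals mean a rising heart rate, which in this sketch nudges the breathing sound to play faster. Any other sound parameter (volume, density, filtering) could be driven the same way.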

So the idea is that you’re watching this, and your responses to those images are changing the sound.

Exactly. I spent six months watching the news every day of what was happening, and collecting my own data. So the live performance is re-enacting this ritual that I was doing every day.

Is it about image and representation? Is the machine learning part of it conceptually — algorithms and all that?

I did this work thinking that I would use machine learning as a temporal management technology. Because there’s no way I would look over these six months of spreadsheets of data, and be able to do anything with them. I would have to use some statistical techniques, some dimensionality reduction, or some thresholding to min/max the data. Six months’ worth of data from four sensors, plus the audio recordings.
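As a rough illustration of the conventional techniques mentioned here, the sketch below rescales one sensor column to the range 0 to 1 (min/max scaling) and then flags the readings above a threshold. The skin-conductance values and the 0.5 threshold are assumed for the example and do not come from the artist's data.

```python
def min_max_scale(values):
    """Rescale values linearly so the smallest becomes 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A perfectly flat signal has no range to scale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def above_threshold(values, threshold):
    """Return the indices of readings that exceed the threshold."""
    return [i for i, v in enumerate(values) if v > threshold]

# Assumed skin-conductance readings with one stress "bump" in the middle.
gsr = [0.42, 0.40, 0.45, 0.90, 1.30, 0.95, 0.50]
scaled = min_max_scale(gsr)
events = above_threshold(scaled, 0.5)
print(events)
```

Every step here is transparent: you can trace exactly why a reading was flagged, which is the contrast with the neural-network approach discussed next.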

I looked at VAE — variational autoencoder — it’s a generative model used for time-based stuff. It uses neural networks. How it works is, basically it takes in your input data, and it encodes the data into a lower-dimensional space called latent space or encoding space.

It’s not like PCA — principal component analysis — which is a linear form of dimensionality reduction. VAE is nonlinear, so you have no idea how the dimensionality reduction happens. Using the latent space representation that it builds from the original data, the decoder then rebuilds the data. You get a whole bunch of output data that can be considered synthetic data. Or it could be a way of predicting what your data looks like — something that resembles your original data. In creative applications, people look for variations on this output. You can move a point in the latent space to get variations, to see what else the model can come up with.
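The encode, sample and decode steps described above can be sketched as a single forward pass. This is a minimal illustration only: the weights are random stand-ins for trained parameters, the layer sizes and the input frame are assumed, and nothing here reflects the model actually used for the performance.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Random weights standing in for trained parameters (assumption)."""
    return [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_out)]

def linear(weights, x):
    """Matrix-vector product: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def encode(x, w_hidden, w_mu, w_logvar):
    """Map the input to the mean and log-variance of a latent distribution."""
    h = [math.tanh(v) for v in linear(w_hidden, x)]  # the nonlinear step
    return linear(w_mu, h), linear(w_logvar, h)

def sample_latent(mu, logvar, rng):
    """Reparameterisation: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0, 1) for m, lv in zip(mu, logvar)]

def decode(z, w_dec_hidden, w_out):
    """Rebuild a frame of the original size from a latent point."""
    h = [math.tanh(v) for v in linear(w_dec_hidden, z)]
    return linear(w_out, h)

rng = random.Random(0)
n_input, n_hidden, n_latent = 8, 6, 2  # assumed sizes
w_hidden = make_layer(n_input, n_hidden, rng)
w_mu = make_layer(n_hidden, n_latent, rng)
w_logvar = make_layer(n_hidden, n_latent, rng)
w_dec_hidden = make_layer(n_latent, n_hidden, rng)
w_out = make_layer(n_hidden, n_input, rng)

frame = [0.1, 0.3, 0.2, 0.0, -0.1, -0.3, -0.2, 0.0]  # assumed audio frame
mu, logvar = encode(frame, w_hidden, w_mu, w_logvar)
z = sample_latent(mu, logvar, rng)
reconstruction = decode(z, w_dec_hidden, w_out)

# Moving the point in latent space yields a variation on the output.
variation = decode([z[0] + 0.5, z[1]], w_dec_hidden, w_out)
print(len(mu), len(reconstruction))
```

In a real VAE the weights are learned by minimising reconstruction error plus a term that keeps the latent distribution close to a standard normal. The sketch leaves training out and shows only how eight input values pass through a two-dimensional bottleneck and back.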

So I had this dream that I wanted to use a generative model. Once you’re in this geekspace, you sort of become polluted with all the details — “This is so cool that the machine can do that!” Unlike conventional statistical techniques, where the operation is sort of clear. But this is so cool, because you have no idea. Or diffusion models — the same thing. How does it do this? It has that aura of something magical happening.

This is the same rhetoric as in the industry — they use this word magic a lot. And when you’re around this geekspace, it’s contagious. For me, there’s absolutely no need to use such a complex process to make sense of this sensor data. Deep learning is for a million rows and columns in a CSV file; this is a library, it’s manageable. So I talked myself out of it. For me the data is meaningful — it’s about my stress response based on my background as a migrant, how I navigate my identity, all this complex stuff about my experience. This data goes into the VAE and no one knows how the dimensionality is reduced. There is no way to make sense of how the sensor data was compressed.

But for the sound, I used machine learning. Because I had a lot of WAV files. I didn’t want to cherry pick from the library — what do I pick? So instead of doing that I fed it all into the machine, and I thought I would get an “aggregate image”. But surprise surprise, I didn’t get that. Because of all these mysterious processes. But also, when signals don’t have much information content and don’t have that much fluctuation from the baseline —

— because information is surprise.

Exactly. If it doesn’t have much of that, it just doesn’t see it, or it treats it as an outlier, as noise. Subtlety is lost.
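The idea that "information is surprise" has a standard formalisation in Shannon entropy, which is low when a signal is predictable and high when it varies. The sketch below compares a nearly flat signal with a fluctuating one. The sample values and the bin width are assumed for illustration, and this is not a claim about how the model in the performance weighs its input.

```python
import math
from collections import Counter

def shannon_entropy(samples, step=0.1):
    """Entropy in bits of samples quantised into bins of width `step`."""
    bins = Counter(round(s / step) for s in samples)
    total = len(samples)
    # Each bin contributes p * log2(1 / p), where p is its relative frequency.
    return sum((n / total) * math.log2(total / n) for n in bins.values())

# Assumed data: a signal hovering near its baseline versus one that fluctuates.
flat = [0.50, 0.51, 0.49, 0.50, 0.52, 0.50, 0.49, 0.51]
varied = [0.1, 0.9, 0.3, 0.7, 0.5, 0.0, 1.0, 0.2]

print(shannon_entropy(flat), shannon_entropy(varied))
```

At this resolution the flat signal falls into a single bin and carries no surprise at all, while every sample of the varied signal lands in its own bin. Subtle breathing that stays close to the baseline looks, by this measure, like the first case.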

I thought I would just record my breathing patterns, but what I got was ambient information. I was watching most of the videos without sound — first of all because I just couldn’t take it, I was doing this every day, I had to maintain my mental health. In all these videos, people are screaming and shouting. But sometimes I would get curious, would need to know what they were saying. And that would of course leak into these recordings. Those parts it reconstructed perfectly. However, they don’t make any sense. In terms of intonation, the quality of the sound, the speed — all the aura of speech is there, but the meaning isn’t there.

And it’s the same with LLMs. Do they know what words mean? No. It just deals with signals, it doesn’t deal with words, so it tries to reconstruct the signals. This is super interesting.

How does the machine learning relate to your identity, as a migrant for example?

One thing that informs what I do is posthumanism: to think, “What is this human-machine collaboration, what does convergence mean and what can it do? In what ways can it augment or hide certain things?”

Why breathing?

Because my whole research, on a practical level, is about biosensing — thinking about how these embodied rhythms can be datafied, but at the same time, how can I leverage that possibility to create an experience of embodiment for an audience?

Where did you learn the technical stuff?

Mostly I learned it experimentally. I took a course on sensors and electronics. And I did a whole bunch of reading. My partner is a computer scientist, so for years I’ve been around code. I can go on GitHub, find a piece of code and tweak it to work for my purpose.

What tools do you use these days?

Python for everything. Of course today there are lots of tools that help, like ChatGPT. I use VS Code. For machine learning, I just rely on open source stuff. If I run into any issues, I try to troubleshoot. And I also try to help other people when I can.

Tell me about the PhD — you’re about halfway through it.

The project was, I’m a migrant, and I can’t communicate how this feels to other people. Of course language is not a good container for emotions. So I’m looking for ways to try to create an embodied means of communication. That’s why the biosensors and things.

I didn’t start out wanting to use mainly sound; I wanted to use different sensory media. But then as always I had to narrow it down.

At the moment I am adding interactivity to the project, and making this performance more durational. It would be five or six people and me, all sending sensor readings. An aggregate signal isn’t interesting to me — I want to do it one person at a time, so they could hear the differences. This would be half a day or so, with food after the performance. I want to build some sociality around this, and in my culture everything is around food.

I decided from the start that I didn’t want to fall into this trap of abstraction. I don’t want to over-dramatise, and I don’t want documentary filmmaking — empathy-building based on image, but nor do I want to just make a cool-sounding thing where you can’t understand what it’s about at all. Ever since Alvin Lucier in the 1960s, people have been making this biofeedback music with sensor-to-sound pipelines, so it’s nothing new. But food, for me, provides enough context.

Every once in a while, a memory pops into my head — random things that were totally forgotten. In a previous performance, I recited some of these, in my mother tongue (Farsi), and these were recorded and then looped, with the sound again manipulated by my sensor readings. It turned out to be a little too intense, when I talked to people about it. Even though no one could understand Farsi — in fact probably because of that. But for me it’s not about the intensity of the experience. It’s about understanding my state, and I didn’t have any traumatic experience during migration, or anything.

And it’s very multisensory as well, brings up memories and associations.

But also unknown terrain — if you’ve never tasted something before, for example. The unknown can be uncomfortable. I’ve worked on bio-art and edible art, so I do have a background there.

You’re wearing headphones — what are you listening to these days?

Sometimes just noise and reverb [laughs], to electronic, trip-hop stuff, to traditional Iranian music. All that psychedelic rock that I grew up with, when I want to remember that time. I grew up in a family that was really into music. Growing up in Iran, in the mandatory religious courses, I was just listening to bands like Pink Floyd in my headphones. So music was a way to section myself off from things that were imposed on us — an escape. But I never had any formal training.

I guess after the revolution in 1979, music changed a lot?

These days, a whole bunch of intellectuals in Iran listen to traditional Iranian tunes. Lyrically, it has to do with classic Persian poetry. My family was like that, and kids, at a certain point, get sick of it.

We have distinct social classes, very visibly. Between people who haven’t had any secondary education, those who work in factories, versus people who’ve gone to university, maybe their family owns land, the same thing you see elsewhere. But if you belong to this “upper class,” you have much more access to Western stuff. When I turned 12 or 13, I just wanted a pair of Nikes, to listen to what I wanted. And you find yourself in this pseudo-space of East meets West but it’s not quite West.

Things have changed economically, and there is of course a gap between our generation and the new one. They know what they want — they are upfront, saying “No, I don’t wanna be forced to put this thing on my head!” We didn’t have that assertiveness or a say in what we wanted! I was born in 1985. We wanted to do what we liked, but we were not as determined and we didn’t know how to revolt.

But young people today — they’re like, “Climate change? You adults are all WRONG!”

Exactly. They don’t take no for an answer.

In AI, things are changing super fast right now.

They are, but also they aren’t. There’s the “future around the corner” that never happens — that’s the staple of the tech industry since the 1950s when the term AI was coined.

Chris Wiggins’ book How Data Happened is all about the history of data — where statistics comes from in the 19th century, up to AI and machine learning. It’s super eloquently written and accessible — it’s not an academic book. He says that some fields are named after an object of study, like biology. And some fields are named after an aspiration, and that’s AI and machine learning. You know the history — the term was created in order to ask for funding. That didn’t go anywhere.

This recent push is just about transformers — stacking these layers, and once you have enough layers… But of course you can see absolutely nothing inside, and this is what they call the problem of interpretability. It’s pure computing power — it’s not very surprising. That’s why Noam Chomsky calls machine learning basically a brute force mechanism. Compute (computing power) plus big data. And you just create correlations in the data.

It’s kind of a black box on both sides — for example in your performance, some data goes into the machine, something comes out, and it has no semantic understanding, as you said. At the same time, you have all of that, and the machine cannot access it. So you’re a black box to the machine.

Artists usually just want to get some damn results out of the algorithm. They couldn’t care less about what goes on inside. There’s that shiny veneer of something you have no idea how it operates, but it brings you results.

Historically, art has always been about creating unique experiences for audiences. Now here’s this machine that can output things that are unique in many different ways as it doesn’t correspond with our logic. You can understand why it has such a huge lure on a creative level.

My view keeps changing on this. I’m interested to know whether you think machines can be creative in a way that we understand? In that sense can they be useful in creative work that humans do?

I don’t like to venture into this area. It’s like asking if ChatGPT “understands” — no, it doesn’t. Meaning is just not there. A machine has no idea what creativity means.

Does it matter, if the results become “useful” in some way?

Hito Steyerl calls this “the mean image” — as in, just average, but also images that, because of glitches in algorithmic reconstruction, can look just awful. I look at this from my background in anthropology: What you get out of the machine is basically a social imaginary. It’s shattered hopes and dreams, that’s what it is. You can call that creative, sure — it is if you feed it a bunch of artists’ work.

The way we process information has nothing to do with the way machine learning algorithms process information. It’s interesting because what we get out of the machine does not correspond to our normative form of information processing and that’s mesmerising.

If I give you a stack of CSV files, there’s a certain way you would look at it: Do the numbers increase or decrease, how can I correlate these? But the machine can handle n dimensions.

What do you think about the idea that our society is based on rationality and classification and language, but there’s this other aspect of things we can’t easily classify or measure or put into words. Of course computers and AI are about numbers and rationality and classification. But is this other world possible, where we value those things that can’t be quantified or put into words — love, emotions, art, sensory experiences?

I think the more technology pushes forward, the more liberal humanism pushes back. Ideas about posthumanism — from Bruno Latour for example — got pushback: “Wait a minute — we don’t even know how to be human yet. So let’s not go there.” Extreme forms of posthumanism flatten the idea of what a human is. I think we’re moving to a space in between — of distributed cognition, between humans and technology.

Particularly after this push for generative machine learning, there is a push to slow down a little. Who builds or creates the truth? Let’s think a little more critically about this symbiosis that’s happening. It’s a way of thinking more about humanism, not posthumanism. Let’s see where we’re heading.

And this goes for regulation, in policy — how can we bring the public into these consultations?

Do you think in that sense that we can learn something from machines — specifically from machine learning — about humans?

That’s what a lot of people are trying to say. But it’s not about simply addressing bias and the ethical issues, but more broadly understanding what’s happening to us. AI is just a mirror of who we are and what we think. It’s a social imaginary.

Are you generally optimistic or pessimistic?

I think things are gonna keep moving, and I think there’s a good level of awareness that things could go wrong, even among the general public. If schools and universities are already drafting guidelines, it’s no longer this niche thing that only a few people use or know about.

I don’t think there’s any way of going back or slowing down. I do think the changes are not going to be as exponential as some people think.

I’m more worried about the artistic level. I don’t care about the copyright issues — if someone wants to use some of my work, go for it. But look at art history — once something comes along, it’s going to be exploited. Artists need to eat, they need to produce work, and therefore ride the bandwagon. They’ll use and abuse any tool or technology.

Any particular artists that you like these days?

Stephanie Dinkins is someone I really respect. Using technology, not for its own sake but to tell her own story. Artists do this with whatever craft — some artists with textiles, some with technology. But I don’t necessarily think of technology as merely a tool — as a posthumanist I think about distributed cognition and all that.

What I mean is people who make critical work using technology, not necessarily “about” technology. And not only the glossy, superficial use of technology — the bigger the image, the louder the sound, the more people are attracted. And sometimes that becomes the end point.

In a way it comes back to this question of whether an artwork communicates something intuitively, or whether giving the viewer some information takes it to another level.

Umberto Eco talks about this in his book The Open Work. As a traditionally trained artist, I believe that materiality creates imaginary. We don’t need to close that imaginary. In my performance, I could just visualise my sensor data, but I don’t want to make the work too didactic. I don’t see this as a weakness but as a gesture of generosity — for it to do things to different people with different mindsets, at different levels. I don’t wanna spoon-feed people with my drama. I’ve performed it at music festivals where people just enjoyed the sound, without caring about any of the content. That’s totally cool with me. And I’ve performed it in critical spaces where people didn’t care about the sound, just wanted to know, what am I doing? What is this about? Having a didactic text on the wall is fine, but trying to integrate that into the work, I’m not a fan of.

Of course most of my time spent on a work is just play — messing around with the technology. You never know — the machine just doesn’t behave the way you want it to, a lot of the time. “Oh it was working on my laptop just two minutes ago!” I used to get frustrated with all this stuff, but I’ve learned that if you work in this realm, you have to accept the unexpected.

So what are you working on now? What’s coming up?

I’m gonna do this performance again in Portugal. I might change things a little bit in the act, not the sound. Over the holidays I wanna work on this sonification pipeline.

And finish writing some stuff about all these issues. One is about how the body and data are at odds — I explore this at a micro level by tracing a sensor reading from biosignals to digital signals to machine learning to output. If the body is lost somewhere in this pipeline, what could we do to remedy this? Art and critical thinking are one way. I use a method called “data visceralization” to analyse this — “data selves”, cognitive assemblages, how are agencies cut?

Visit Mona Hedayati’s website.